MiniMax M1 80K API

A 456B-parameter hybrid MoE reasoning model with an 80K-token thinking budget, lightning attention, and a 1M-token context window, built for complex problem-solving and extended reasoning.

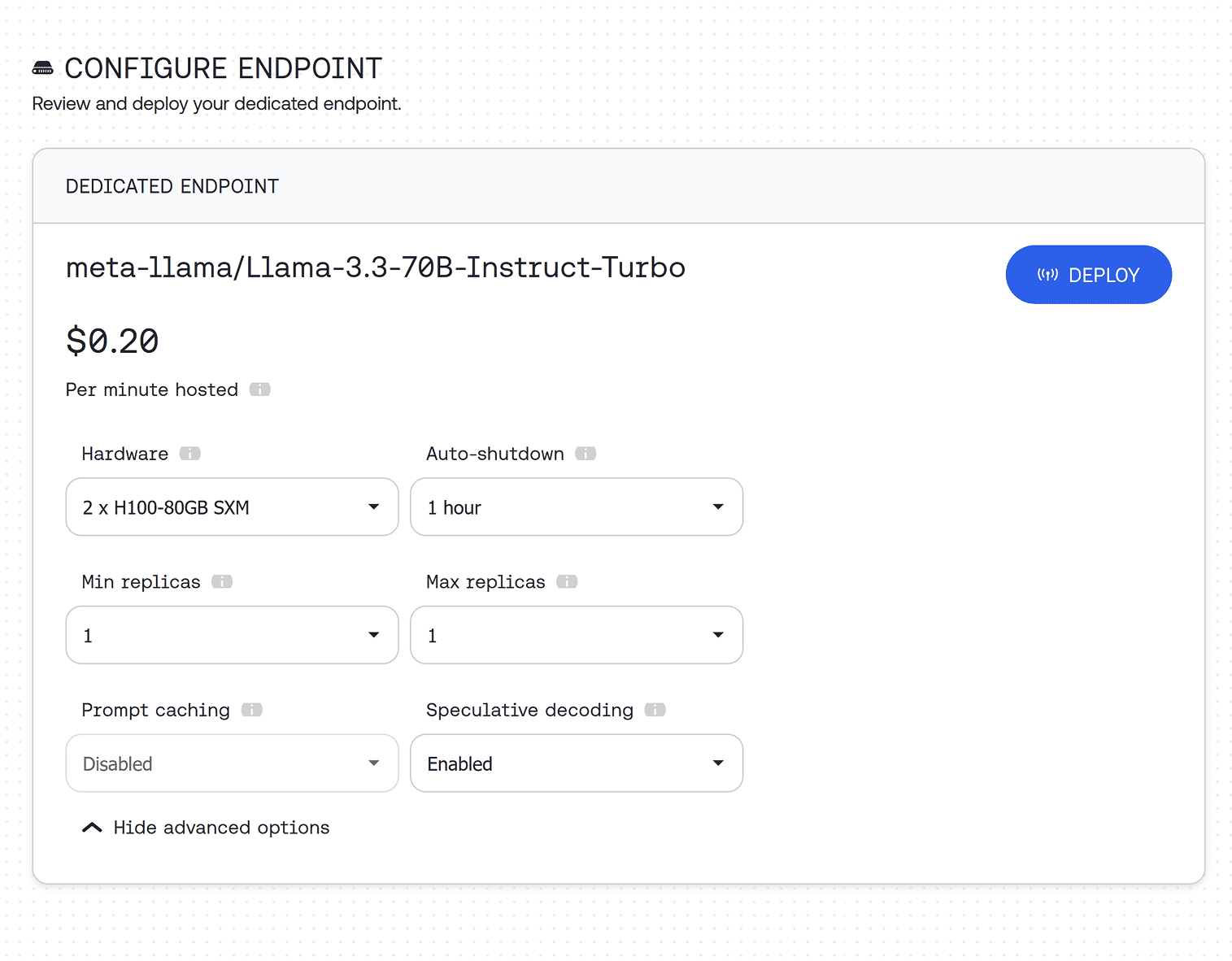

To run this model you first need to deploy it on a Dedicated Endpoint.

MiniMax M1 80K API Usage

How to use MiniMax M1 80K

Model details

Architecture Overview:

• Hybrid Mixture-of-Experts with 456 billion total parameters and 45.9 billion activated per token

• Lightning attention mechanism that enables efficient scaling of test-time compute

• 1 million token context window, 8x larger than that of DeepSeek R1, for processing very long documents

• Advanced hybrid attention design optimized for reasoning and long-context understanding
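The figures in the list above can be put in proportion with a little arithmetic. The sketch below only restates the quoted numbers; the "implied baseline" is simply 1M divided by the quoted 8x factor, not an independently sourced context size for DeepSeek R1.

```python
# Figures quoted in the architecture list above.
TOTAL_PARAMS_B = 456.0      # total parameters, in billions
ACTIVE_PARAMS_B = 45.9      # parameters activated per token, in billions
CONTEXT_TOKENS = 1_000_000  # context window, in tokens
CONTEXT_RATIO = 8           # the "8x" comparison from the list above

# Share of the model that is active for any single token.
active_share_pct = round(ACTIVE_PARAMS_B / TOTAL_PARAMS_B * 100, 1)

# Context size the 8x comparison implies for the baseline model.
implied_baseline_tokens = CONTEXT_TOKENS // CONTEXT_RATIO

print(f"Active share per token: {active_share_pct}%")
print(f"Baseline context implied by the 8x claim: {implied_baseline_tokens:,} tokens")
```

Roughly one tenth of the parameters are active per token, which is the usual reason a Mixture-of-Experts model costs less per token than a dense model of the same total size.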

Training Methodology:

• Large-scale reinforcement learning on diverse problems from mathematical reasoning to software engineering

• CISPO, a reinforcement learning algorithm that clips importance-sampling weights rather than token updates

• 80K thinking budget for extended reasoning capabilities and complex problem-solving

• Trained on sandbox-based real-world software engineering environments
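The difference between clipping importance-sampling weights and clipping token updates can be illustrated per token. The following is a minimal sketch, not the training code: the function names, the epsilon values, and the sample tokens are all assumptions chosen for illustration, and batching, loss normalization, and advantage estimation are omitted. It compares the coefficient that multiplies the gradient of the token log-probability under a PPO-style clipped objective with the coefficient under weight clipping.

```python
def clipped_is_weight(ratio: float, eps_low: float, eps_high: float) -> float:
    # Clip the importance-sampling ratio into [1 - eps_low, 1 + eps_high].
    return min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)


def weight_clip_grad_coeff(ratio: float, advantage: float,
                           eps_low: float, eps_high: float) -> float:
    # Weight clipping: the clipped ratio is treated as a constant
    # (stop-gradient), so every token keeps a non-zero contribution.
    return clipped_is_weight(ratio, eps_low, eps_high) * advantage


def ppo_clip_grad_coeff(ratio: float, advantage: float, eps: float) -> float:
    # PPO-style objective min(r * A, clip(r) * A): once the clipped branch
    # is selected, the token's gradient is exactly zero.
    clipped_out = (advantage > 0 and ratio > 1.0 + eps) or \
                  (advantage < 0 and ratio < 1.0 - eps)
    return 0.0 if clipped_out else ratio * advantage


# Illustrative (ratio, advantage) pairs; eps = 0.2 is an arbitrary choice.
tokens = [(0.9, 1.0), (3.0, 1.0), (0.3, -1.0)]
for ratio, adv in tokens:
    ppo = ppo_clip_grad_coeff(ratio, adv, eps=0.2)
    wc = weight_clip_grad_coeff(ratio, adv, eps_low=0.2, eps_high=0.2)
    print(f"ratio={ratio:.1f} adv={adv:+.1f}  ppo={ppo:+.2f}  weight_clip={wc:+.2f}")
```

In this toy comparison the second and third tokens are dropped by the PPO-style rule but still contribute under weight clipping, with a bounded weight.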

Performance Characteristics:

• Uses about 25% of the FLOPs of DeepSeek R1 at a generation length of 100K tokens

• Outperforms DeepSeek-R1 and Qwen3-235B on complex software engineering and tool use

• Scores 86.0 on AIME 2024 and 56.0 on SWE-bench Verified, with strong results on long-context tasks

• Optimized for complex tasks requiring extensive reasoning and long input processing

Prompting MiniMax M1 80K

Reasoning Capabilities:

• Advanced reasoning model with 80K thinking budget for complex problem-solving

• System/user/assistant format optimized for extensive reasoning chains

• Lightning attention mechanism enables efficient scaling of test-time compute

• Particularly suitable for tasks requiring processing long inputs and thinking extensively

Optimization Settings:

• Recommended sampling: temperature 1.0 and top_p 0.95, to balance creativity and logical coherence

• General scenarios: "You are a helpful assistant"

• Mathematical tasks: "Please reason step by step and put your final answer within \boxed{}"

• Web development: Detailed engineering prompts for complete code generation
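The settings above can be collected into a request body. The sketch below assumes the endpoint accepts an OpenAI-style chat payload (`model`, `messages`, `temperature`, `top_p`); that shape, the `MODEL_ID` placeholder, the helper names, and the choice to send the prompts in the system role are assumptions, not details confirmed by this page. It also includes a small helper for reading the final answer back out of a `\boxed{}` response.

```python
import json

MODEL_ID = "your-minimax-m1-80k-endpoint"  # hypothetical placeholder, not a real ID

# Prompts quoted from the settings list; using the system role is an assumption.
SYSTEM_PROMPTS = {
    "general": "You are a helpful assistant",
    "math": "Please reason step by step and put your final answer within \\boxed{}",
}


def build_request(task: str, user_message: str) -> dict:
    # Assemble a chat payload with the recommended sampling settings.
    return {
        "model": MODEL_ID,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPTS[task]},
            {"role": "user", "content": user_message},
        ],
        "temperature": 1.0,
        "top_p": 0.95,
    }


def extract_boxed(text: str):
    # Return the contents of the last \boxed{...} in text, or None.
    # Braces are counted so nested LaTeX such as \frac{1}{2} survives intact.
    marker = "\\boxed{"
    start = text.rfind(marker)
    if start == -1:
        return None
    depth = 1
    chars = []
    for ch in text[start + len(marker):]:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(chars)
        chars.append(ch)
    return None  # unbalanced braces


payload = build_request("math", "What is 1/4 + 1/4?")
print(json.dumps(payload, indent=2))
```

The payload is only built and printed here; sending it depends on the client and authentication used for the Dedicated Endpoint.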

Advanced Features:

• Function calling capabilities for structured external function integration

• Supports extensive multi-turn conversations with maintained context

• Efficient reasoning budget allocation for optimal performance vs cost balance

• Superior performance on competition-level mathematics and complex coding tasks
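Function calling usually means describing each external function with a JSON schema and then executing whatever call the model returns. The sketch below assumes the OpenAI-style `tools` and tool-call layout, which this page does not confirm; `get_weather`, `REGISTRY`, `dispatch`, and the hand-written `example_call` are hypothetical names for illustration, not output from the model.

```python
import json

# Hypothetical tool description in the common OpenAI-style layout (an assumption).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Return a short forecast for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]


def get_weather(city: str) -> dict:
    # Stub standing in for a real external service.
    return {"city": city, "forecast": "sunny"}


# Maps tool names the model may request to local Python callables.
REGISTRY = {"get_weather": get_weather}


def dispatch(tool_call: dict) -> str:
    # Run one tool call and return the result as a JSON string,
    # ready to be sent back to the model in a follow-up message.
    fn = tool_call["function"]
    args = json.loads(fn["arguments"])  # arguments arrive as a JSON string
    result = REGISTRY[fn["name"]](**args)
    return json.dumps(result)


# Hand-written example of a tool call, not real model output.
example_call = {
    "id": "call_0",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
}

print(dispatch(example_call))
```

Keeping the registry explicit means the model can only trigger functions that were deliberately exposed, which matters once tool calls have side effects.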

Applications & Use Cases

Advanced Reasoning Applications:

• Competition-level mathematics and complex mathematical problem-solving

• Software engineering tasks including SWE-bench verified challenges

• Long-context document analysis and processing with 1M token capability

• Complex agentic tool use and multi-step reasoning scenarios

Technical & Research:

• Real-world software engineering environments and sandbox-based development

• Advanced coding assistance with extensive reasoning capabilities

• Research applications requiring deep analysis and extended reasoning chains

• Complex problem-solving in STEM fields requiring step-by-step reasoning

Enterprise Applications:

• Next-generation language model agents for complex real-world challenges

• Advanced AI systems requiring efficient test-time compute scaling

• Applications demanding extensive reasoning with computational efficiency

• Complex decision-making systems with long-context understanding and analysis