NVIDIA Blackwell platform has arrived on Together AI

Train trillion-parameter models on NVIDIA GB200 NVL72 GPU clusters, powered by our research and expert ops.

Why NVIDIA GB200 NVL72 on Together GPU Clusters?

The world’s most powerful AI infrastructure. Delivered faster. Tuned smarter.

Train trillion-parameter models on a single, unified NVLink domain

NVIDIA GB200 NVL72 connects 72 Blackwell GPUs and 36 Grace CPUs into one liquid-cooled, memory-coherent rack — enabling tightly synchronized, low-latency training at massive scale.

Custom networking over 130TB/s intra-rack bandwidth

We extend GB200’s 5th-gen NVLink performance with tailored fabric designs: Clos topologies for dense LLMs and oversubscribed topologies for MoEs, built on InfiniBand or high-speed Ethernet with full observability.

AI-native shared storage built for checkpoints at scale

Whether you’re running long-horizon training or restarting from a failure, we support AI-native storage systems like VAST and Weka for high-throughput, parallel access to massive datasets and model state.

NVIDIA Grace + Blackwell orchestration, pre-integrated

We validate your Slurm or Kubernetes stack on the actual NVLink and NVSwitch layout to avoid job placement issues and scheduling inefficiencies.

Delivery in 4–6 weeks, no NVIDIA lottery required

We ship full-rack NVIDIA GB200 NVL72 clusters — not just dev kits — with thousands of GPUs available now. You don’t wait on backorders. You start training.

Run by the same people pushing the Blackwell stack forward

Work with engineers who co‑develop Blackwell optimizations; our team continually tunes workloads and publishes cutting‑edge training breakthroughs.

"What truly elevates Together AI to ClusterMax™ Gold is their exceptional support and technical expertise. Together AI’s team, led by Tri Dao — the inventor of FlashAttention — and their Together Kernel Collection (TKC), significantly boost customer performance. We don’t believe the value created by Together AI can be replicated elsewhere without cloning Tri Dao.”

— Dylan Patel, Chief Analyst, SemiAnalysis

Powering reasoning models and AI agents

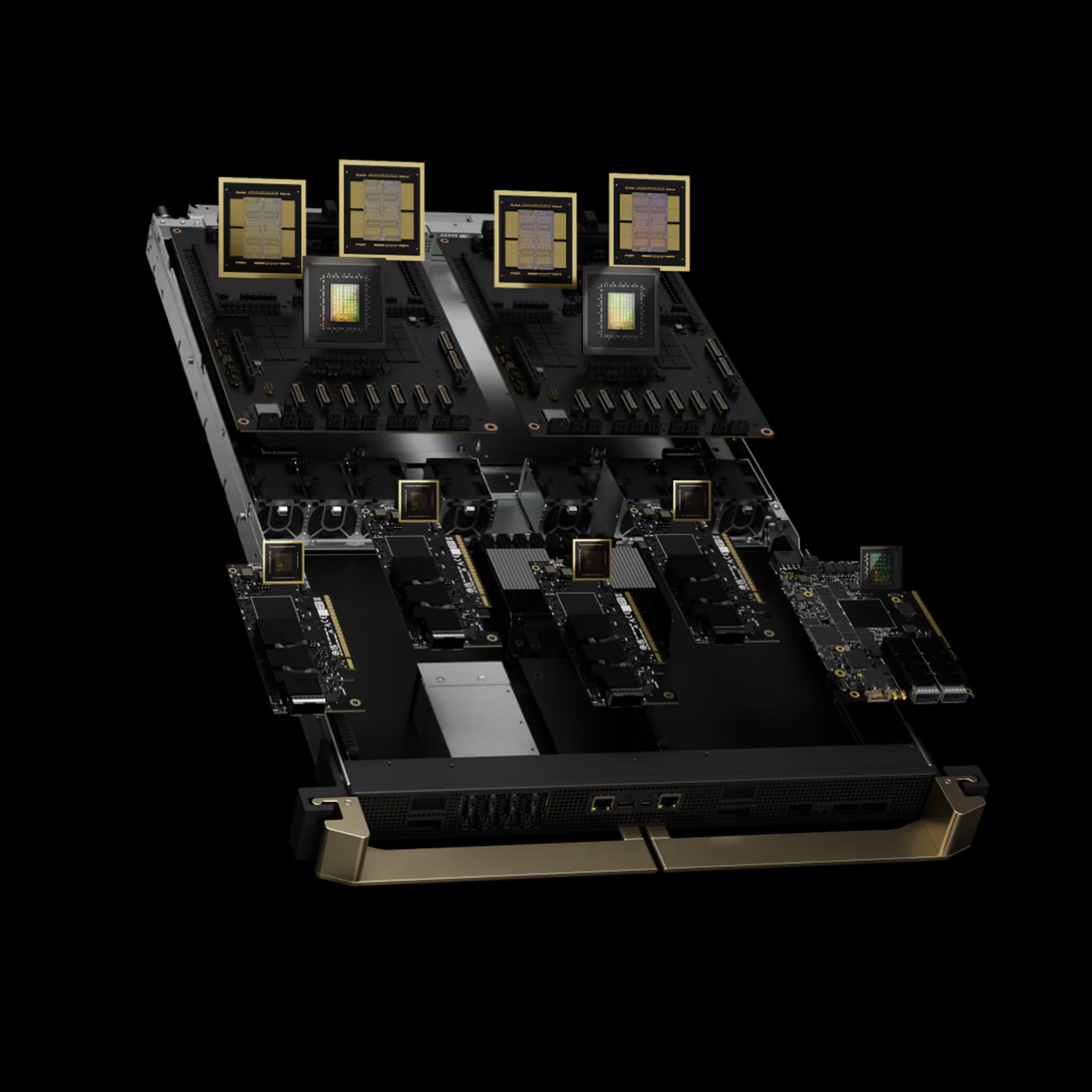

The NVIDIA GB200 NVL72 is an exascale computer in a single rack, unlocking training and inference of frontier trillion-parameter models.

SOTA GPU Compute

Rack-scale powerhouse: 72 Blackwell GPUs and 36 Grace CPUs are fused by NVLink in a liquid-cooled chassis for exceptional bandwidth and thermals.

Unified GPU abstraction: The system appears as one colossal GPU, enabling real-time, trillion-parameter LLM training and inference with minimal orchestration overhead.

GB200 Grace Blackwell Superchip building block: Each module joins 2 Blackwell GPUs to 1 Grace CPU via NVLink-C2C, delivering memory-coherent, ultra-low-latency compute.
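The rack figures above fit together with simple arithmetic. The sketch below is illustrative only: it uses the numbers quoted on this page (72 GPUs, 36 CPUs, 2 GPUs and 1 CPU per Superchip, 130TB/s intra-rack bandwidth), and the even per-GPU split of bandwidth is an assumption made for the example, not a published specification. The constant and function names are hypothetical.

```python
# Figures quoted on this page for one GB200 NVL72 rack.
GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
GPUS_PER_SUPERCHIP = 2   # each GB200 Superchip: 2 Blackwell GPUs + 1 Grace CPU
CPUS_PER_SUPERCHIP = 1
INTRA_RACK_TBPS = 130    # aggregate intra-rack bandwidth in TB/s, as quoted


def rack_summary() -> dict:
    """Derive the Superchip count and an even per-GPU bandwidth share."""
    superchips = GPUS_PER_RACK // GPUS_PER_SUPERCHIP
    # Sanity check: the CPU count must imply the same number of Superchips.
    assert superchips * CPUS_PER_SUPERCHIP == CPUS_PER_RACK
    # Assumption: bandwidth is divided evenly across all GPUs in the rack.
    per_gpu_tbps = INTRA_RACK_TBPS / GPUS_PER_RACK
    return {"superchips": superchips, "per_gpu_tbps": round(per_gpu_tbps, 2)}


print(rack_summary())
```

Under these assumptions, the rack is made up of 36 Superchips, and the quoted aggregate bandwidth works out to roughly 1.8TB/s per GPU.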

LLM TRAINING SPEED RELATIVE TO H100

LLM INFERENCE SPEED RELATIVE TO H100

ENERGY EFFICIENCY RELATIVE TO H100

Technical Specs

NVIDIA GB200 NVL72

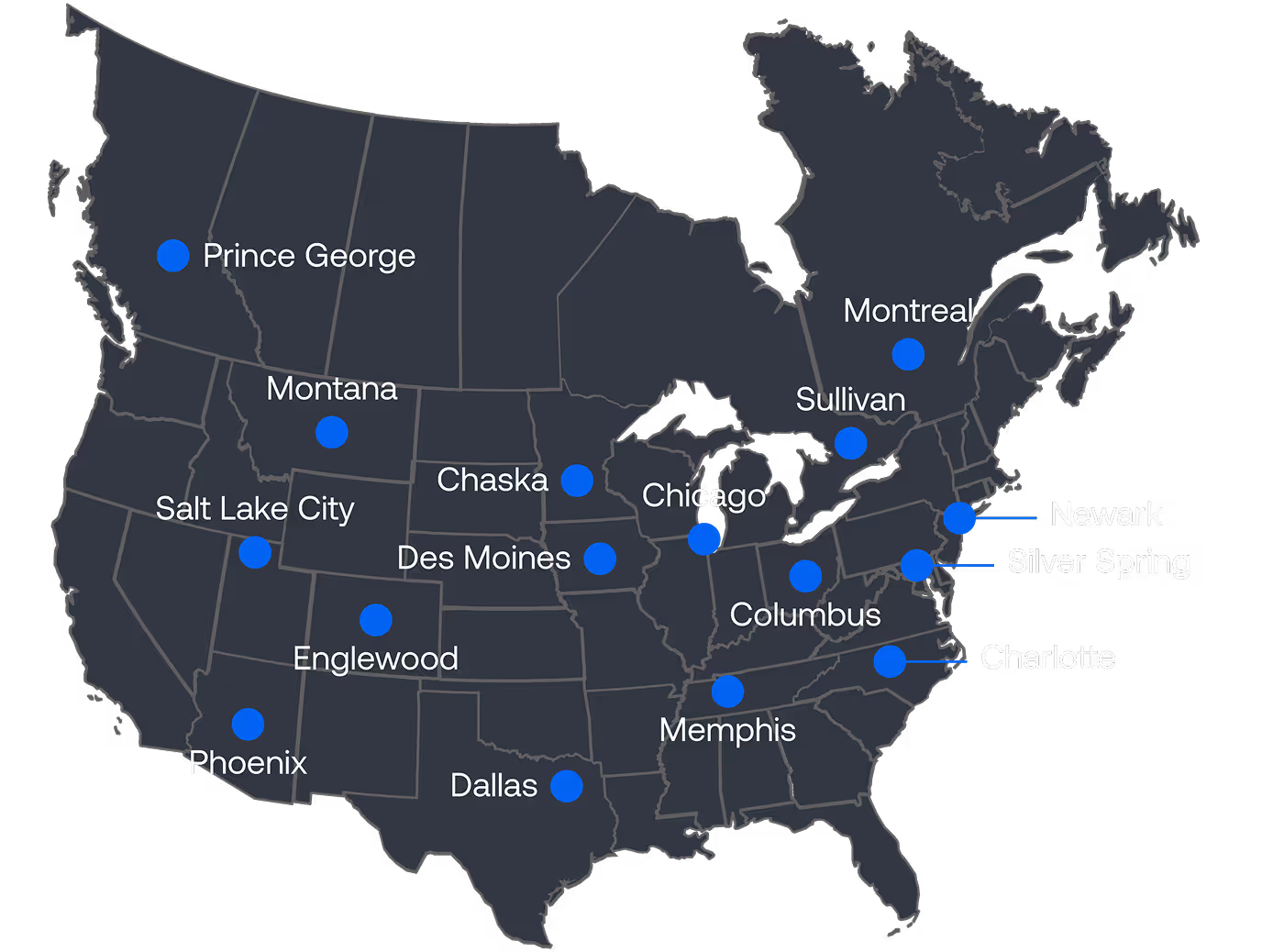

AI Data Centers and Power across North America

Data Center Portfolio

2GW+ in the portfolio, with 600MW of near-term capacity.

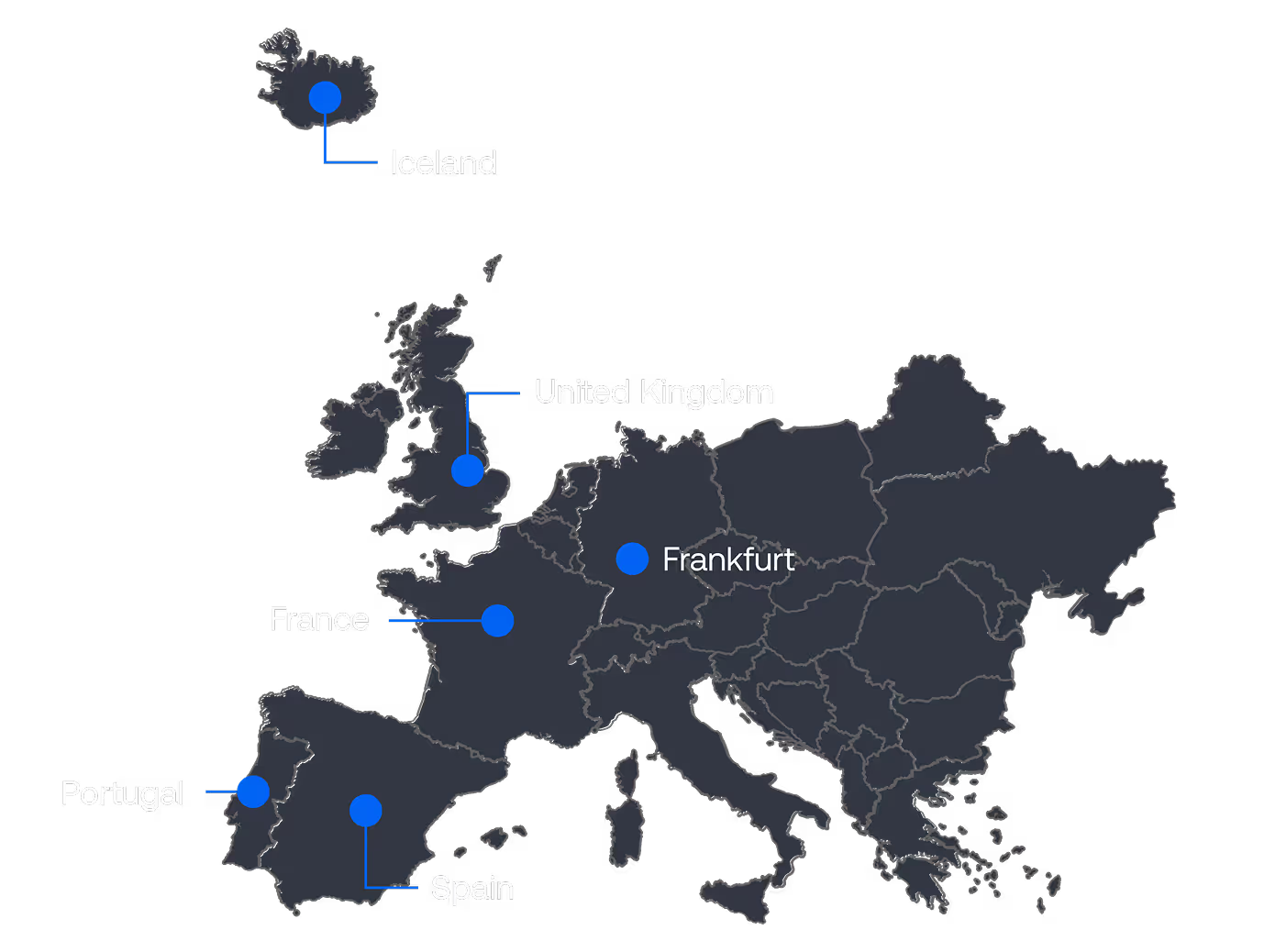

Expansion Capability in Europe and Beyond

150MW+ available in Europe: UK, Spain, France, Portugal, and Iceland.

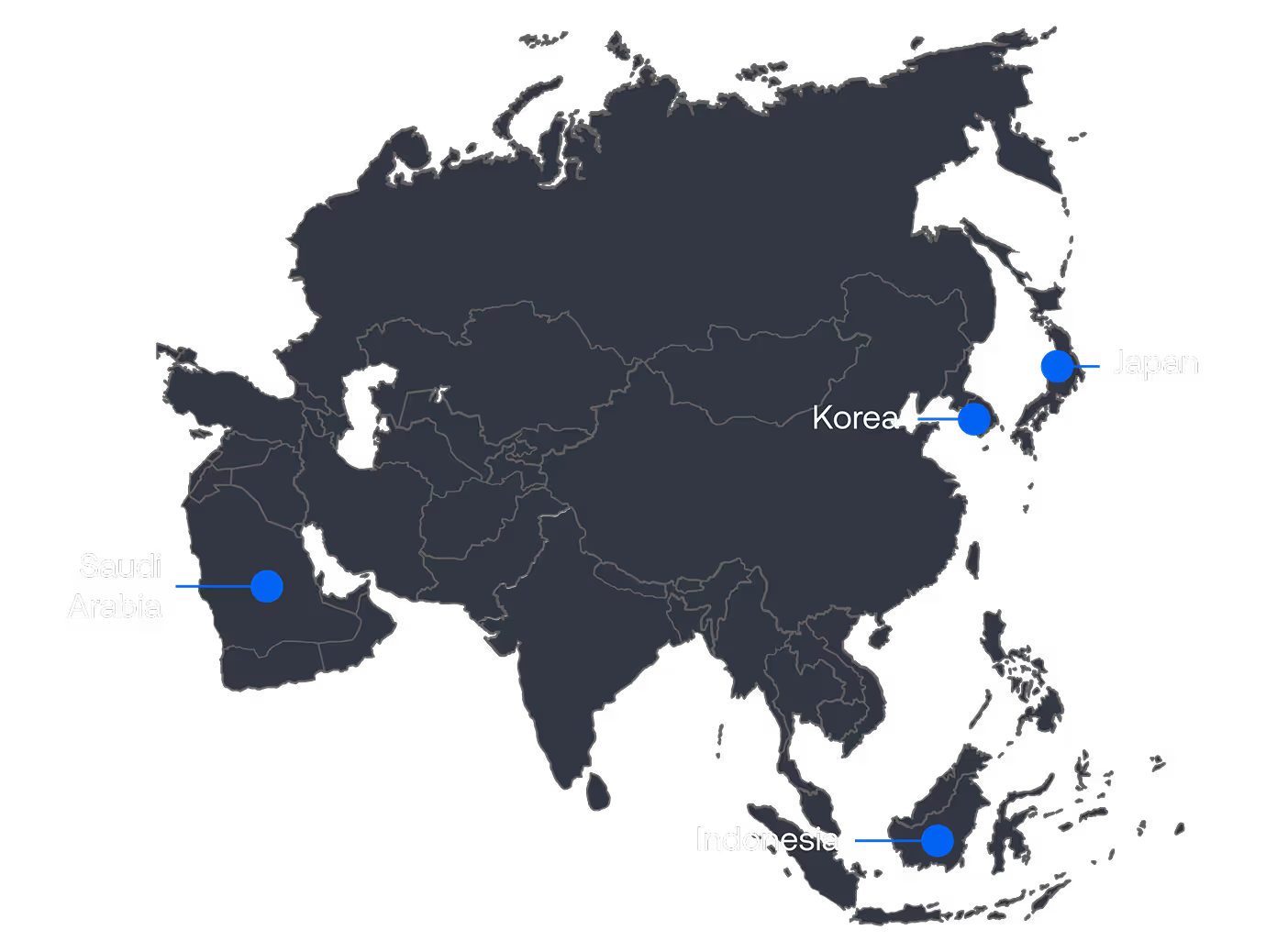

Next Frontiers – Asia and the Middle East

Options available based on the scale of the projects in Asia and the Middle East.

Powering AI Pioneers

Leading AI companies are ramping up with NVIDIA Blackwell running on Together AI.

Zoom partnered with Together AI to leverage our research and deliver accelerated performance when training the models powering various Zoom AI Companion features.

With Together GPU Clusters accelerated by NVIDIA HGX B200, Zoom experienced a 1.9X improvement in training speeds out of the box over previous-generation NVIDIA Hopper GPUs.

Salesforce leverages Together AI for the entire AI journey, from training to fine-tuning to inference of their models, to deliver Agentforce.

Training a Mistral-24B model, Salesforce saw a 2x improvement in training speeds after upgrading from NVIDIA HGX H200 to HGX B200. This is accelerating how Salesforce trains models and integrates research results into Agentforce.

During initial tests with the NVIDIA HGX B200, InVideo immediately saw a 25% improvement when moving a training job over from NVIDIA HGX H200.

Then, in partnership with our researchers, the team made further optimizations and more than doubled this improvement – making the step up to the NVIDIA Blackwell platform even more appealing.

Our latest research & content

Learn more about running turbocharged NVIDIA GB200 NVL72 GPU clusters on Together AI.
