Build what’s next

on the AI Native Cloud

Full-stack AI platform, powered by cutting-edge research.

Full-stack cloud

Powering every step of the AI development journey —from experimentation to massive scale.

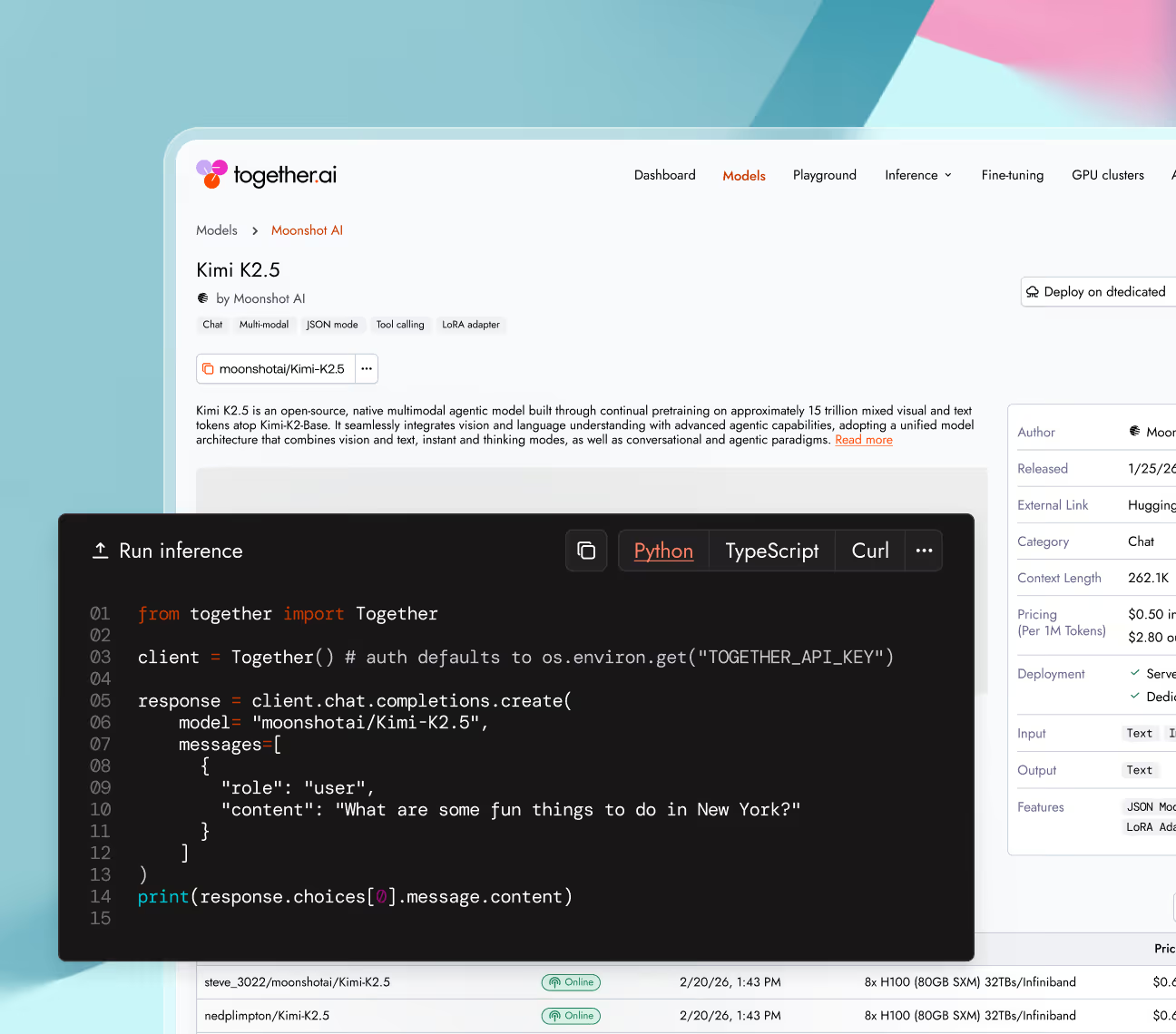

The fastest way to run open-source models on demand. Powered by cutting-edge inference research. No infrastructure to manage, no long-term commitments.

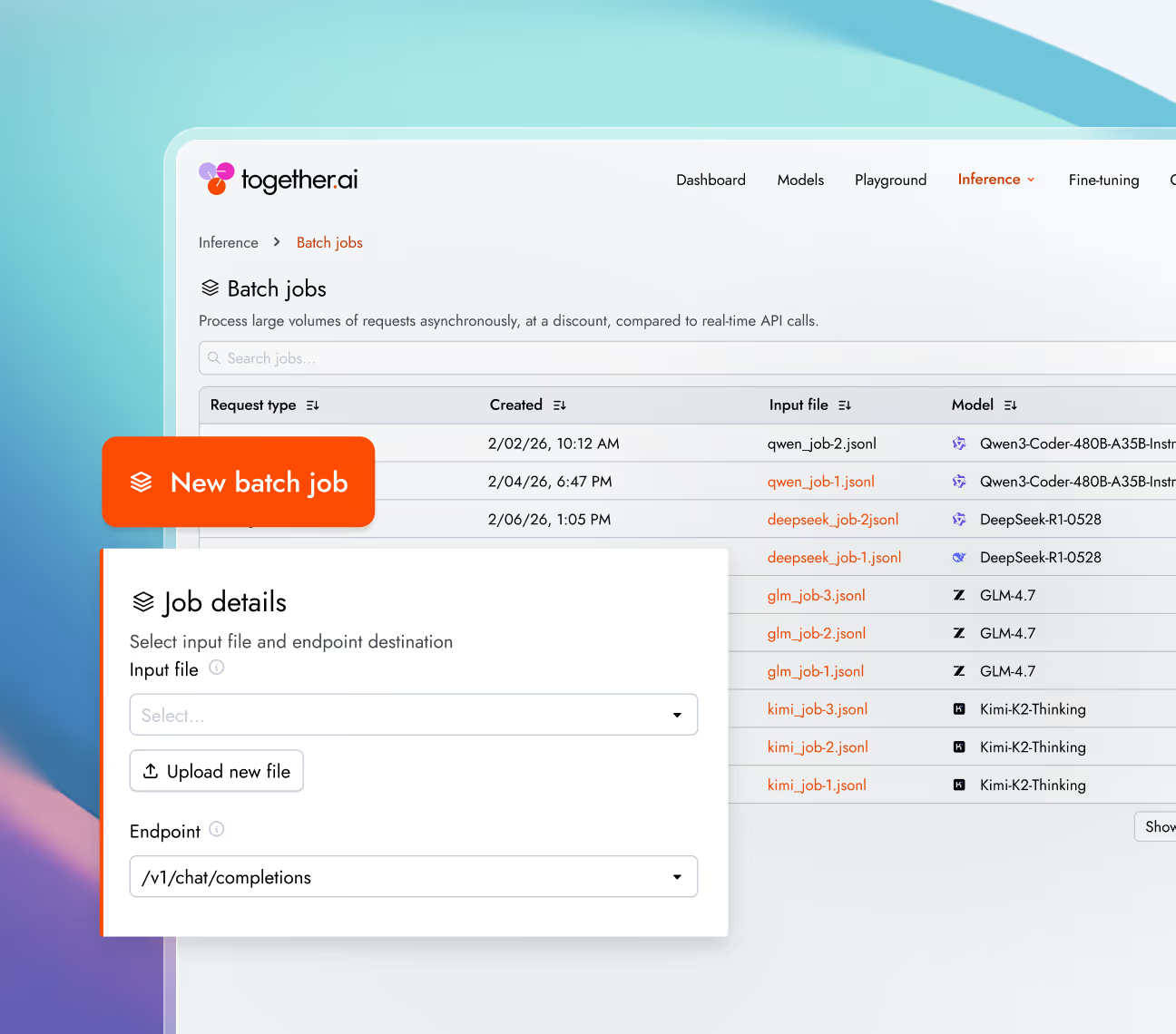

Cost-effectively process massive workloads asynchronously. Scale to 30 billion tokens per model with any serverless model or private deployment.

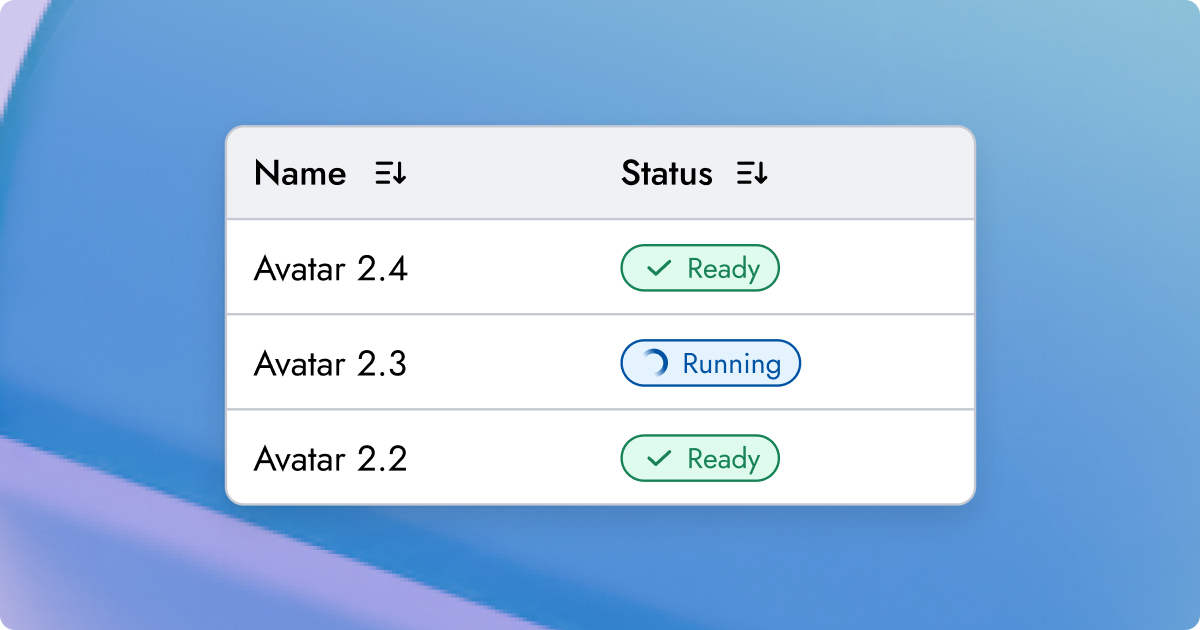

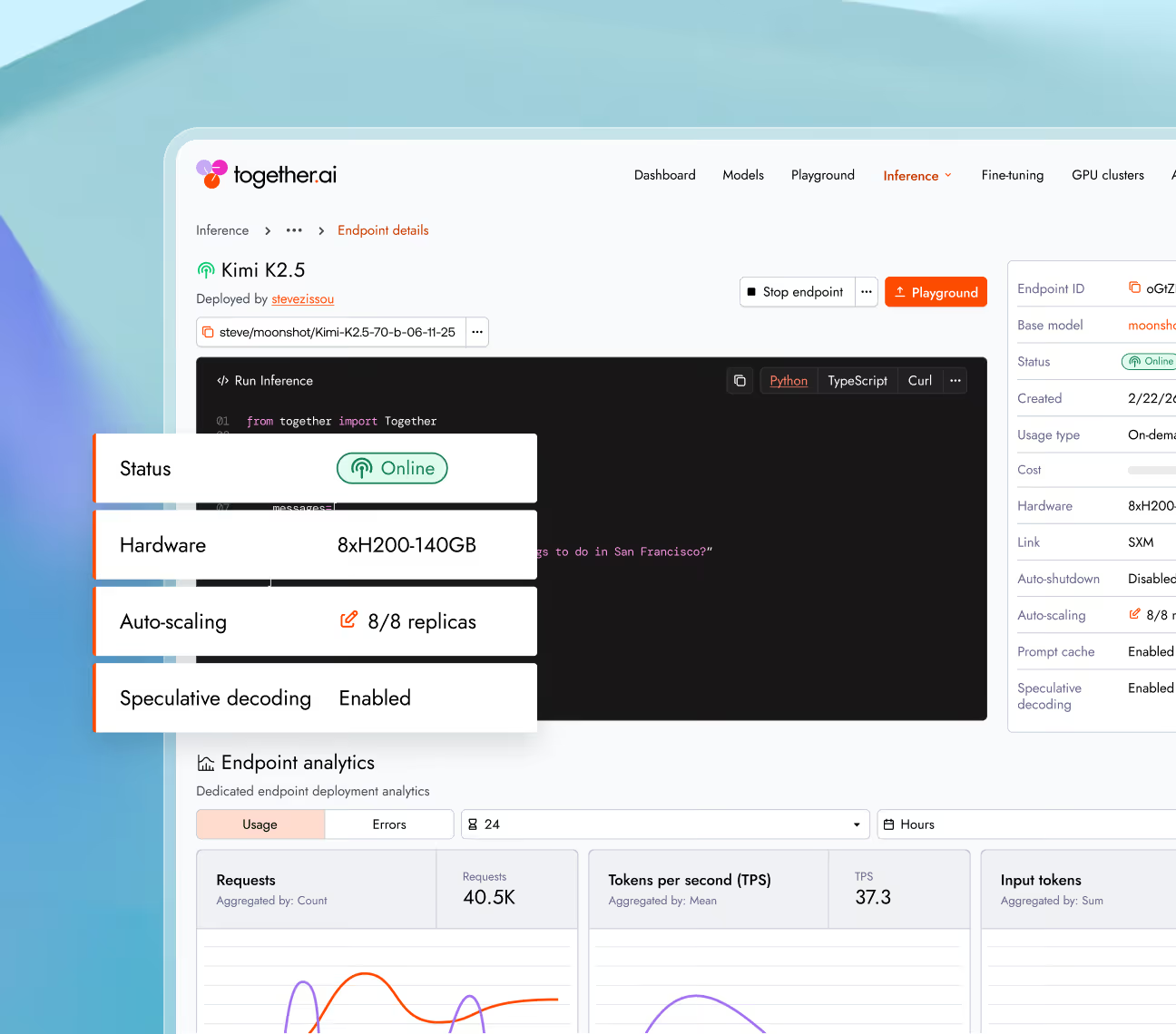

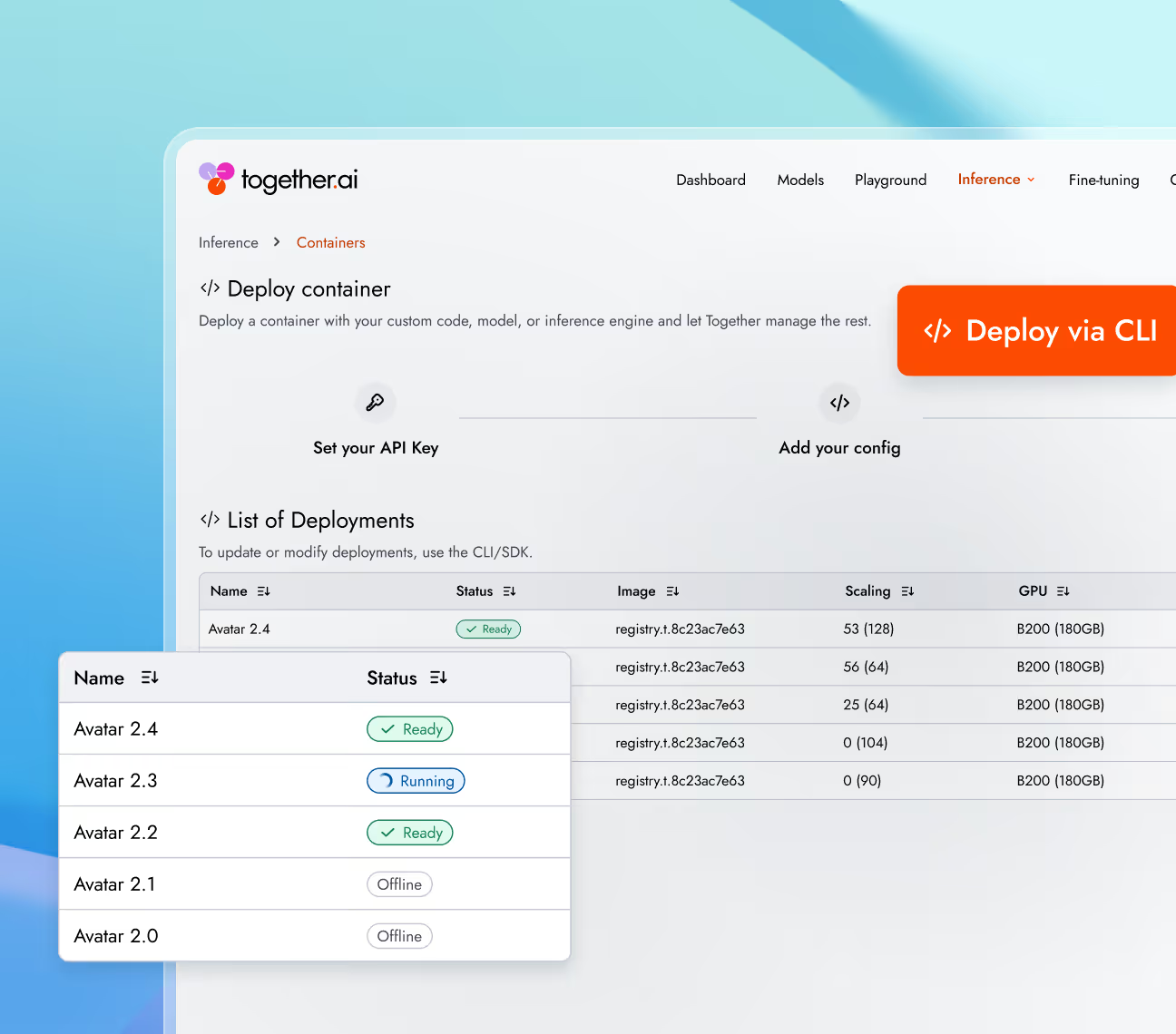

Deploy models on dedicated infrastructure. Purpose-built for teams who need speed, control, and the best economics in the market.

GPU infrastructure purpose-built for generative media workloads. Deploy video, audio, and image models with performance acceleration powered by Together Research.

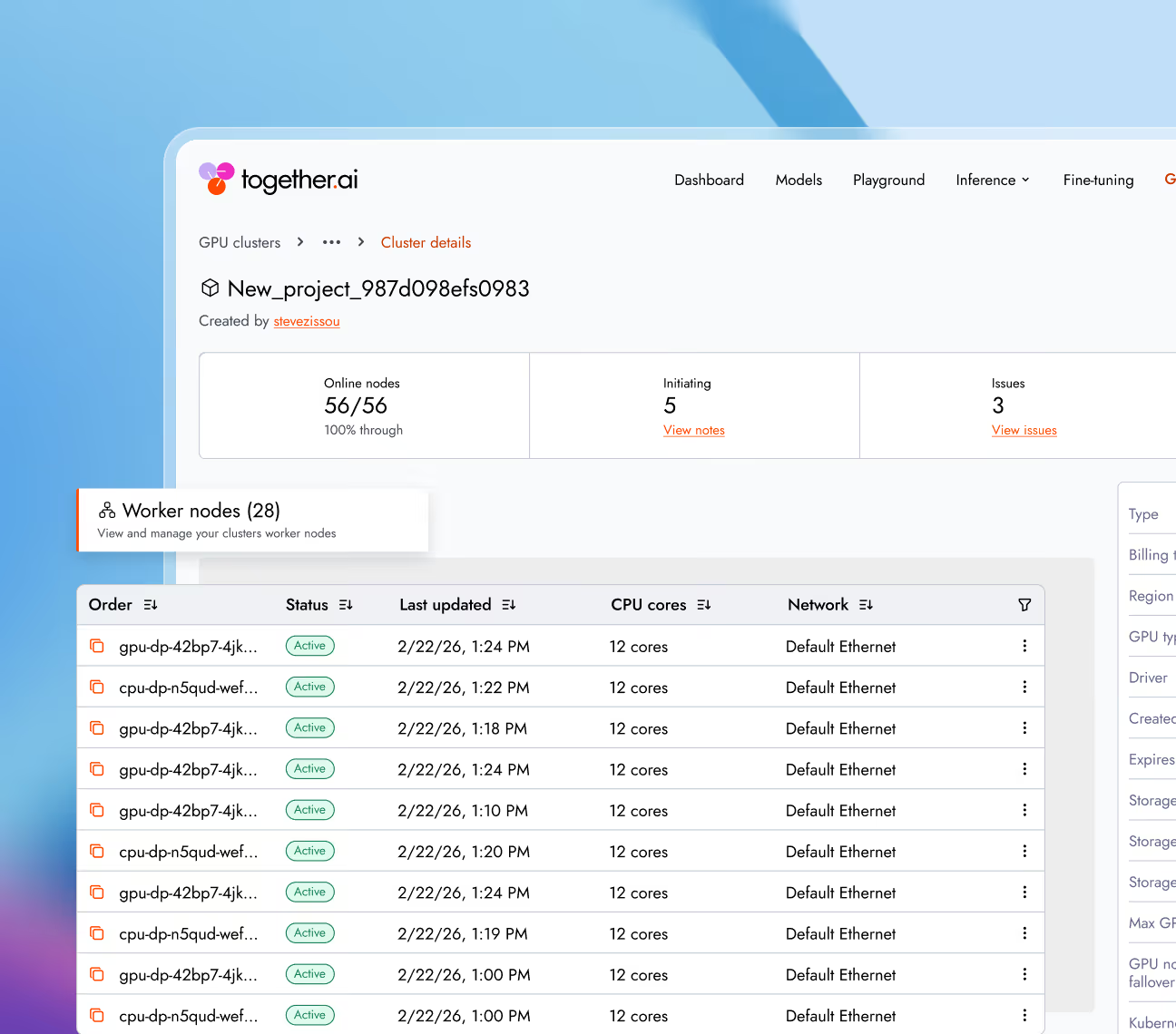

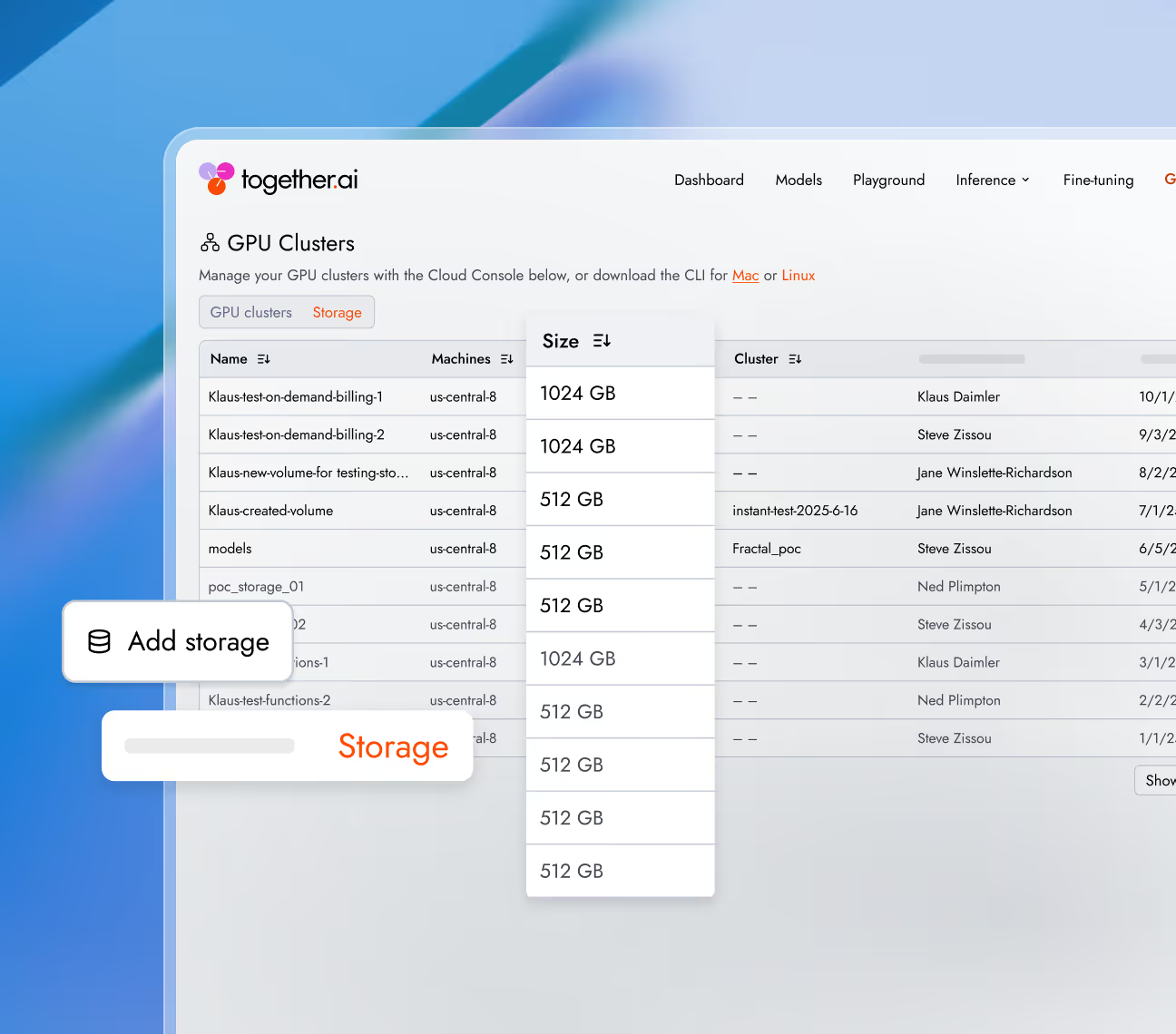

Scale from self-serve instant clusters to thousands of GPUs, all optimized for better performance with Together Kernel Collection.

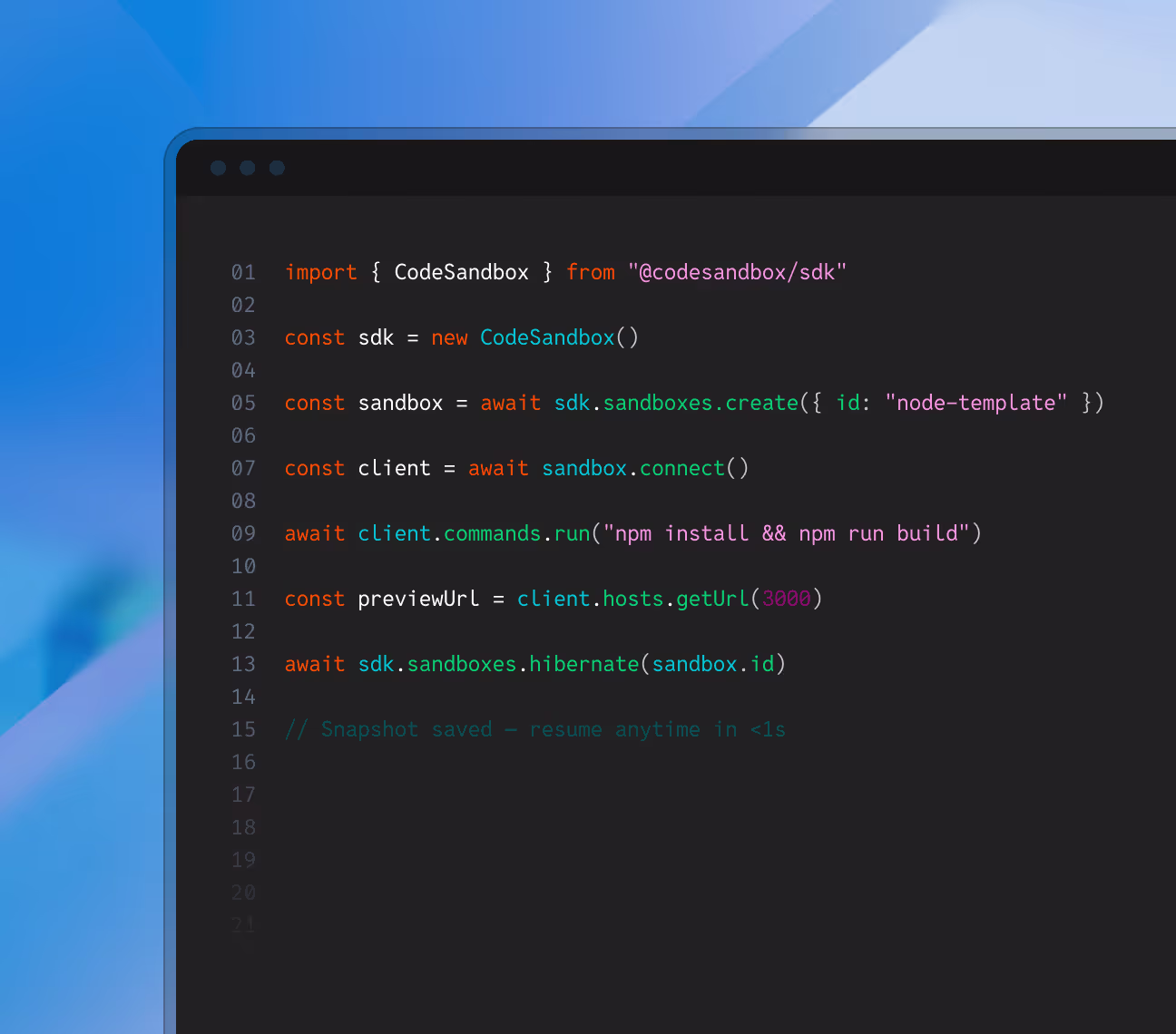

Use fast, secure code sandboxes at scale to set up full-scale development environments for AI apps and agents.

High-performance managed storage for AI-native workloads. Object storage and parallel filesystems optimized for AI, with zero egress fees.

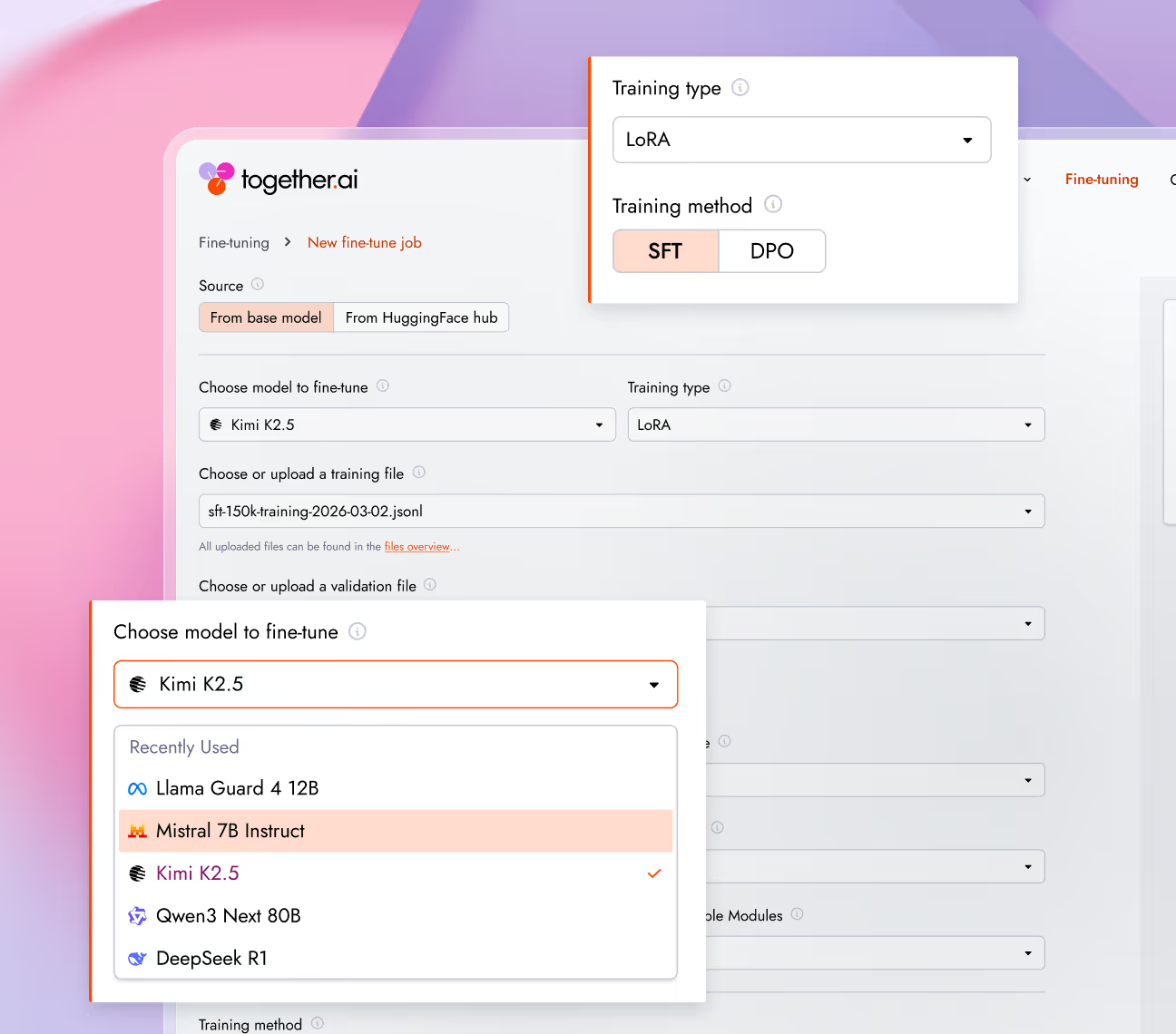

Fine-tune open-source models for production workloads, using the latest research techniques. Improve accuracy, reduce hallucinations, and control behavior — without managing training infrastructure.

Grounded in cutting-edge research

Foundational systems research for production AI.

recognized by

AI natives build on Together AI

See how Together AI powers customers building the next generation of AI products.