From AWS to Together Dedicated Endpoints: Arcee AI's journey to greater inference flexibility

- 95% faster Time to First Token
- 41+ queries per second
- 7+ models deployed

Executive Summary

If you've ever felt overwhelmed by the complexity of today's AI landscape, you're not alone. Amid this entropy, Arcee AI saw an opportunity to simplify AI adoption by creating efficient, smaller language models that help enterprises effortlessly integrate advanced AI workflows.

In this customer story, we explore why Arcee AI transitioned its specialized small language models (SLMs) from AWS to Together Dedicated Endpoints—and how this migration unlocked significant improvements in cost, performance, and operational agility.

Training Small Language Models

At the core of Arcee AI’s strategy is a focus on training specialized small language models (SLMs), typically 72 billion parameters or fewer, optimized for specific tasks.

Using its proprietary training stack, which includes specialized techniques for merging and distilling models, Arcee AI consistently produces high-performing models. These custom models, both open-source and proprietary, excel at distinct workloads such as coding, general text generation, and low-latency use cases, delivering precise and cost-efficient performance.

We’re thrilled to announce that, as of today, seven of these models are available on Together AI serverless endpoints, so you can start using them through the Together API:

- Arcee AI Virtuoso-Large: Powerful 72B SLM, built for complex, cross-domain tasks and scalable enterprise-grade AI solutions.

- Arcee AI Virtuoso-Medium: Versatile 32B SLM built for precision and adaptability across domains, ideal for dynamic, compute-intensive use cases.

- Arcee AI Maestro: 32B SLM for advanced reasoning, excelling in complex problem-solving, abstract reasoning, and scenario modeling.

- Arcee AI Coder-Large: 32B model based on Qwen2.5-Instruct, fine-tuned for code generation and debugging, ideal for advanced development tasks.

- Arcee AI Caller: 32B SLM optimized for tool use and API calls, enabling precise execution and orchestration in automation pipelines.

- Arcee AI Spotlight: 7B vision-language model based on Qwen2.5-VL, refined by Arcee AI for visual tasks with a 32K context length for rich interaction.

- Arcee AI Blitz: Efficient 24B SLM with strong world knowledge, offering fast, affordable performance across diverse tasks.
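As a minimal sketch of calling one of these models, the Python below builds a request against Together's OpenAI-compatible chat completions endpoint using only the standard library. The model ID `arcee-ai/virtuoso-large` is a placeholder assumption (check the Together model library for exact IDs), and reading the key from a `TOGETHER_API_KEY` environment variable is a convention this example assumes.

```python
import json
import os
import urllib.request
from typing import Optional

# Together's OpenAI-compatible chat completions endpoint.
TOGETHER_CHAT_URL = "https://api.together.xyz/v1/chat/completions"

# Placeholder model ID (an assumption): look up the exact identifier of the
# Arcee AI model you want in the Together model library.
MODEL_ID = "arcee-ai/virtuoso-large"


def build_chat_request(
    prompt: str, model: str = MODEL_ID, api_key: Optional[str] = None
) -> urllib.request.Request:
    """Build, but do not send, a single-turn chat completion request."""
    key = api_key if api_key is not None else os.environ.get("TOGETHER_API_KEY", "")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        TOGETHER_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {key}", "Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(req: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__" and os.environ.get("TOGETHER_API_KEY"):
    # Only hits the network when an API key is configured.
    req = build_chat_request("In two sentences, when is a small language model enough?")
    print(send_chat_request(req))
```

Building the request separately from sending it keeps the payload easy to inspect before any network call is made.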

Arcee Conductor & Arcee Orchestra

After an initial focus on developing powerful models, Arcee AI built a software layer on top, currently consisting of two products: Arcee Conductor and Arcee Orchestra.

Arcee Conductor is an intelligent inference routing system powered by a unique 150-million-parameter classifier, small enough that it adds negligible latency. Conductor was trained from scratch to evaluate each query or prompt and route it to the most suitable model based on criteria such as complexity, domain, and task type.

When a user submits a query, Conductor sends it to the best model for that use case, choosing either one of Arcee AI’s specialized models or a state-of-the-art third-party model such as GPT-4.1, Claude 3.7 Sonnet, or DeepSeek-R1.
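Conductor's classifier and routing policy are not public, so the following is only a toy illustration of the classify-then-route pattern described above. Hypothetical keyword rules stand in for the trained 150M-parameter classifier, and the task-to-model mapping (including when to escalate to a third-party model) is invented for this example, not Arcee AI's actual policy.

```python
import re
from typing import Tuple

# Hypothetical keyword rules standing in for Conductor's trained classifier.
CODE_WORDS = {"bug", "debug", "function", "compile", "traceback", "refactor"}
TOOL_WORDS = {"tool", "api", "webhook"}
REASONING_WORDS = {"prove", "why", "plan"}


def classify(prompt: str) -> Tuple[str, bool]:
    """Return (task_type, is_complex) for a prompt."""
    text = prompt.lower()
    words = set(re.findall(r"[a-z0-9_]+", text))
    if words & CODE_WORDS:
        task = "code"
    elif words & TOOL_WORDS:
        task = "tools"
    elif words & REASONING_WORDS or "step by step" in text:
        task = "reasoning"
    else:
        task = "general"
    # Crude complexity signal: many distinct words or an explicit step-by-step request.
    is_complex = len(words) > 150 or "step by step" in text
    return task, is_complex


def route(prompt: str) -> str:
    """Map the classifier's labels to a model name (illustrative policy only)."""
    task, is_complex = classify(prompt)
    if task == "code":
        return "Coder-Large"
    if task == "tools":
        return "Caller"
    if task == "reasoning":
        return "GPT-4.1" if is_complex else "Maestro"
    return "Virtuoso-Large" if is_complex else "Blitz"


for p in ("Fix this bug in my Python function", "Why is the sky blue?", "What is the capital of France?"):
    print(f"{p!r} -> {route(p)}")
```

The key design point the sketch mirrors is that classification is cheap and happens once per query, so the expensive decision (which model to run) is made before any large model is invoked.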

“Most of the time, you think you need a GPT-4.1 caliber model, whereas for a large number of queries you don’t need that level of depth. So Conductor will route to one of our models, which are 95% cheaper than GPT and Sonnet.” – Mark McQuade, CEO

Beyond the drastic cost reduction, Arcee AI found that Conductor improved performance across an entire benchmark suite, because it routes each task to the model best suited for it. Conductor also lets customers bring their own models and tune its configuration to prioritize a specific KPI, such as latency or cost.
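One way to picture KPI-driven configuration is a selection step that filters candidate models by a quality bar and then minimizes the chosen KPI. This is a hypothetical sketch: the `Candidate` fields, the `pick_model` function, and every number below are made up for illustration and do not reflect Conductor's real configuration format or any model's measured performance.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    name: str
    quality: float        # hypothetical task-specific quality score in [0, 1]
    cost_per_mtok: float  # hypothetical USD per million tokens
    ttft_ms: float        # hypothetical time to first token


def pick_model(candidates: List[Candidate], kpi: str, min_quality: float) -> Candidate:
    """Among candidates meeting the quality bar, return the one best for the KPI."""
    eligible = [c for c in candidates if c.quality >= min_quality]
    if not eligible:
        # Nothing clears the bar: fall back to the highest-quality model.
        return max(candidates, key=lambda c: c.quality)
    if kpi == "cost":
        return min(eligible, key=lambda c: c.cost_per_mtok)
    if kpi == "latency":
        return min(eligible, key=lambda c: c.ttft_ms)
    raise ValueError(f"unknown KPI: {kpi!r}")


# Made-up numbers for illustration only; "customer-finetune" plays the role
# of a bring-your-own model.
CANDIDATES = [
    Candidate("customer-finetune", quality=0.78, cost_per_mtok=0.20, ttft_ms=60),
    Candidate("fast-slm", quality=0.80, cost_per_mtok=0.40, ttft_ms=30),
    Candidate("frontier-model", quality=0.90, cost_per_mtok=8.00, ttft_ms=300),
]

print(pick_model(CANDIDATES, "cost", 0.75).name)     # customer-finetune
print(pick_model(CANDIDATES, "latency", 0.75).name)  # fast-slm
```

With the same quality bar, switching the KPI from cost to latency changes the selected model, which is the kind of trade-off a per-customer configuration would encode.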

Arcee Orchestra focuses on building agentic workflows. It enables enterprises to automate tasks through seamless integration with third-party services and data sources. Orchestra stands out with its intuitive no-code interface, enhanced by AI-driven workflow generation, which lets users build automated workflows through simple prompts or voice commands. Orchestra users can power their workflows with a third-party model or with one of the Arcee SLMs specially trained for agentic workflows. These SLMs are carefully fine-tuned to excel at instruction following and function calling, and to understand the nuances of API inputs and outputs.

“Orchestra allows you to automate complex tasks easily, creating workflows using a drag-and-drop interface. It gets really powerful once you start prompting it with a query or voice input to generate a workflow.” – Mark McQuade, CEO

Operational challenges: costs and complexity

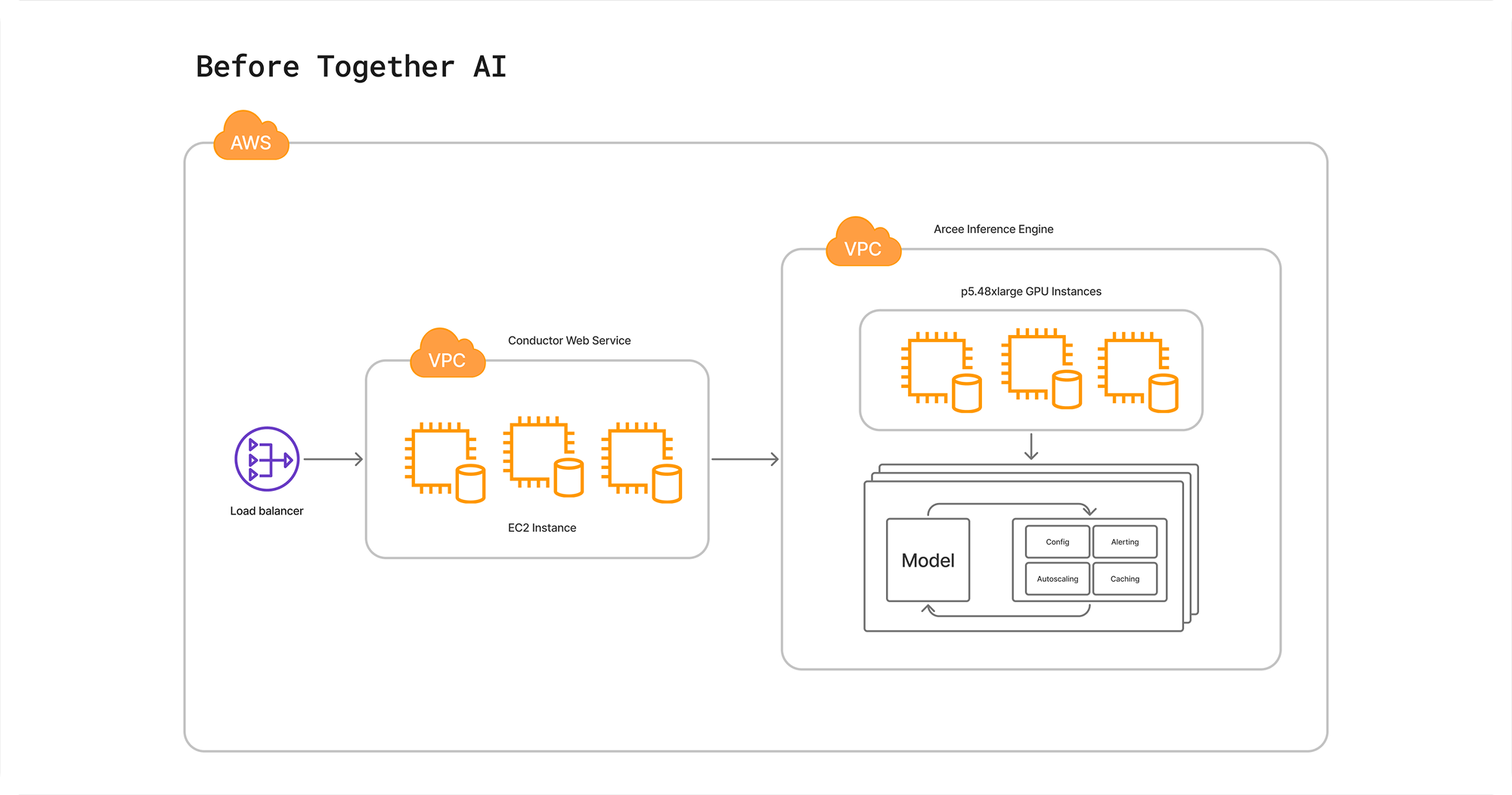

Arcee AI’s models are at the core of its product offering. Originally, Arcee AI deployed these models through AWS's managed Kubernetes service (EKS).

Although EKS is a managed service, the setup still presented significant challenges. Running it demanded substantial engineering resources, including specialized Kubernetes expertise and dedicated engineering staff. The team also found self-managed GPU autoscaling very difficult. Ultimately, this diverted valuable resources from product innovation and development.

“Kubernetes is not simple. You have to hire dedicated engineering talent to manage the fleet and the backend infrastructure–not just the compute, but also having the compute shared across nodes, load balancing, autoscaling, and all these fun things that require multiple engineers to manage. Even though EKS is a managed service, it was still a very manual, cumbersome and time-consuming process.” – Mark McQuade, CEO

At this stage of the business, Arcee AI considered continuing to staff a team of engineers to manage and grow its AWS GPU deployment. However, the team found AWS GPUs difficult to procure. On-demand pricing for H100 GPUs was prohibitively expensive at $12+/hour, and bringing that price down to a manageable level required a multi-year commitment to “always on” GPUs. This forced Arcee AI to reassess the value it was getting for its investment in infrastructure, staff, and time.
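To see why always-on, on-demand capacity adds up, here is the annual arithmetic under two assumptions: the quoted $12/hour is a per-GPU rate, and the GPUs run every hour of the year. Because the source gives "$12+", these figures are a floor.

```python
HOURLY_RATE_USD = 12.0     # quoted on-demand H100 rate; assumed per GPU-hour, and a floor ("$12+")
HOURS_PER_YEAR = 24 * 365  # an "always on" GPU runs every hour of the year (8,760 hours)


def always_on_cost(gpus: int, hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Annual on-demand cost of keeping `gpus` GPUs running around the clock."""
    return gpus * hourly_rate * HOURS_PER_YEAR


for gpus in (1, 8):
    print(f"{gpus} GPU(s): ${always_on_cost(gpus):,.0f} per year")
# 1 GPU(s): $105,120 per year
# 8 GPU(s): $840,960 per year
```

At that rate, a single always-on H100 costs about $105,120 a year and an eight-GPU node about $840,960, before any staffing costs.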

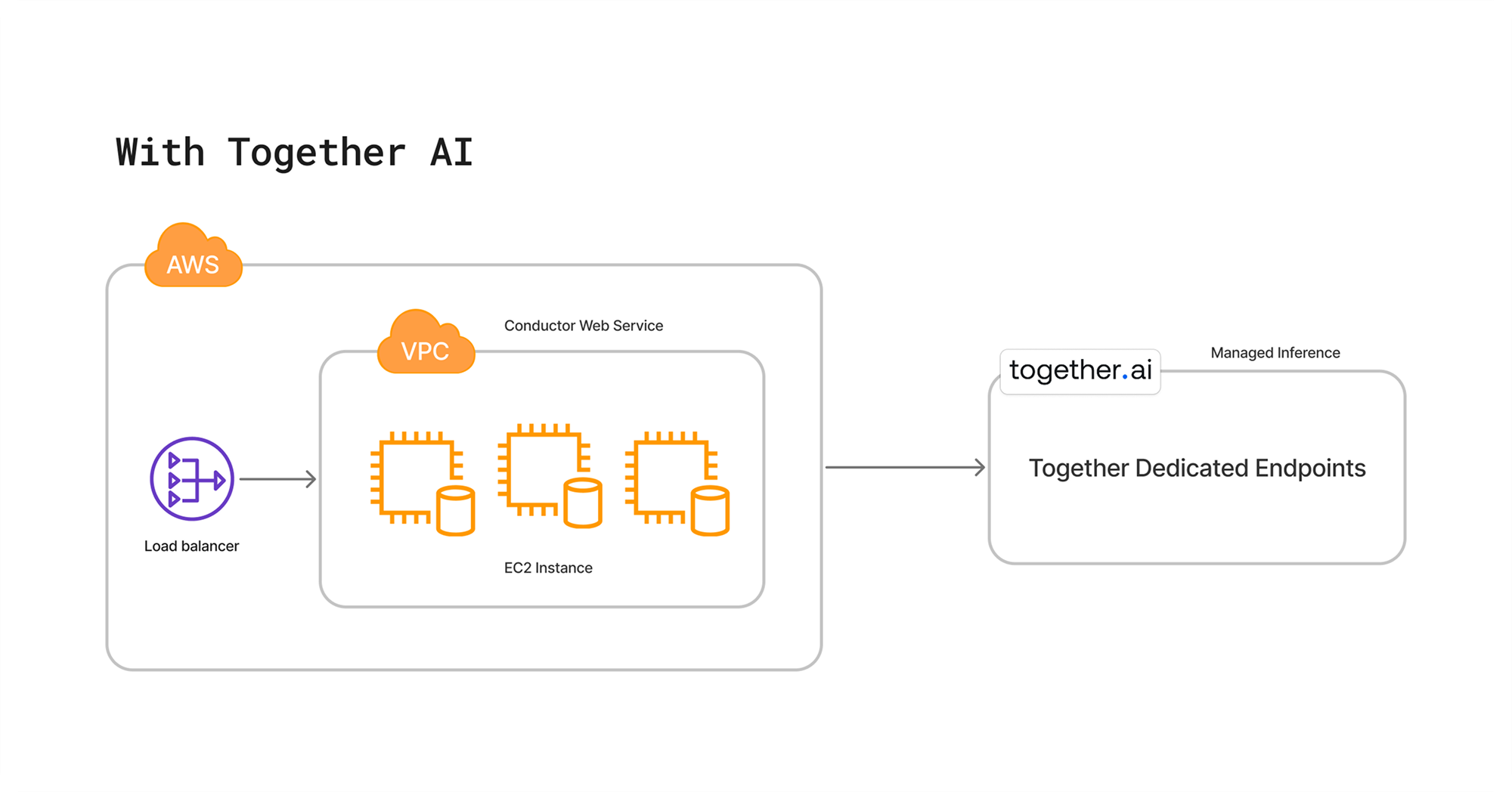

Moving to Together AI: flexibility and expertise

Together Dedicated Endpoints offered Arcee AI a fully managed GPU deployment, eliminating the need for in-house infrastructure work while delivering greater flexibility and more competitive pricing. These advantages led the team to migrate its inference workloads to Together AI.

“The migration was a very simple process. We put our models in a private Hugging Face repository, the Together AI team pulled them down and handed us the API, and we just plugged that into our app. That’s what we wanted–a fully managed experience. Everything has been great: full availability, no downtime.” – Mark McQuade, CEO

As an immediate result, Arcee AI simplified a critical part of its infrastructure overnight: everything that was handled via EKS, including the infrastructure management, was offloaded to Together AI.

The move also brought performance improvements across all of Arcee AI's models:

- Throughput (QPS): Achieved 41+ queries per second at 32 concurrent requests, surpassing Arcee AI's requirements.

- Time to First Token (TTFT): Improved by up to 95%, with TTFT for some models dropping from 485ms on AWS to just 29ms on Together Dedicated Endpoints, exceeding expectations.
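The reported figures can be sanity-checked with a little arithmetic. The sketch below computes the percentage reduction for the 485ms to 29ms example and uses Little's law (average requests in flight = throughput × average time per request) to estimate the mean end-to-end time per request at 32 concurrent requests and 41 QPS. Little's law assumes a steady state with concurrency held constant, which is an assumption about how the benchmark was run.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage by which a latency dropped from `before` to `after`."""
    return (before - after) / before * 100


def implied_request_time_s(concurrency: int, qps: float) -> float:
    """Little's law, L = lambda * W, rearranged to W = L / lambda."""
    return concurrency / qps


print(f"TTFT reduction: {percent_reduction(485, 29):.1f}%")
print(f"TTFT speedup: {485 / 29:.1f}x")
print(f"Implied mean request time: {implied_request_time_s(32, 41):.2f} s")
```

For this example the reduction is about 94.0% (roughly 16.7x lower TTFT), and the implied mean request time is about 0.78 s. Since 41 QPS is a lower bound, 0.78 s is an upper bound on the mean request time under these assumptions.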

“We researched several leading inference providers but ultimately chose to go with Together AI because price-performance was significantly better.” – Mark McQuade, CEO

Future developments

We’re excited to continue our partnership with Arcee AI as it keeps simplifying the entropic world of generative AI through additional Arcee Orchestra integrations, specialized Arcee Conductor modes for tool calling and coding, and deeper model customization through hybrid architectures.

Together AI remains committed to continuously optimizing our GPU infrastructure, enabling Arcee AI to scale effortlessly on Together Dedicated Endpoints with superior performance, flexibility, and cost-efficiency.

“We’re proud to partner with innovators like Arcee AI, who are reshaping efficiency in generative AI. By hosting their small, specialized models on Together AI, we’re helping developers achieve better ROI across different modalities—paired with the industry-leading performance of our serverless endpoints. We’re excited to keep building on this partnership and exploring new opportunities together.” — Vipul Ved Prakash, Founder and CEO of Together AI

As part of our partnership, we will continue to make Arcee AI’s models available to our community through our serverless offering, so that everyone can access these highly optimized models with flexible pricing. Find these models in our model library to start building today!

Use case details

Highlights

- 95% faster TTFT

- 41+ QPS

- GPU fleet offloaded

- Zero downtime

Use case

Dedicated inference for SLMs

Company segment

AI-Native Startup