Performance and security at scale

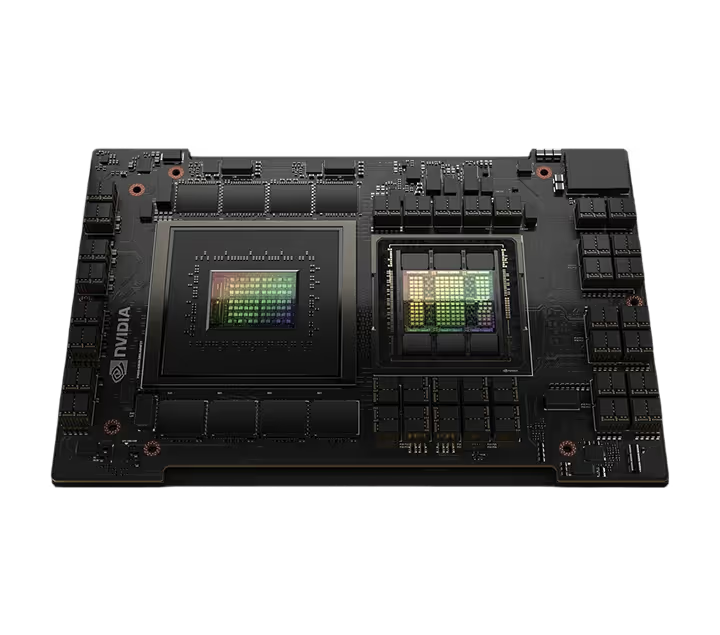

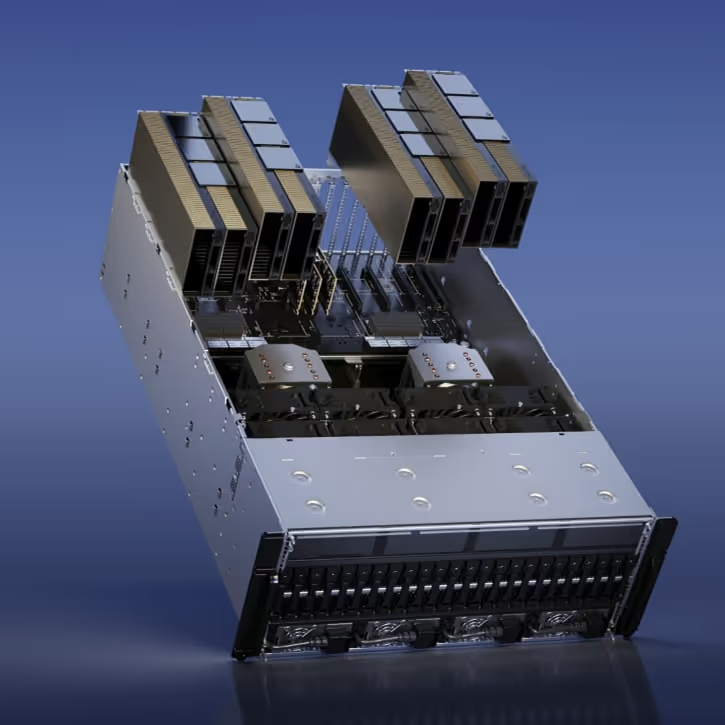

NVIDIA H100 GPUs deliver exceptional performance, scalability, and security for training, inference, and HPC workloads.

Why NVIDIA H100 on Together GPU Clusters?

Extraordinary AI infrastructure. Delivered rapidly. Optimized precisely.

Efficient training with Hopper GPUs

Each H100 cluster leverages fourth-generation Tensor Cores and the Transformer Engine with FP8 precision, enabling fast training for GPT-scale models.

Advanced multi-GPU connectivity

We deploy NVIDIA’s NVLink Switch System for 900 GB/s of bidirectional bandwidth per GPU, providing unparalleled scalability for multi-node AI and HPC workloads.

Secure, multi-instance GPU configurations

Second-generation MIG (Multi-Instance GPU) technology securely partitions each GPU into isolated instances, maximizing resource utilization and quality of service across diverse teams.

Rapid deployment at data-center scale

Together delivers NVIDIA H100 clusters from hundreds to thousands of GPUs, integrated seamlessly with your choice of orchestration platforms like Kubernetes, Slurm, or Ray.

Ready in weeks, no long waits

Deploy large-scale NVIDIA H100 infrastructure without supply delays. We get clusters operational in weeks, so your AI projects can move forward right away.

Run by researchers who train models

Our research team actively runs and tunes training workloads on NVIDIA H100 systems. You’re not just getting hardware; you’re working with experts at the edge of what’s possible.

“What truly elevates Together AI to ClusterMax™ Gold is their exceptional support and technical expertise. Together AI’s team, led by Tri Dao, the inventor of FlashAttention, and their Together Kernel Collection (TKC), significantly boost customer performance. We don’t believe the value created by Together AI can be replicated elsewhere without cloning Tri Dao.”

— Dylan Patel, Chief Analyst, SemiAnalysis

Powering generative AI and HPC workloads

NVIDIA H100 GPUs, powered by the revolutionary Hopper architecture, deliver transformative performance for AI, HPC, and data analytics at scale.

Accelerated Compute

Optimized AI training: Featuring Hopper Tensor Cores and Transformer Engine technology, the H100 accelerates transformer-based model training with exceptional efficiency.

NVLink scalability: NVIDIA’s advanced NVLink Switch System delivers superior multi-GPU connectivity, supporting ultra-high bandwidth and low-latency communication.

Enterprise-grade security: Together with NVIDIA, we ensure data and application integrity, providing hardware-based protection for sensitive workloads.

[Charts: LLM training speed relative to A100; inference performance on Megatron 530B; HPC performance]

Technical Specs

NVIDIA H100

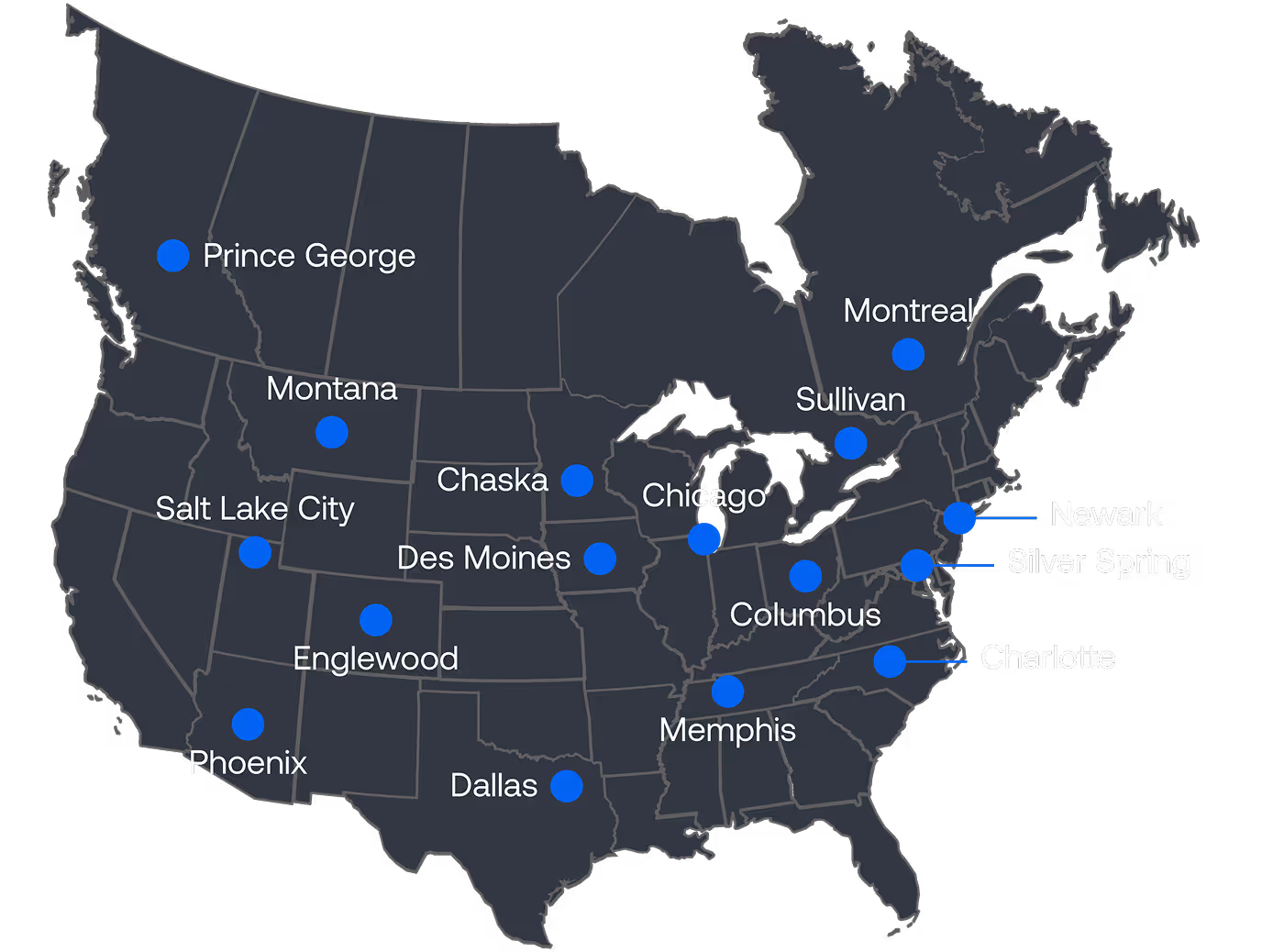

AI Data Centers and Power across North America

Data Center Portfolio

2 GW+ in the portfolio, with 600 MW of near-term capacity.

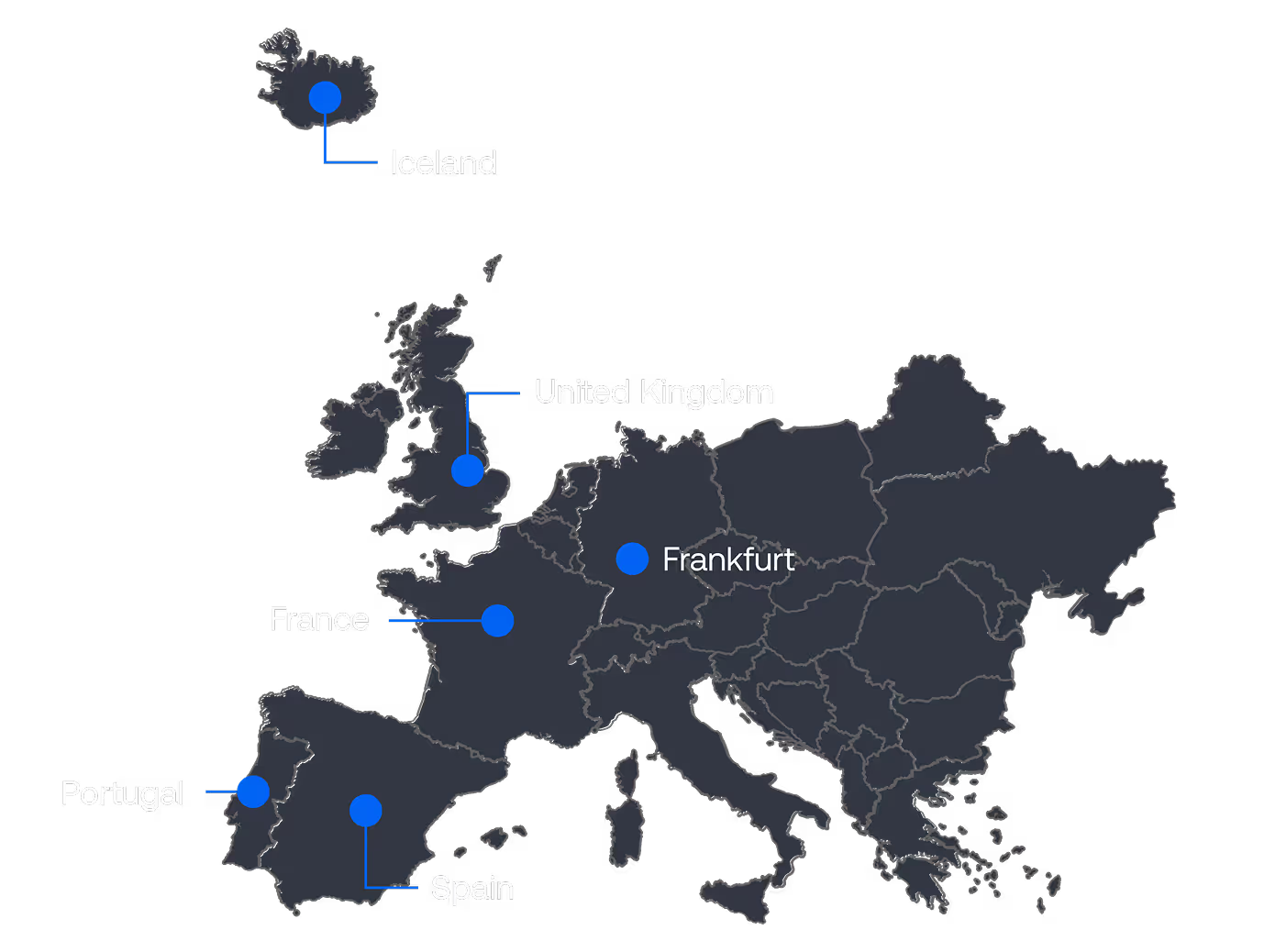

Expansion Capability in Europe and Beyond

Data Center Portfolio

150 MW+ available in Europe, across the UK, Spain, France, Portugal, and Iceland.

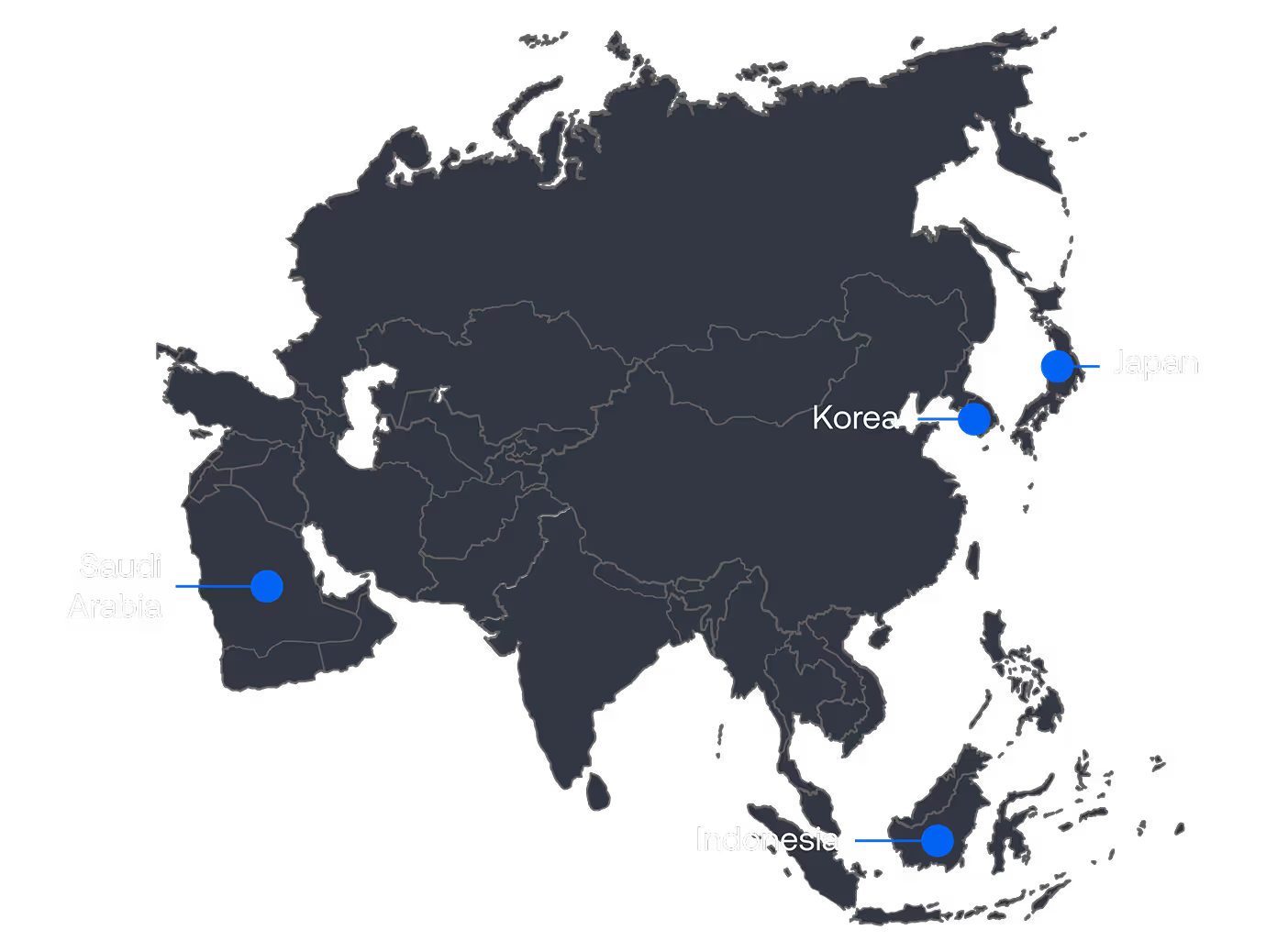

Next Frontiers – Asia and the Middle East

Data Center Portfolio

Capacity options are available in Asia and the Middle East, depending on project scale.

Powering AI Pioneers

Leading AI companies are ramping up with NVIDIA Blackwell running on Together AI.

Zoom partnered with Together AI to leverage our research and deliver accelerated performance when training the models powering various Zoom AI Companion features.

With Together GPU Clusters accelerated by NVIDIA HGX B200, Zoom saw a 1.9x out-of-the-box improvement in training speed over previous-generation NVIDIA Hopper GPUs.

Salesforce uses Together AI across the entire AI journey, from training to fine-tuning to inference, for the models that power Agentforce.

When training a Mistral-24B model, Salesforce saw a 2x improvement in training speed after upgrading from NVIDIA HGX H200 to HGX B200. This is accelerating how Salesforce trains models and integrates research results into Agentforce.

During initial tests with the NVIDIA HGX B200, InVideo immediately saw a 25% improvement on a training job moved over from NVIDIA HGX H200.

Then, in partnership with our researchers, the team made further optimizations and more than doubled this improvement, making the step up to the NVIDIA Blackwell platform even more appealing.

Our latest research & content

Learn more about running turbocharged NVIDIA GB200 NVL72 GPU clusters on Together AI.
