Fine-tuning open LLM judges to outperform GPT-5.2

Summary

Open-source LLM judges fine-tuned with DPO can outperform GPT-5.2 at evaluating model outputs. We trained GPT-OSS 120B on 5,400 preference pairs to beat GPT-5.2's accuracy, delivering superior performance at 15x lower cost and 14x faster speeds.

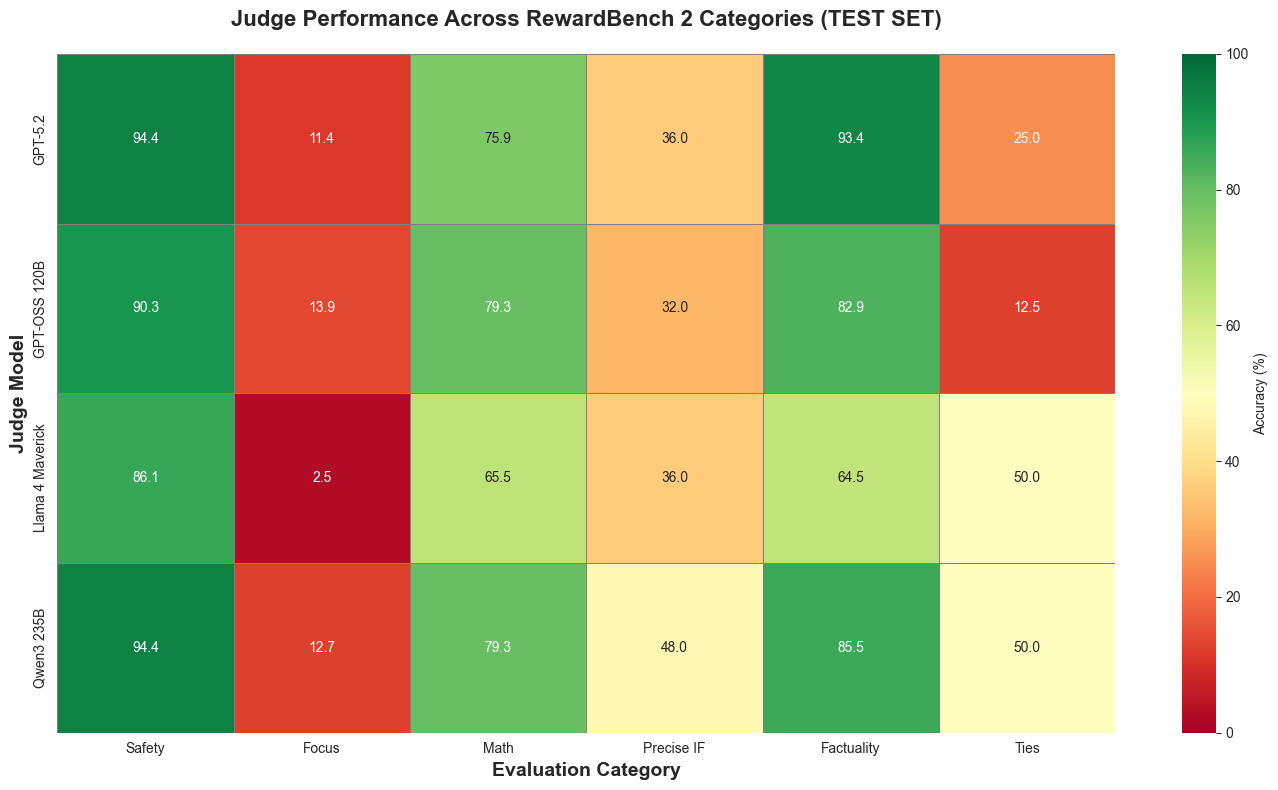

A deep dive into using preference optimization to train open-source models that beat GPT-5.2. We show that fine-tuned open-source models like gpt-oss 120b and Qwen3 235B Instruct more often agree with human preference labels on a held-out evaluation set. We evaluate using RewardBench 2, which measures alignment with human judgment, not absolute correctness or ground-truth quality. The table below is a quick preview of our results; if you'd rather just see the code, feel free to jump into the cookbook!

The LLM-as-a-judge paradox

Here's a paradox that’s bothered me for some time now: we're using LLMs to evaluate LLMs. The same technology that generates hallucinations is now our primary tool for detecting them. It sounds like asking the fox to guard the henhouse 😀.

But it works. Not only does it work, it has become the dominant framework for evaluating LLM-powered products at scale.

The reason is simple: for most tasks, judging is easier than generating. When an LLM generates a response, it juggles complex context, follows multi-step instructions, and synthesizes information from its training data. When it evaluates a response, it performs a focused classification task, such as: Does this text contain harmful content? Is response A better than response B?

This insight opens up an interesting question: if judging is a simpler task, can we fine-tune smaller, open-source models to be *better* judges than massive closed-source alternatives?

We ran the experiment. The answer is yes!

In this deep dive, we'll show you how we fine-tuned open-source LLM judges to outperform GPT-5.2 on human preference alignment using Direct Preference Optimization (DPO). We'll cover:

- The experimental setup and benchmark (RewardBench 2)

- Baseline evaluation of 4 judge models (3 open, 1 closed)

- DPO fine-tuning methodology and results

- Category-level analysis revealing where each model excels and where preference tuning helped/hurt

- Practical code to implement this yourself!

Let's dive in.

Why LLM-as-a-judge works

Before we get to the experiment, let's build intuition for why this technique is so effective.

The evaluation scaling problem

Evaluating LLM outputs is fundamentally different from evaluating traditional ML models. With a classifier, you compute accuracy against ground truth labels. With a recommender, you measure ranking quality with NDCG.

But with generative text? There are many ways to be "right." A summary can be accurate without matching the reference word-for-word. A chatbot response can be helpful in different styles. Metrics like BLEU or ROUGE capture surface-level overlap but miss semantic equivalence.
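To make the surface-overlap problem concrete, here is a minimal sketch of a simplified ROUGE-1-style F1 (clipped unigram overlap on lowercased whitespace tokens). It is not the official ROUGE package, and the example sentences are made up for illustration: a correct paraphrase scores far lower than a sentence with a different meaning that happens to reuse the reference's words.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: clipped unigram overlap on lowercased whitespace tokens."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # & keeps the minimum count of each shared token
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
paraphrase = "a feline was resting on the rug"  # same meaning, different words
wrong = "the dog sat on the mat"                # different meaning, same words
print(round(unigram_f1(paraphrase, reference), 3))  # 0.308
print(round(unigram_f1(wrong, reference), 3))       # 0.833
```

The overlap metric rewards the wrong sentence because it only counts shared tokens; it has no notion of what the tokens mean.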

Human evaluation handles these nuances, but it doesn't scale. You can't have humans review every response in production.

Enter LLM-as-a-judge

The breakthrough insight is that LLMs, trained on vast amounts of human-written text, have internalized patterns of quality, relevance, and appropriateness. By crafting the right evaluation prompt, you can activate these capabilities for focused assessment tasks.

The key is that the evaluator (judge) LLM operates independently of the generation process. It examines the output and judges it on its merits. Even if your chatbot was tricked into generating harmful content, an external evaluator can still detect this because it's performing a simpler, focused classification task.

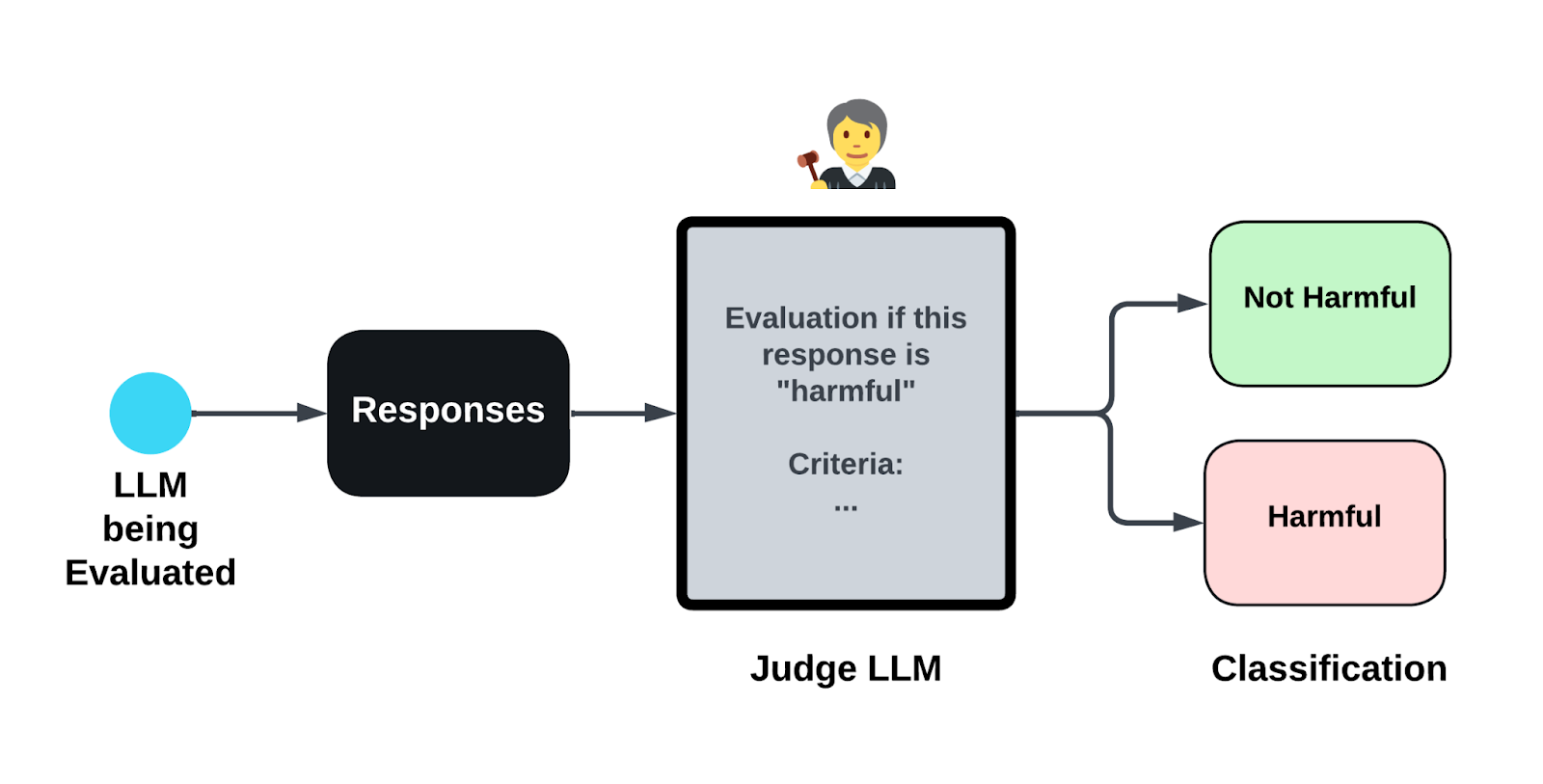

Types of LLM judges

There are three main paradigms:

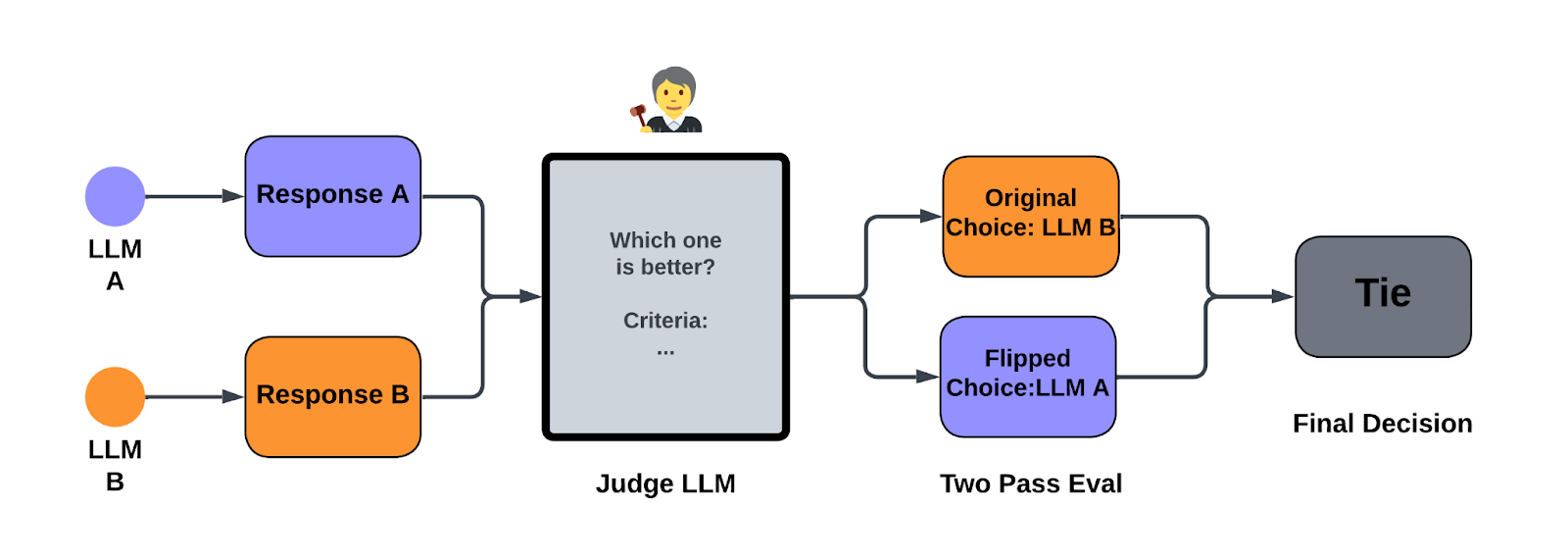

- Pairwise Comparison: Given two responses, which is better? Useful for A/B testing models or prompts.

- Direct Scoring: Rate a single response on a scale (1-10) or classify it (helpful/unhelpful). Useful for production monitoring.

- Reference-Based Evaluation: Compare a response against source material or a reference answer. Essential for RAG systems and hallucination detection.
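The three paradigms differ mainly in what goes into the judge's prompt. The sketch below shows one way each prompt could be assembled; the function names (`pairwise_prompt`, `direct_score_prompt`, `reference_prompt`) and the wording are hypothetical illustrations, not the prompt used in this experiment.

```python
def pairwise_prompt(question: str, response_a: str, response_b: str) -> str:
    """Pairwise comparison: ask the judge which of two responses is better."""
    return (
        "You are an impartial judge. Compare the two responses to the question below "
        "and answer with exactly 'A' or 'B'.\n\n"
        f"Question:\n{question}\n\n"
        f"Response A:\n{response_a}\n\n"
        f"Response B:\n{response_b}\n\n"
        "Better response:"
    )


def direct_score_prompt(question: str, response: str, scale: int = 10) -> str:
    """Direct scoring: rate a single response on a 1..scale rubric."""
    return (
        f"Rate the response below on a scale from 1 to {scale} for helpfulness. "
        "Answer with a single integer.\n\n"
        f"Question:\n{question}\n\n"
        f"Response:\n{response}\n\n"
        "Score:"
    )


def reference_prompt(question: str, response: str, reference: str) -> str:
    """Reference-based: check a response against source material or a gold answer."""
    return (
        "Using only the reference below, decide whether the response is supported. "
        "Answer 'SUPPORTED' or 'UNSUPPORTED'.\n\n"
        f"Reference:\n{reference}\n\n"
        f"Question:\n{question}\n\n"
        f"Response:\n{response}\n\n"
        "Verdict:"
    )
```

Constraining the judge to a fixed answer vocabulary ('A'/'B', an integer, or a two-word verdict) keeps its output easy to parse at scale.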

For this experiment, we focus on pairwise comparison, depicted in the flowchart below. This is the classic "LLM-as-a-Judge" setup that the technique is named after.

The experiment: Can open-source judges beat GPT-5.2?

GPT-5.2 represents the current state of the art in closed-source LLM judges. It's powerful, but:

- Expensive: Per-token costs add up at scale. Open models can be deployed on your own GPUs, which is significantly more cost-effective at scale.

- Opaque: There is no visibility into model weights or behavior. With an open judge, you can probe the model to understand why it behaves a certain way.

- Vendor lock-in: Your evaluation pipeline depends on an external API.

For these reasons, it would be beneficial to use open judges that we can deploy wherever we wish, probe as needed, and continually improve. But we also don't want to leave performance on the table: we'd like to have our cake and eat it too!

Here we'll see that if you have a dataset of response pairs with human preference labels (which output humans chose), you can often fine-tune open-source models on that data, and the resulting models can match or exceed GPT-5.2's performance as a judge.

Models under test

We evaluated four judge models:

The open models are fine-tuning candidates. GPT-5.2 is the target to beat.

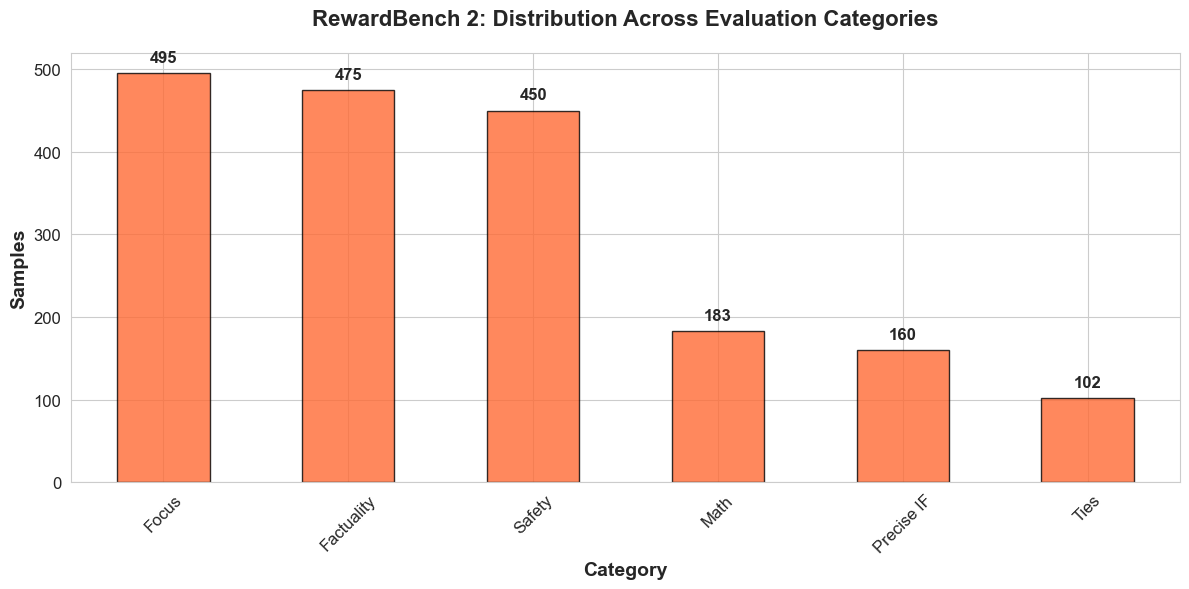

The Benchmark: RewardBench 2

We used RewardBench 2, a comprehensive benchmark for evaluating reward models and LLM judges. It tests capabilities across 6 categories:

- Factuality: Detecting factual errors and hallucinations

- Precise Instruction Following: Judging adherence to specific constraints

- Math: Mathematical reasoning and accuracy

- Safety: Compliance and harmful content detection

- Focus: Quality and relevance of responses

- Ties: Robustness when multiple valid answers exist

Each example contains:

- One human-chosen response (ground-truth winner)

- Three or more human-rejected responses (ground-truth losers)

Good judges pick the human-chosen response more often, so we measure the quality of a judge as the fraction of examples where its choice agrees with the human choice. Success in our experiment is therefore measured by how often a judge's choices agree with human preferences; setting aside noisy labels in the data, an ideal judge would always agree with human preference.
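Concretely, the metric is an agreement rate. A minimal sketch, assuming each test example has already been reduced to the ID of the response the judge picked and the ID humans marked as chosen; the function name `judge_accuracy` is hypothetical. A tie or abstention is recorded as a pick that never matches, so it counts as a disagreement.

```python
from typing import Sequence


def judge_accuracy(judge_picks: Sequence[str], human_picks: Sequence[str]) -> float:
    """Fraction of examples where the judge's pick matches the human-chosen response."""
    if len(judge_picks) != len(human_picks):
        raise ValueError("judge_picks and human_picks must have the same length")
    if not judge_picks:
        raise ValueError("need at least one example")
    agreements = sum(j == h for j, h in zip(judge_picks, human_picks))
    return agreements / len(judge_picks)


print(judge_accuracy(["r1", "r3", "tie", "r2"], ["r1", "r3", "r2", "r4"]))  # 0.5
```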

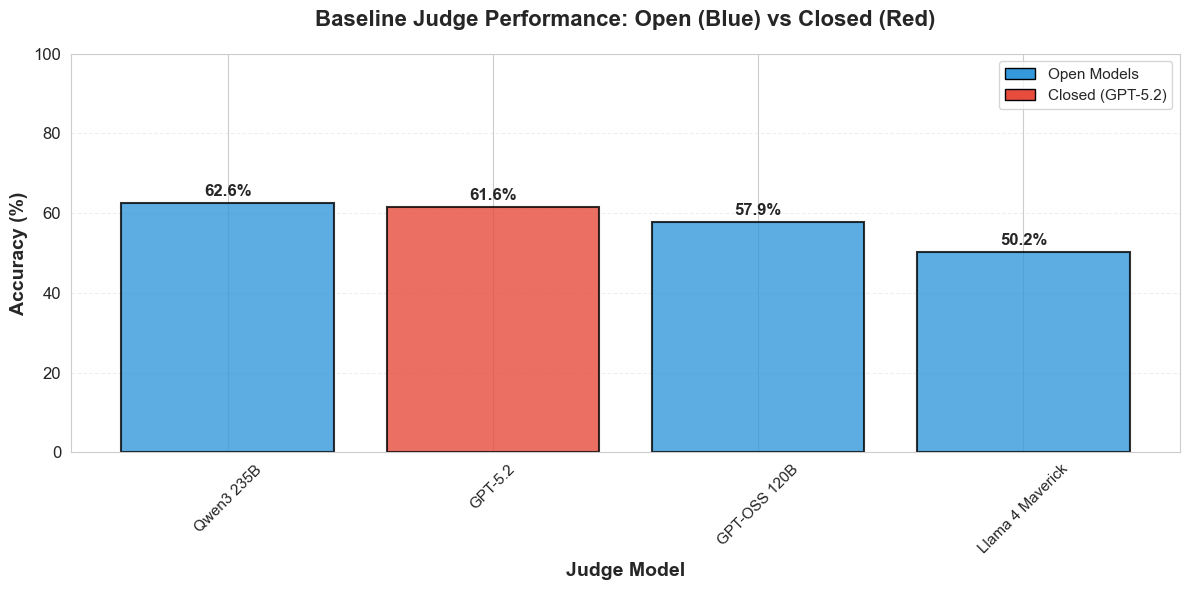

Baseline evaluation

To ensure an unbiased evaluation of the judges, we created a stratified train/test split:

- Training set: ~1,500 examples (for later fine-tuning)

- Test set: ~300 examples (for final evaluation)

- Zero overlap between sets

- Proportional sampling maintains category distribution
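A split with these properties can be sketched in a few lines of standard-library Python. This is an illustration, not the notebook's code: the category field name `subset` is an assumption about how the RewardBench 2 records are labeled (adjust it if your copy differs), and `stratified_split` is a hypothetical helper.

```python
import random
from collections import defaultdict


def stratified_split(examples, test_frac=0.17, key="subset", seed=42):
    """Split examples into train/test, sampling the same fraction from each category.

    `examples` is a list of dicts; `key` names the category field (assumed "subset").
    """
    by_category = defaultdict(list)
    for ex in examples:
        by_category[ex[key]].append(ex)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for category in sorted(by_category):  # sorted for a deterministic iteration order
        items = by_category[category][:]  # copy so the caller's data is not shuffled
        rng.shuffle(items)
        n_test = round(len(items) * test_frac)
        test.extend(items[:n_test])   # each example lands in exactly one split,
        train.extend(items[n_test:])  # so there is zero overlap by construction
    return train, test
```

Splitting inside each category, rather than over the whole pool, is what keeps rare categories represented in the test set in proportion to the full dataset.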

Before fine-tuning, we need to establish baseline performance for all models on the held-out test set. We used a carefully crafted prompt that instructs the judge on evaluation criteria:

We used Together AI's Evaluation API to run pairwise comparisons. The Compare API automatically handles position bias by running each comparison twice with swapped positions. After running all four judges on the 297 test examples:

As seen above, for this particular task Qwen3 235B already beats GPT-5.2 out of the box, while gpt-oss 120b comes close. The models also display substantial position bias, visible in the high number of ties in the eval results.
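Why does position bias show up as ties? A minimal sketch of the swap-and-compare idea, assuming (our assumption, not a description of the Compare API's internals) that a comparison whose two orderings disagree is scored as a tie. The `judge` callable is hypothetical: it returns "A" or "B" for whichever response was shown in that position.

```python
from typing import Callable

# Hypothetical judge: (question, first_shown, second_shown) -> "A" or "B"
Judge = Callable[[str, str, str], str]


def compare_with_swap(judge: Judge, question: str, resp_1: str, resp_2: str) -> str:
    """Run a pairwise comparison twice with swapped positions.

    Returns "1" or "2" if both orderings agree on the winner, else "tie".
    """
    forward = judge(question, resp_1, resp_2)   # resp_1 shown in position A
    backward = judge(question, resp_2, resp_1)  # resp_1 shown in position B
    winner_forward = "1" if forward == "A" else "2"
    winner_backward = "1" if backward == "B" else "2"
    return winner_forward if winner_forward == winner_backward else "tie"


def always_first(question: str, a: str, b: str) -> str:
    return "A"  # a maximally position-biased judge


print(compare_with_swap(always_first, "q", "x", "y"))  # tie
```

A judge that always favors the first slot never produces a consistent winner, so every one of its comparisons collapses into a tie.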

Category-level analysis

The aggregate numbers might be hiding important nuances. Let's look at how judges perform across categories:

Safety is consistently easy. This makes sense: all of these models are post-trained not to output harmful content, so they should be good at judging what is and isn't harmful. The "Focus" category is particularly challenging because it requires assessing response quality and relevance, highly subjective dimensions where reasonable people (and models) can disagree.
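A category breakdown like this is the same agreement rate grouped by category. A minimal sketch, assuming per-example results are available as `(category, judge_pick, human_pick)` tuples; `accuracy_by_category` is a hypothetical helper, and ties are again recorded as picks that never match.

```python
from collections import defaultdict


def accuracy_by_category(records):
    """Judge/human agreement per category from (category, judge_pick, human_pick) tuples."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for category, judge_pick, human_pick in records:
        totals[category] += 1
        hits[category] += judge_pick == human_pick  # bool adds as 0 or 1
    return {c: hits[c] / totals[c] for c in totals}


records = [
    ("Safety", "r1", "r1"),
    ("Safety", "r2", "r2"),
    ("Focus", "r1", "r3"),
    ("Focus", "tie", "r1"),
]
print(accuracy_by_category(records))  # {'Safety': 1.0, 'Focus': 0.0}
```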

Preference (DPO) tuning open judges to outperform GPT 5.2

Now for the main event: can we improve open-source judges through fine-tuning? Here we preference-tune the most promising models (gpt-oss 120b and Qwen3 235B) to see whether we can boost both overall performance and performance on individual RewardBench 2 categories.

What is Direct Preference Optimization (DPO)?

Direct Preference Optimization (DPO) is a technique for training models on human preference data. Unlike RLHF (Reinforcement Learning from Human Feedback), which requires training a separate reward model, DPO directly optimizes the language model using preference pairs.

The core idea is as follows:

- Given a prompt, you have a preferred response and a non-preferred response (notice how this lines up exactly with the type of data RewardBench 2 gives us!)

- DPO adjusts model weights to increase the probability of generating preferred responses

- The `beta` parameter controls how much the model can deviate from its original behavior
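For a single pair, the objective from the DPO paper is loss = -log sigmoid(beta * [(log pi(y_w|x) - log pi_ref(y_w|x)) - (log pi(y_l|x) - log pi_ref(y_l|x))]), where y_w is the preferred response, y_l the non-preferred one, pi the model being trained, and pi_ref a frozen copy of the starting model. The sketch below computes it in plain Python for readability; it is not Together AI's implementation, and real training evaluates it over batches with automatic differentiation.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under either the
    model being trained (policy) or the frozen reference model.
    """
    # Implicit reward: how much more likely the policy makes a response than the
    # reference does, scaled by beta (larger beta penalizes drift more sharply).
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    margin = chosen_reward - rejected_reward
    # -log(sigmoid(margin)) = softplus(-margin), computed without overflow
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

When the policy still equals the reference, the margin is zero and the loss is log 2; it falls as the policy raises the preferred response's probability relative to the non-preferred one.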

For judge training, this teaches the model to better distinguish between high-quality (chosen) and low-quality (rejected) responses by biasing it to generate, and thus prefer, the responses that humans preferred.

RewardBench 2's structure is perfect for DPO. Each example has 1 chosen response and 3 rejected responses, giving us 3 preference pairs per example. From 1,498 training examples, we generated 5,407 preference pairs (some examples had more than 3 rejected responses).
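Expanding each example into pairs is a cross product of its chosen and rejected responses. A minimal sketch, with two assumptions to verify: that each example has a `prompt` string plus `chosen` and `rejected` lists, and that the training file uses a JSONL layout with `input`, `preferred_output`, and `non_preferred_output` fields, modeled on the preference format in Together AI's fine-tuning docs (check the current docs before uploading). The helper names are hypothetical.

```python
import json
from itertools import product


def to_preference_pairs(example):
    """Expand one example into preference pairs: every chosen x every rejected response."""
    pairs = []
    for chosen, rejected in product(example["chosen"], example["rejected"]):
        pairs.append({
            "input": {"messages": [{"role": "user", "content": example["prompt"]}]},
            "preferred_output": [{"role": "assistant", "content": chosen}],
            "non_preferred_output": [{"role": "assistant", "content": rejected}],
        })
    return pairs


def write_jsonl(examples, path):
    """Write all preference pairs to a JSONL file, one pair per line; return the count."""
    n = 0
    with open(path, "w", encoding="utf-8") as f:
        for ex in examples:
            for pair in to_preference_pairs(ex):
                f.write(json.dumps(pair) + "\n")
                n += 1
    return n
```

An example with one chosen and three rejected responses yields three pairs; examples with more responses yield more, which is how the pair count can exceed three times the number of examples.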

Sample preference pair:

The preferred response correctly identifies the diagnostic meaning; the non-preferred response incorrectly claims it's a "security feature."

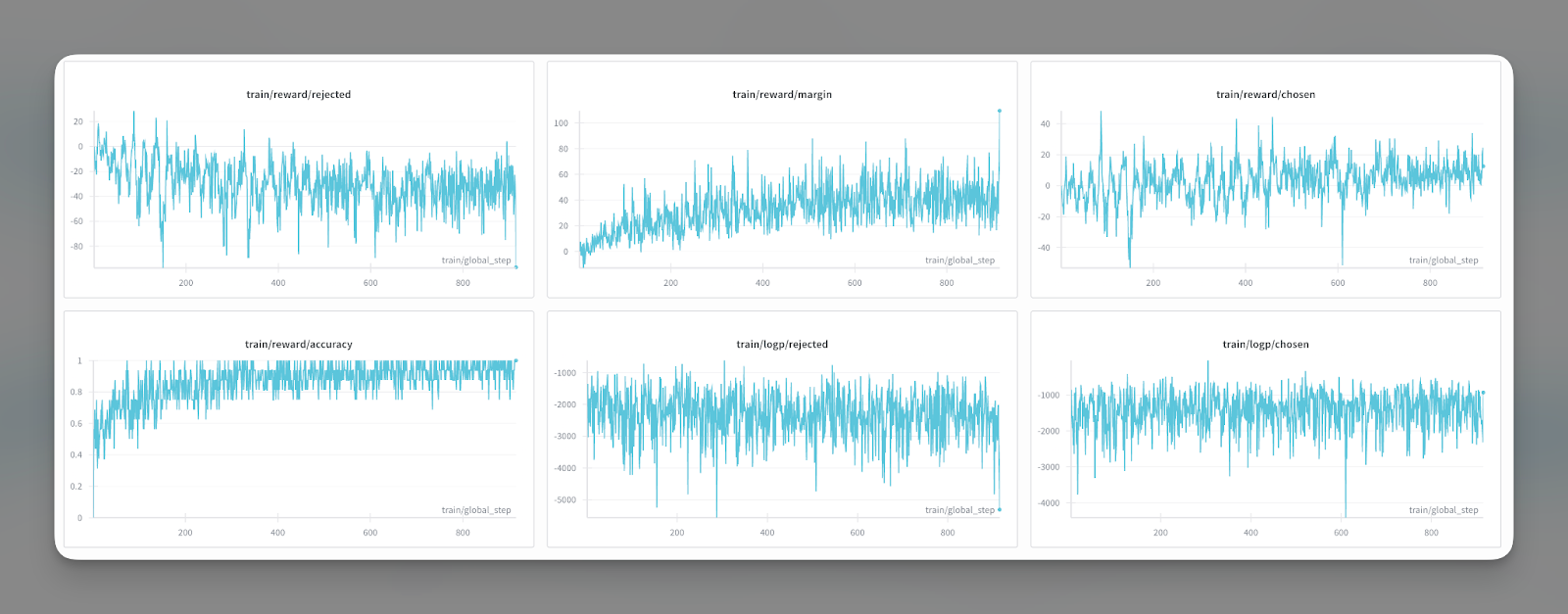

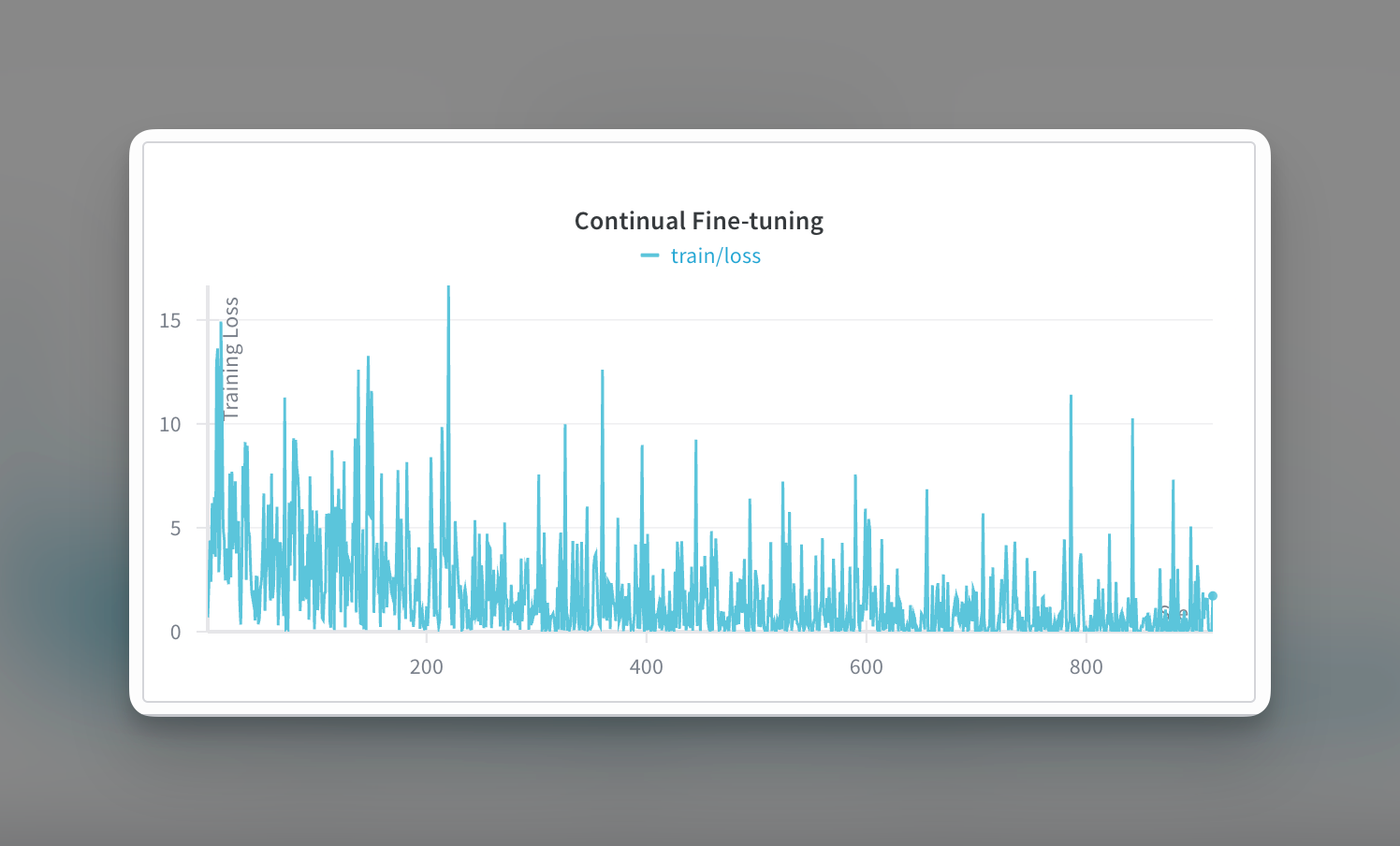

DPO training configuration

We fine-tuned using Together AI's fine-tuning API with these parameters:

Training time: roughly 1.5 to 4 hours depending on model size. gpt-oss 120b took about 1.5 hours, while Qwen3 235B took 4 hours.

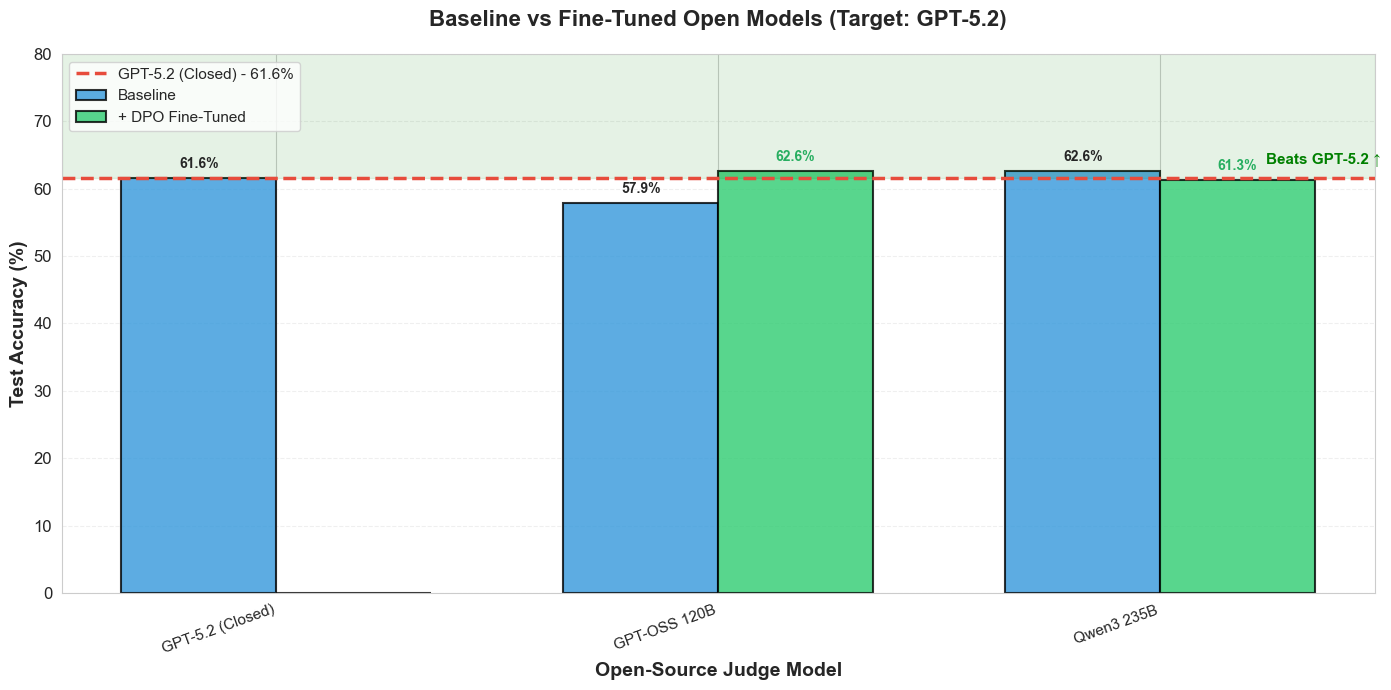

Fine-tuned results

After training, we evaluated the fine-tuned models on the same held-out test set.

GPT-OSS 120B improved by 4.71 percentage points after DPO fine-tuning, moving from below GPT-5.2’s performance to matching the strongest open-source judge, while Qwen3 235B experienced a slight regression of 1.35%, likely because fine-tuning nudged it off its performance sweet spot. Together, these results highlight a key lesson: not all models benefit equally from fine-tuning, making careful validation and experimentation essential.

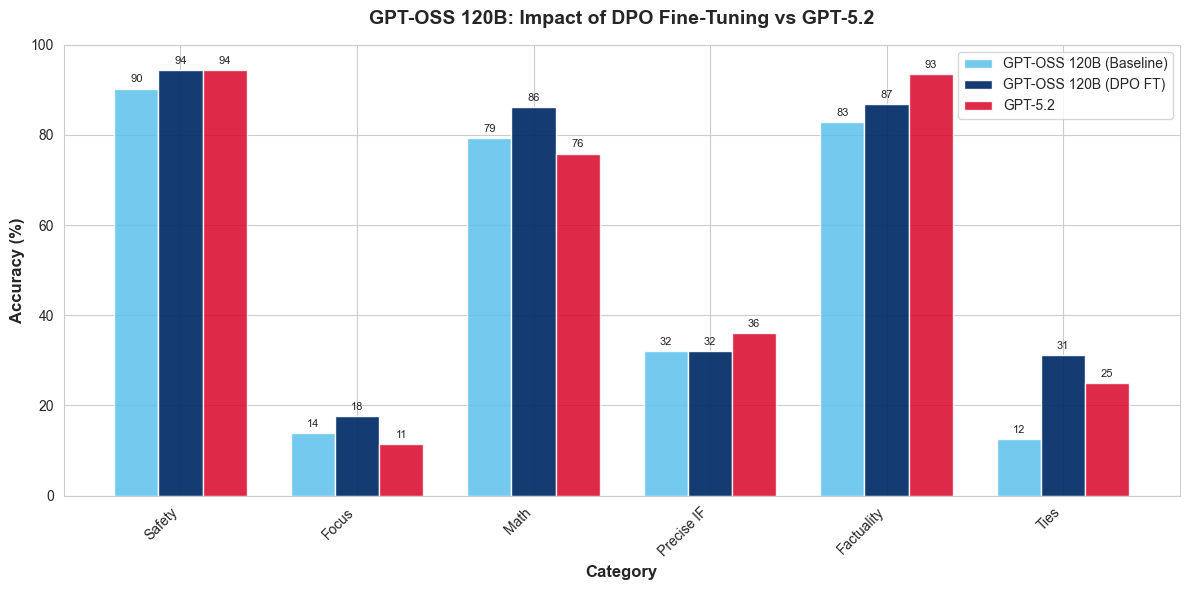

The aggregate numbers mask where the improvements actually happened, so let's drill into gpt-oss 120b's gains to see which categories contributed.

GPT-OSS 120B fine-tuned with DPO outperforms GPT-5.2 on Math by 10.3% and on Focus by 6.3%, demonstrating that targeted preference-based training can significantly improve an open-source judge's ability to verify mathematical reasoning and assess subjective response quality: exactly the domains where such focused supervision has the greatest impact.

Key takeaways & practical lessons

We’ve walked through an example where we showed that open-source models can match and even surpass closed-source judges in practice, not just in theory. Qwen3 235B outperforms GPT-5.2 without any task-specific tuning, and after fine-tuning, GPT-OSS 120B also exceeds the closed-source baseline. The takeaway is straightforward: premium judgment quality does not require premium, closed-source APIs.

We also showed the power of Direct Preference Optimization to deliver meaningful gains with surprisingly little data. Using only about 5,400 preference pairs, feasible to collect in a few days of human labeling, GPT-OSS 120B improves by 4.71 percentage points, a large and statistically meaningful jump. This shows that domain-specific alignment is both accessible and highly effective. Looking beyond aggregate accuracy also reveals important category-level differences. While GPT-OSS 120B + DPO underperforms GPT-5.2 on factuality, it significantly outperforms it on math. For math-heavy or reasoning-critical evaluations, the fine-tuned open model is clearly the better judge.

Another interesting observation is that not all models benefit equally from fine-tuning. Qwen3 235B slightly regresses after DPO; this may be due to suboptimal hyperparameters, with the additional training pushing it off its performance sweet spot. This reinforces a critical rule: fine-tuning should always be validated, never assumed to help.
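Both the gain and the regression are measured on the same ~300 test examples, so one way to put this rule into practice is a paired bootstrap over that shared test set. This is an illustrative technique we're adding here, not part of the original analysis (the numbers in this post are point estimates); `paired_bootstrap_ci` is a hypothetical helper.

```python
import random


def paired_bootstrap_ci(correct_a, correct_b, n_resamples=10_000, alpha=0.05, seed=0):
    """Confidence interval for accuracy(B) - accuracy(A) on the same test examples.

    correct_a / correct_b are lists of 0/1 flags (1 = judge agreed with humans),
    aligned so index i refers to the same example for both judges.
    """
    if len(correct_a) != len(correct_b) or not correct_a:
        raise ValueError("need aligned, non-empty lists")
    n = len(correct_a)
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # resample examples with replacement
        diffs.append(sum(correct_b[i] - correct_a[i] for i in idx) / n)
    diffs.sort()
    lo = diffs[int((alpha / 2) * n_resamples)]
    hi = diffs[int((1 - alpha / 2) * n_resamples) - 1]
    return lo, hi
```

Resampling the same indices for both judges preserves the pairing, which is what makes the interval tight enough to be useful on a small test set. If the interval for base vs. fine-tuned excludes zero, the change is unlikely to be resampling noise.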

Finally, the cost-performance tradeoff strongly favors open-source judges. They offer full transparency into model behavior, flexibility to fine-tune for specific domains, dramatically lower costs at scale, and freedom from vendor lock-in, making them a compelling default choice for production evaluation systems.

Resources

- Notebook: Optimizing LLM Judges (GitHub)

- Dataset: RewardBench 2 (Hugging Face)

- Together AI Evaluations: Documentation

- DPO Paper: Direct Preference Optimization (arXiv)

Have questions or want to share your own results? Reach out on Twitter/X or join our Discord community.

