OpenAI's New Open gpt-oss Models vs o4-mini: A Real-World Comparison

OpenAI just made history by open-sourcing their first language models in over six years. The gpt-oss series includes two reasoning models: a 20B parameter model comparable to o3-mini, and a 120B model that supposedly rivals o4-mini. As champions of open source AI, we were excited to see how these models perform in practice.

At Together AI, we believe the future of AI is open source. That's why we immediately added the gpt-oss models to our platform and decided to put the 120B model head-to-head against o4-mini across five practical tests. Instead of relying solely on benchmarks, we wanted to show our developer community how these models compare in real-world scenarios.

Why gpt-oss Models Align with Our Mission

These models represent exactly what we've been advocating for:

- Complete model ownership - Download, fine-tune, and deploy however you want

- No vendor lock-in - Apache 2.0 license means true freedom

- Exceptional value - 100x cheaper than Claude Opus 4.1 with competitive performance

- State-of-the-art capabilities - Strong reasoning, agentic abilities, and structured outputs

- Open innovation - The AI community can build, improve, and customize freely

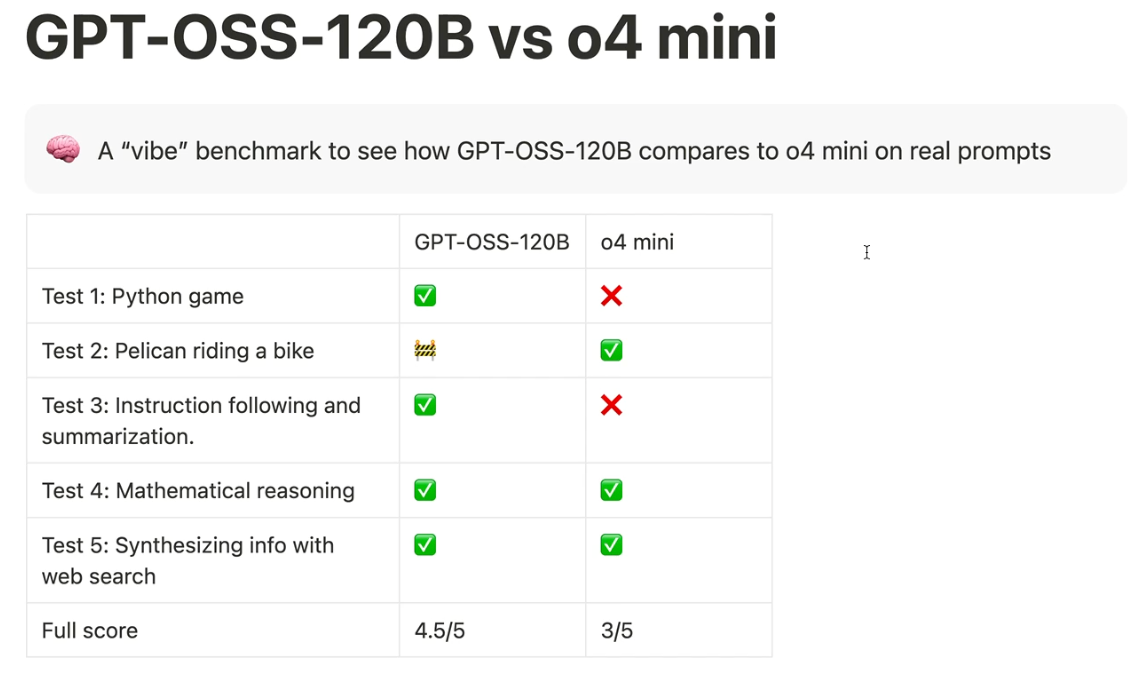

Our Testing Methodology

We used our own chat.together.ai interface running gpt-oss-120B against ChatGPT with o4-mini. While not comprehensive scientific benchmarks, these practical tests give developers a feel for real-world performance - the kind of tasks our customers tackle daily.

Test 1: Terminal Snake Game Development

The Challenge: Build a functional snake game that runs in the terminal.

Results:

- o4-mini: Generated code that compiled but failed functionally - snake only moved horizontally despite arrow key inputs

- gpt-oss-120B on Together AI: Created a fully working snake game with proper controls, collision detection, and game-over mechanics
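For readers who want to see what this test exercises, here is a minimal sketch of the core update step of a snake game: mapping a key to a direction, detecting collisions, and signalling game over. It is not either model's output. The key names, the `step` function, and the grid representation are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Point = Tuple[int, int]  # (row, col) on the terminal grid

# Hypothetical key names mapped to (row, col) deltas. All four directions
# need an entry, including the vertical ones.
DIRECTIONS = {
    "UP": (-1, 0),
    "DOWN": (1, 0),
    "LEFT": (0, -1),
    "RIGHT": (0, 1),
}


def step(snake: List[Point], key: str, food: Point,
         rows: int, cols: int) -> Optional[List[Point]]:
    """Advance the snake by one cell; return None when the game is over."""
    d_row, d_col = DIRECTIONS[key]
    head_row, head_col = snake[0]
    new_head = (head_row + d_row, head_col + d_col)

    # Game over: the head left the playing field.
    if not (0 <= new_head[0] < rows and 0 <= new_head[1] < cols):
        return None

    # The tail cell is freed this turn unless the snake eats and grows.
    grows = new_head == food
    body = snake if grows else snake[:-1]

    # Game over: the head ran into the snake's own body.
    if new_head in body:
        return None

    return [new_head] + body
```

A real terminal game would wrap this in an input and rendering loop (for example with Python's `curses` module); the sketch covers only the state update, where a horizontal-only movement bug would typically live.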

This test highlights something we see regularly: open source models often excel at practical code generation tasks.

Winner: gpt-oss-120B ✓

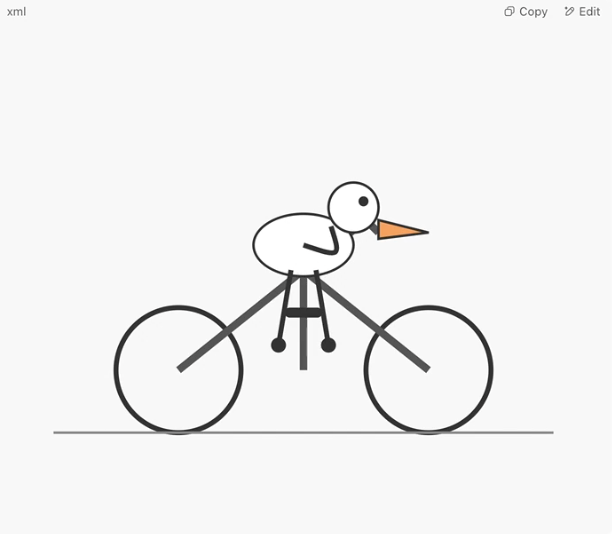

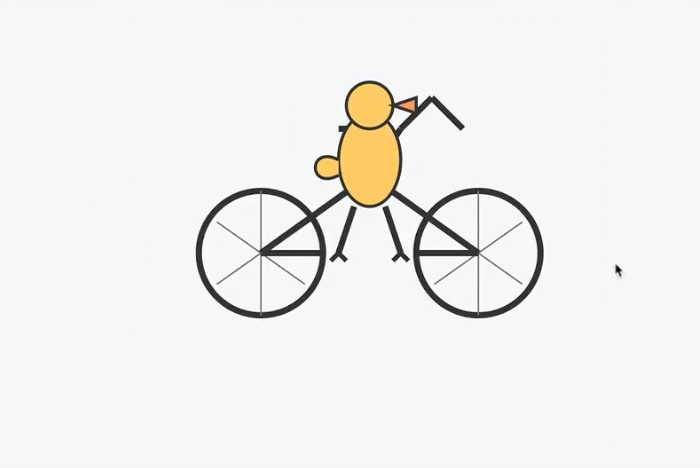

Test 2: Creative SVG Generation

The Challenge: Generate an SVG of a pelican riding a bicycle.

Results:

- o4-mini: Produced a clean, well-structured SVG with accurate spatial relationships

- gpt-oss-120B: Created a functional SVG, but with physics issues - the pelican appeared to float above the bicycle

Creative tasks can be challenging, and in this one o4-mini handled spatial composition better than gpt-oss-120B.

Winner: o4-mini ✓ (gpt-oss gets 0.5 points for partial success)

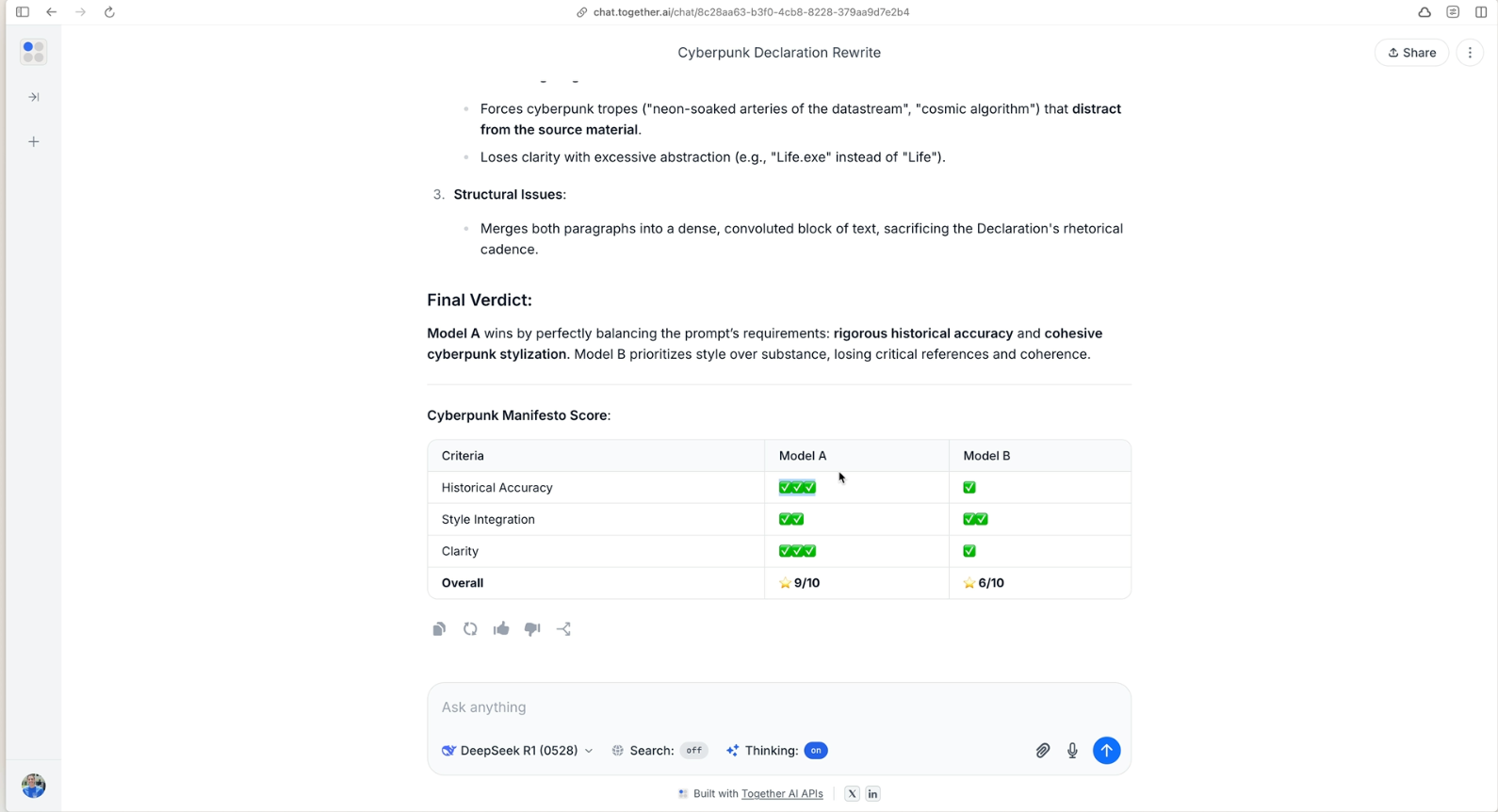

Test 3: Advanced Instruction Following

The Challenge: Rewrite the US Declaration of Independence's first two paragraphs in cyberpunk style while preserving historical references.

We wanted an objective evaluation, so we used powerful reasoning models (including DeepSeek R1, also available on Together AI) to judge both outputs.

Results: The judge models unanimously selected gpt-oss-120B for:

- Superior balance of complex requirements

- Better historical accuracy

- Clearer, more engaging presentation

- More effective style integration

This demonstrates the sophisticated instruction-following capabilities that make open source models viable for complex enterprise use cases.

Winner: gpt-oss-120B ✓

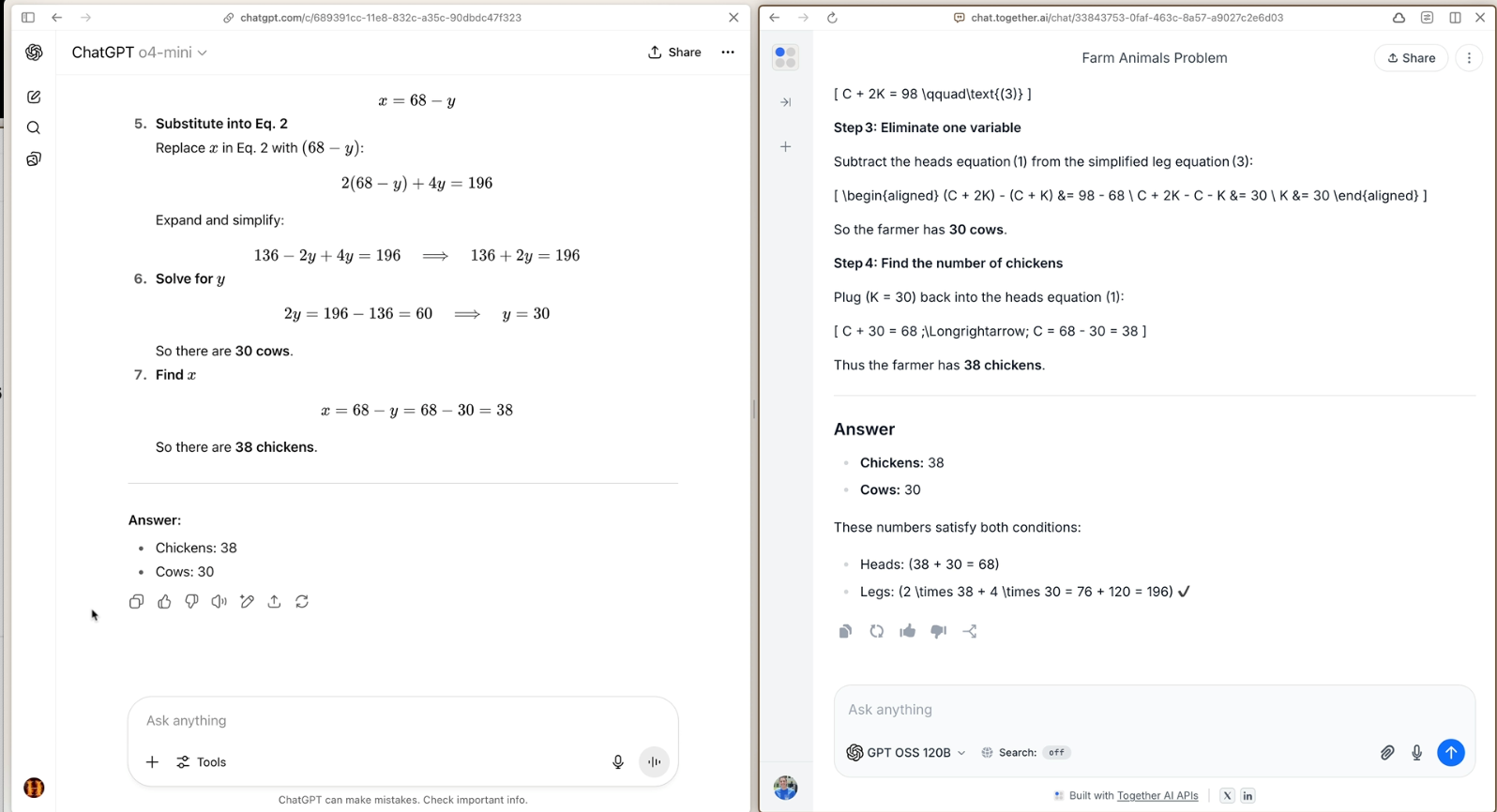

Test 4: Mathematical Reasoning

The Challenge: Classic algebra word problem with chickens and cows (196 legs, 68 heads total).

Results: Both models correctly solved the problem, arriving at 38 chickens and 30 cows with clear mathematical reasoning.
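The reported answer is easy to check by hand or in code. The sketch below assumes the usual reading of the puzzle (each chicken has 2 legs, each cow has 4); the function name `solve_heads_legs` is ours, not part of either model's output.

```python
def solve_heads_legs(heads: int, legs: int) -> tuple:
    """Solve chickens + cows = heads and 2*chickens + 4*cows = legs."""
    # If every animal were a chicken there would be 2 * heads legs.
    # Each cow contributes 2 legs beyond that baseline.
    extra_legs = legs - 2 * heads
    if extra_legs < 0 or extra_legs % 2 != 0:
        raise ValueError("no whole-number solution")
    cows = extra_legs // 2
    chickens = heads - cows
    if chickens < 0:
        raise ValueError("no whole-number solution")
    return chickens, cows


print(solve_heads_legs(68, 196))  # (38, 30)
```

With 68 heads and 196 legs, the baseline is 136 legs, leaving 60 extra legs, so 30 cows and 38 chickens.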

Winner: Tie ✓✓

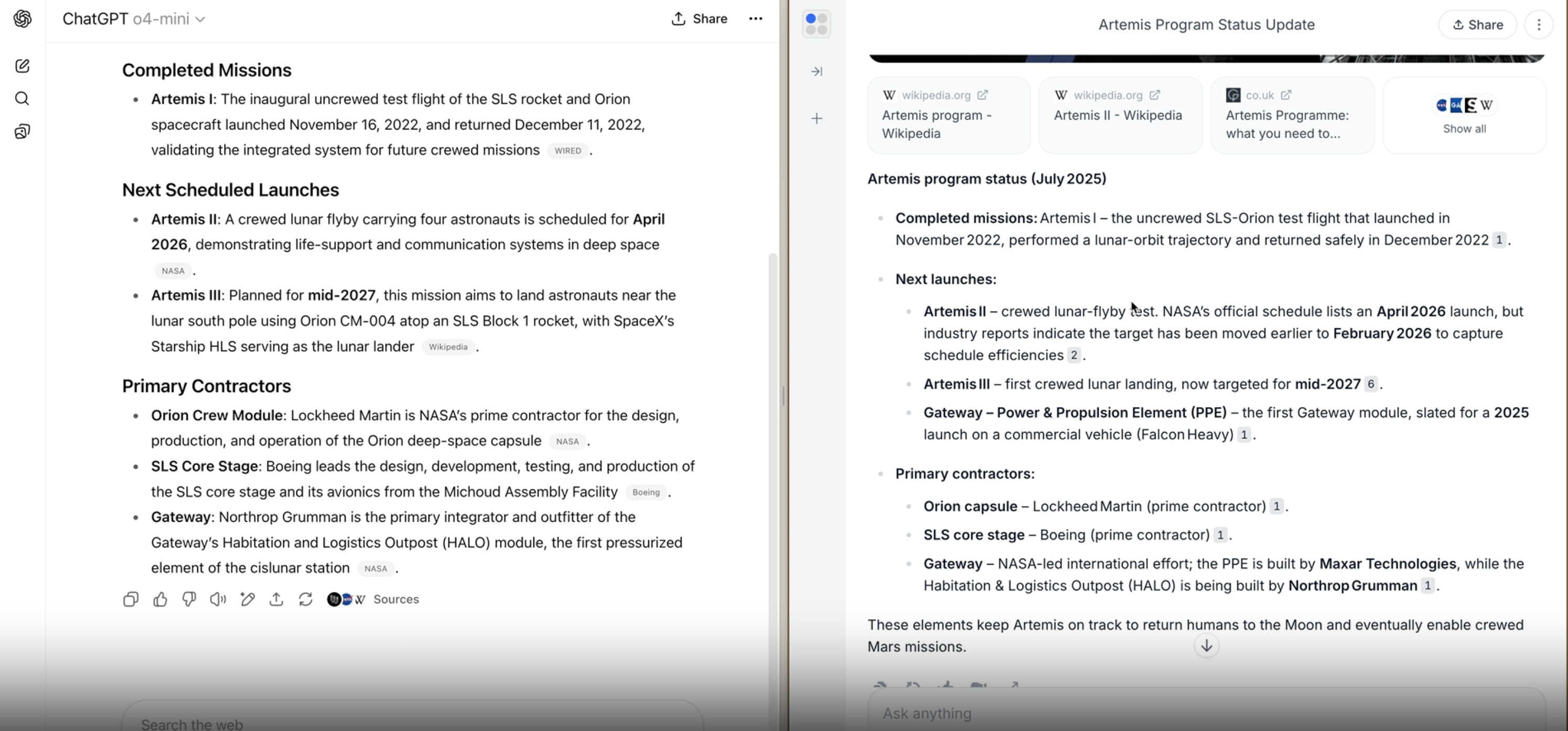

Test 5: Web-Enhanced Information Synthesis

The Challenge: Research the current status of an NSA program and summarize it in under 200 words.

Results: Both models demonstrated strong capabilities:

- Effective web search integration

- Accurate synthesis of multiple sources

- Proper adherence to word limits and formatting requirements

Winner: Tie ✓✓

Final Results: Open Source Delivers

- gpt-oss-120B on Together AI: 4.5/5

- o4-mini: 3/5
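These totals follow from the per-test outcomes above if a win counts as 1 point, the partial success in Test 2 as 0.5, and a tie as 1 point for each model. That scoring rule is our reading of the results rather than something stated explicitly, and the short test labels are ours. A quick tally under that assumption:

```python
# (test label, gpt-oss-120B points, o4-mini points)
# Assumed scoring: win = 1, partial success = 0.5, loss = 0, tie = 1 each.
results = [
    ("Snake game", 1.0, 0.0),
    ("Pelican SVG", 0.5, 1.0),
    ("Instruction following", 1.0, 0.0),
    ("Math reasoning", 1.0, 1.0),
    ("Web synthesis", 1.0, 1.0),
]

gpt_oss_total = sum(gpt_oss for _, gpt_oss, _ in results)
o4_mini_total = sum(o4_mini for _, _, o4_mini in results)

print(f"gpt-oss-120B: {gpt_oss_total}/5")  # gpt-oss-120B: 4.5/5
print(f"o4-mini: {o4_mini_total}/5")       # o4-mini: 3.0/5
```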

The Open Source Advantage in Action

This comparison reinforces why we're bullish on open source AI. gpt-oss-120B delivers competitive performance while offering:

- Full customization rights - Fine-tune for your specific use cases

- Cost efficiency - Run inference at a fraction of proprietary model costs

- Deployment flexibility - Host on Together Cloud, your VPC, or on-premise

- No usage restrictions - Build commercial applications without limitations

Experience gpt-oss on Together AI

Ready to try these groundbreaking open source models? We've optimized gpt-oss for peak performance on our platform:

✅ Fastest inference speeds - Our inference engine delivers 4x faster performance than standard implementations

✅ Competitive pricing - Up to 11x lower costs compared to proprietary alternatives

✅ Easy integration - OpenAI-compatible APIs for seamless migration

✅ Multiple deployment options - Serverless, dedicated, or private cloud
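To illustrate what "OpenAI-compatible" means in practice, here is a minimal sketch that builds a chat completions request using only the Python standard library. The base URL and the model identifier `openai/gpt-oss-120b` are assumptions on our part; check the platform's model list and API reference for the exact values. The helper `build_chat_request` is hypothetical.

```python
import json
import urllib.request

# Assumed values; verify both against the platform documentation.
BASE_URL = "https://api.together.xyz/v1"
MODEL = "openai/gpt-oss-120b"


def build_chat_request(prompt: str, api_key: str,
                       base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Sending the request requires a valid API key and network access:
# with urllib.request.urlopen(build_chat_request("Hello", "YOUR_API_KEY")) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Because the request shape matches the OpenAI chat completions format, existing client code usually needs only a different base URL, API key, and model name.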

What This Means for Developers

These results show that high-quality AI capabilities are becoming democratized. Whether you're a startup building your first AI feature or an enterprise scaling mission-critical applications, open source models like gpt-oss offer a compelling alternative to proprietary solutions.

At Together AI, we're committed to making these cutting-edge open source models accessible, fast, and cost-effective for every developer. As the ecosystem continues evolving, we'll keep bringing you the latest and greatest open source innovations.

Ready to build with open source AI? Create your Together AI account and start experimenting with gpt-oss models today.

🎯 Technical Deep Dive Webinar: OpenAI’s Latest Models

Join us for an exclusive breakdown of how OpenAI's gpt-oss-120B and gpt-oss-20B actually work. Perfect for developers, researchers, and technical leaders who want to understand the architecture, training innovations, and practical deployment strategies.
