How Runware Scales Generative Video & Image APIs with Together AI's Flexible GPU Infrastructure

- 5-10x lower pricing vs competitors

- Same-day GPU scaling for urgent launches

- 70% cost reduction for customers

Executive Summary

Runware needed flexible GPU infrastructure to handle volatile demand for new AI models while keeping their pricing 5-10x lower than competitors.

They adopted Together AI's on-demand H100, H200, and B200 GPU access alongside their custom Sonic Inference Engine, enabling rapid model deployment without long-term hardware commitments.

As a result, they achieved same-day GPU scaling for urgent launches, the ability to test across multiple GPU generations, and a 70% cost reduction for customers switching to open-source models, all while generating 4+ billion assets for 100,000+ developers.

About Runware

Runware is a high-performance generative video and image platform. Founded by infrastructure veterans with 15+ years of experience building distributed systems at Vodafone and Booking.com, Runware combines custom-designed hardware with strategic cloud partnerships to serve 100,000+ developers and 300M end users, with 4+ billion assets generated to date.

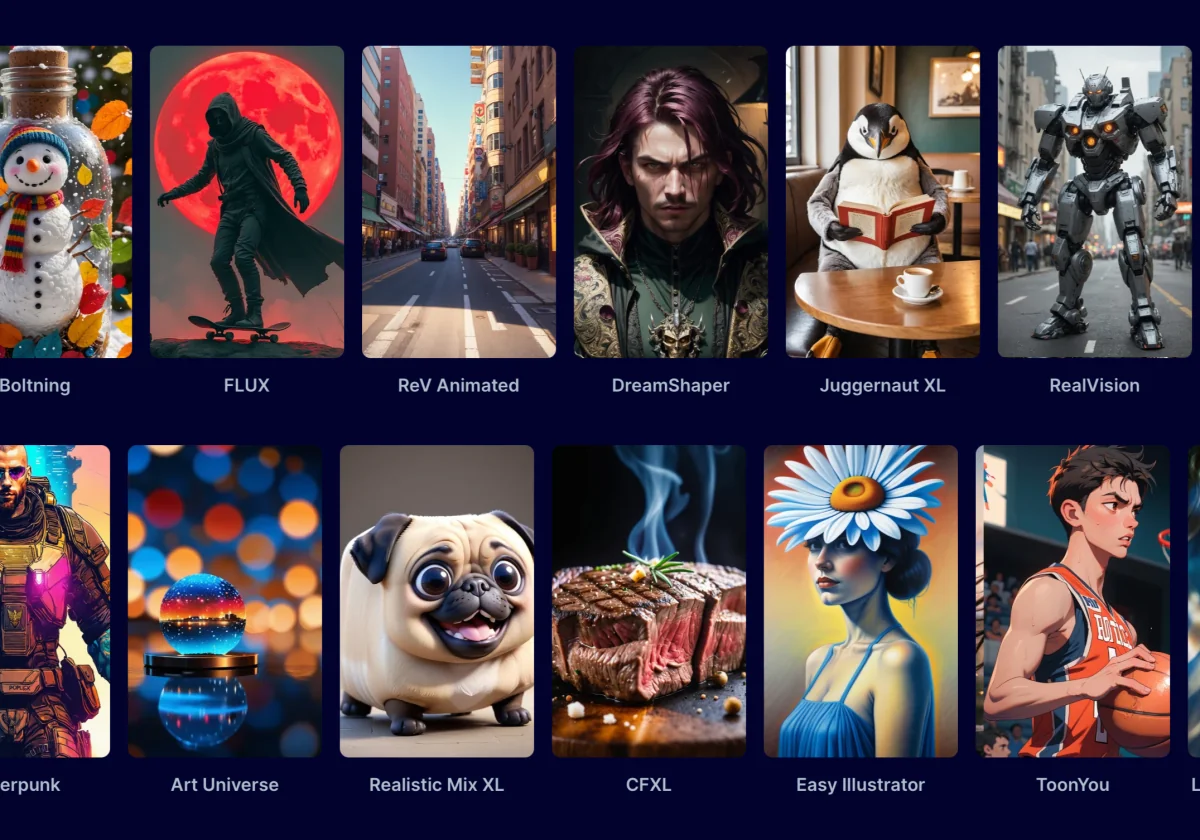

Their mission, "one API for all AI," enables developers to access any generative model (images, video, audio) with industry-leading speed at 5-10x lower cost than competitors.

The challenge

Runware’s custom infrastructure delivers exceptional throughput, but the AI model landscape changes daily:

MODEL VOLATILITY

New models launch constantly with unpredictable demand patterns. A day-zero release can increase capacity requirements 10x overnight, requiring immediate GPU access that custom hardware procurement can't match.

GENERATIONAL GPU SHIFTS

Each transition between GPU generations, from NVIDIA H100s to H200s to B200s, creates a dilemma: commit to purchasing new hardware before demand stabilizes, or risk performance gaps during model testing and validation.

MIXED SCALING PATTERNS

Large models (100GB+) must be parallelized across 4-8 GPUs on stable clusters. Smaller models need rapid hot-loading across dynamic capacity. Managing both patterns purely on custom infrastructure limits growth.
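To make the multi-GPU requirement concrete, here is a rough sizing sketch in Python. It is not Runware's method: the function name `min_gpus`, the `usable_fraction` headroom, and the power-of-two rounding are assumptions for illustration. The memory figures are nominal per-GPU capacities that vary by SKU.

```python
import math

# Nominal HBM capacity per GPU in GB. Treat these as approximate:
# actual usable memory depends on the specific SKU and system.
GPU_MEMORY_GB = {"H100": 80, "H200": 141, "B200": 192}


def min_gpus(model_gb: float, gpu: str, usable_fraction: float = 0.7) -> int:
    """Estimate the smallest power-of-two GPU count that can hold a model.

    usable_fraction is an assumed share of memory left for weights after
    reserving room for activations, caches, and runtime overhead.
    """
    usable_gb = GPU_MEMORY_GB[gpu] * usable_fraction
    needed = math.ceil(model_gb / usable_gb)
    # Round up to a power of two, a common (assumed) constraint for
    # tensor-parallel layouts.
    count = 1
    while count < needed:
        count *= 2
    return count
```

Under these assumptions, a 200 GB model needs at least 4 H100s but only 2 B200s, which illustrates why the choice of GPU generation changes the cluster shape a large model requires.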

The solution

Runware adopted a hybrid strategy: the Sonic Inference Engine (Runware’s low-latency execution layer) paired with Together AI for on-demand GPU capacity.

Key benefits:

ON-DEMAND GPU ACCESS

Together provides immediate access to NVIDIA H100s, H200s, and B200s without long-term commitments. When Runware needed H200s urgently for a major model launch, Together's team responded same-day, enabling rapid deployment without hardware procurement delays.

TOP-TIER PERFORMANCE WITH FLEXIBILITY

Together delivers industry-leading GPU performance outside of Runware's custom stack. New model deployments (Qwen, Kling) match Runware's internal benchmarks while retaining the agility that custom hardware can't provide.

MULTI-GPU TESTING

Together enables simultaneous testing across H100, H200, and B200 configurations, allowing Runware to identify optimal GPU types before committing to hardware purchases.
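One simple way to compare GPU types after such a test is cost per generated asset. The sketch below is illustrative only: `BenchmarkResult`, `cost_per_asset`, and `cheapest` are hypothetical names, and the source does not describe which metric Runware actually uses.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class BenchmarkResult:
    """One benchmark run of a single model on a single GPU type (hypothetical)."""
    gpu: str
    assets_per_hour: float  # measured throughput
    price_per_hour: float   # hourly GPU price, in any consistent currency


def cost_per_asset(result: BenchmarkResult) -> float:
    """Hourly price divided by hourly throughput."""
    if result.assets_per_hour <= 0:
        raise ValueError("assets_per_hour must be positive")
    return result.price_per_hour / result.assets_per_hour


def cheapest(results: Iterable[BenchmarkResult]) -> BenchmarkResult:
    """Return the run with the lowest cost per generated asset."""
    return min(results, key=cost_per_asset)
```

Supplying one `BenchmarkResult` per GPU type, built from your own measurements and prices, gives a like-for-like ranking. A faster GPU only wins if its throughput gain outpaces its price premium.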

Results

By partnering with Together AI, Runware achieved:

- 5-10x lower pricing than competitors by blending custom and cloud infrastructure

- Same-day GPU scaling for urgent model launches and capacity spikes

- 70% cost reduction for customers switching to open-source models on Together GPUs

How Runware serves their customers with Together AI

Runware's partnership with Together AI directly benefits their end customers. Sourceful, a premium packaging design platform using Runware's APIs, needed high-quality image generation but faced prohibitive costs with closed-source models. Runware accelerated Qwen Image Edit Plus on Together AI's infrastructure, enabling Sourceful to achieve comparable quality at 70% lower cost. Sourceful is now switching production workloads from closed-source to open-source models.

"Most GPU providers just want to sell you what they have. Together AI actually listens to what we need, which is critical when you're navigating the chaos of constant model launches. This flexibility is what enables us to maintain the lowest pricing in the industry." — Ioana Hreninciuc, Co-Founder, Runware

Use case details

Highlights

- 5-10x lower pricing

- Same-day GPU scaling

- 70% customer cost savings

- H100/H200/B200 access

- Multi-GPU testing enabled

Use case

On-demand GPU capacity for generative video & image APIs serving 100K+ developers

Company segment

AI-native infrastructure company