How The Washington Post Achieved AI Independence with Reliable Inference

2-second response time

1.79B monthly input tokens

Thousands of queries per day

Executive Summary

The Washington Post needed reliable, independent AI inference for a public-facing journalism product.

The Post deployed open models on Together AI using a hybrid of dedicated endpoints and serverless capacity, keeping full control over its models and predictable pricing.

As a result, The Post achieved consistent ~2-second responses and enterprise-grade reliability for thousands of daily queries while processing 1.79B input tokens per month.

About The Washington Post

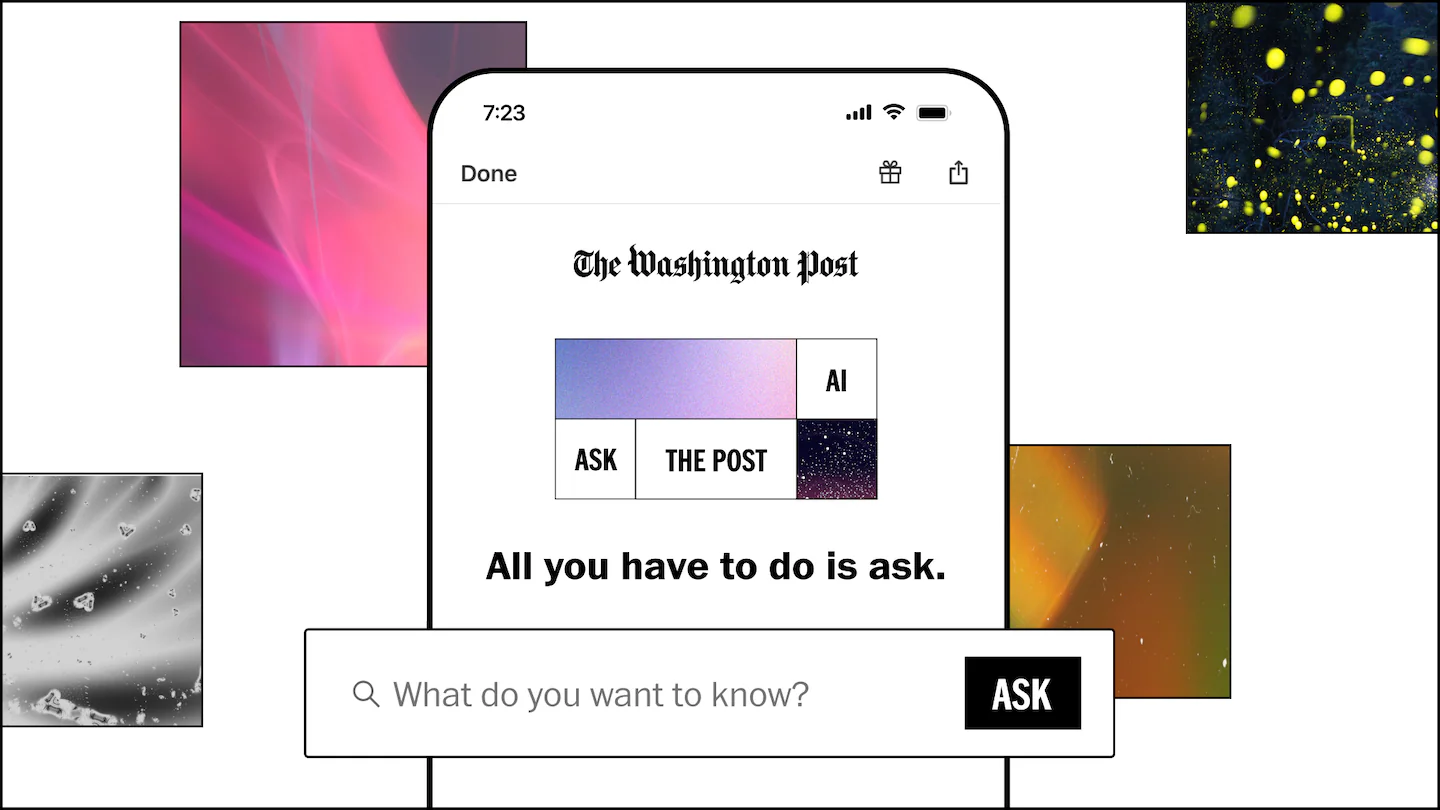

The Washington Post serves millions of users with award-winning journalism for all of America while pioneering AI innovation. Under CTO Vineet Khosla's leadership, The Post launched "Ask The Post AI" in November 2024 to answer users' questions using the publication's deeply sourced, fact-based reporting, with model inference powered by Together AI's platform.

The Challenge

The Washington Post needed reliable, independent model inference to power its AI journalism platform without vendor lock-in.

Critical Requirements:

- Enterprise-grade model inference handling thousands of daily queries

- Sub-10-second response times for real-time user interactions

- Complete model ownership vs. dependence on proprietary APIs

- Mission-critical reliability for public-facing journalism features

Why Inference Alternatives Failed:

- Proprietary Model Providers: Zero model control, unpredictable pricing, vendor dependency

- Hyperscalers: Limited availability of large GPUs, higher inference costs

- Self-Hosting Models: Massive MLOps overhead for model serving at scale

The Solution

Together AI's model inference platform delivered enterprise-grade performance with complete strategic independence.

Model Inference Infrastructure:

- Dedicated Endpoints: Custom-configured GPU infrastructure for reliable Llama and Mistral hosting

- Open-Source Model Access: Full control over model selection and deployment

- Fine-Tuning Capabilities: Available for future experimentation and optimization

- Hybrid Deployment: Combination of dedicated and serverless inference for optimal performance

Partnership Support: Together AI's engineering team worked directly with The Post on infrastructure optimization and custom configurations.

"Together AI's flexibility in creating customized configurations for our journalism use case has been invaluable to our success."

— Sam Han, Chief AI Officer, The Washington Post.

Results

Enterprise Impact Delivered

✓ Massive Scale: Processing 1.79 billion input tokens monthly with consistent 2-second response times

✓ Cost Predictability: Fixed monthly pricing vs. volatile per-token costs that scale unpredictably

✓ User Engagement Success: Searches drive clicks to expand answers and to open source articles, increasing the visibility of the underlying journalism

✓ Model Independence: Own and control Llama/Mistral models vs. API dependency on proprietary providers


Use case details


Highlights

- 2-second responses

- 1.79B input tokens per month

- Fixed monthly pricing

- Own Llama & Mistral models

- Hybrid dedicated + serverless

Use case: AI search over newsroom content

Company segment: Large enterprise