Blackwell Ultra AI Factory is now on Together AI

Scale your AI Factory with next-gen AI reasoning performance on NVIDIA GB300 NVL72 GPU clusters.

Why NVIDIA GB300 NVL72 on Together GPU Clusters?

Rack-scale reasoning performance. Delivered ready for production.

A single 72-GPU NVLink domain for reasoning at scale

GB300 NVL72 unifies 72 Blackwell Ultra GPUs and 36 Grace CPUs in one platform, optimized for test-time scaling inference.

800 Gb/s per-GPU networking

With NVIDIA ConnectX-8 SuperNIC, each GPU gets 800 Gb/s of RDMA networking over Quantum-X800 InfiniBand or Spectrum-X Ethernet, sustaining cluster-wide efficiency under heavy concurrency.

Purpose-built for AI reasoning throughput

Compared to Hopper, GB300 NVL72 delivers 10× higher TPS per user and 5× higher TPS per megawatt, combining to 50× higher AI-factory output—a step-change for production inference.
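As a rough illustration of how the two ratios compose into the 50× figure, the sketch below multiplies the per-user gain by the per-megawatt gain. It assumes the two gains are independent and combine by simple multiplication; the function name is hypothetical and not part of any Together AI or NVIDIA tooling.

```python
def combined_output_gain(tps_per_user_gain: float, tps_per_megawatt_gain: float) -> float:
    """Multiply two relative gains, both measured against the same Hopper baseline."""
    return tps_per_user_gain * tps_per_megawatt_gain


# Figures quoted in the paragraph above: 10x per user, 5x per megawatt.
gain = combined_output_gain(10, 5)
print(f"Combined AI-factory output gain: {gain:.0f}x")
```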

Massive on-rack memory for frontier contexts

Run long-context LLMs and agentic workloads with up to 21 TB of aggregate GPU HBM (up to 576 TB/s bandwidth) and up to 40 TB of fast memory: headroom that keeps tokens flowing and batch sizes high.
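To put the rack-level memory figures in per-GPU terms, here is a minimal sketch that divides the quoted totals evenly across the 72 GPUs. It assumes decimal units (1 TB = 1,000 GB) and an even split; because the quoted totals are rounded "up to" figures, the results are approximate. The helper name is hypothetical.

```python
GPUS_PER_RACK = 72  # GB300 NVL72: 72 Blackwell Ultra GPUs per rack


def per_gpu_share(rack_total: float, gpus: int = GPUS_PER_RACK) -> float:
    """Evenly divide a rack-level total across the GPUs in the rack."""
    return rack_total / gpus


# 21 TB of aggregate HBM, expressed in GB (assumes 1 TB = 1,000 GB).
hbm_per_gpu_gb = per_gpu_share(21_000)
# 576 TB/s of aggregate HBM bandwidth.
bandwidth_per_gpu_tbs = per_gpu_share(576)

print(f"HBM per GPU: about {hbm_per_gpu_gb:.0f} GB")
print(f"HBM bandwidth per GPU: about {bandwidth_per_gpu_tbs:.0f} TB/s")
```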

Power-aware rack design

New rack-level power technology smooths AI workload spikes and can reduce peak grid demand by up to 30%, helping you scale sustainably without sacrificing performance.

Run by the same people pushing the Blackwell stack forward

Work with engineers who co‑develop Blackwell optimizations; our team continually tunes workloads and publishes cutting‑edge training breakthroughs.

“What truly elevates Together AI to ClusterMax™ Gold is their exceptional support and technical expertise. Together AI’s team, led by Tri Dao — the inventor of FlashAttention — and their Together Kernel Collection (TKC), significantly boost customer performance. We don’t believe the value created by Together AI can be replicated elsewhere without cloning Tri Dao.”

— Dylan Patel, Chief Analyst, SemiAnalysis

Built for the age of reasoning at AI Factory scale

GB300 NVL72 is a liquid-cooled, rack-scale system that merges Blackwell Ultra GPUs and Grace CPUs into a single NVLink domain, purpose-built for the age of reasoning.

Accelerated Compute

Blackwell Ultra Tensor Cores: 2× attention-layer acceleration and 1.5× more AI compute FLOPS than Blackwell, plus FP4 support for dense reasoning throughput.

NVLink 5 + NVSwitch: 130 TB/s rack-scale GPU fabric to minimize cross-GPU latency for sharded LLMs and MoE.

Data-center networking: 800 Gb/s per GPU via ConnectX-8 SuperNIC on Quantum-X800 InfiniBand or Spectrum-X Ethernet.
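The fabric and networking figures above can be related to each other with some simple unit arithmetic. The sketch below converts the 800 Gb/s per-GPU network rate to gigabytes per second, sums it across the rack, and divides the 130 TB/s NVLink figure evenly across 72 GPUs. The even split and the decimal unit conversions are assumptions for illustration, and the function names are hypothetical.

```python
GPUS_PER_RACK = 72          # GB300 NVL72
NIC_GBITS_PER_GPU = 800     # ConnectX-8 SuperNIC, gigabits per second per GPU
NVLINK_RACK_TBYTES = 130    # NVLink 5 + NVSwitch, terabytes per second per rack


def gbits_to_gbytes(gbits_per_s: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbits_per_s / 8


def rack_network_tbits(gbits_per_gpu: float, gpus: int = GPUS_PER_RACK) -> float:
    """Aggregate per-GPU network bandwidth across the rack, in terabits per second."""
    return gbits_per_gpu * gpus / 1000


nic_gbytes = gbits_to_gbytes(NIC_GBITS_PER_GPU)
rack_tbits = rack_network_tbits(NIC_GBITS_PER_GPU)
nvlink_per_gpu = NVLINK_RACK_TBYTES / GPUS_PER_RACK  # assumes an even split

print(f"Network per GPU: {nic_gbytes:.0f} GB/s")
print(f"Network per rack: {rack_tbits:.1f} Tb/s")
print(f"NVLink per GPU: about {nvlink_per_gpu:.1f} TB/s")
```

The gap between the NVLink figure and the network figure per GPU is why sharded models and MoE layers benefit from staying inside a single NVLink domain where possible.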

TPS per user relative to Hopper GPUs

TPS per megawatt relative to Hopper GPUs

AI performance relative to GB200 NVL72

Technical Specs

NVIDIA GB300 NVL72

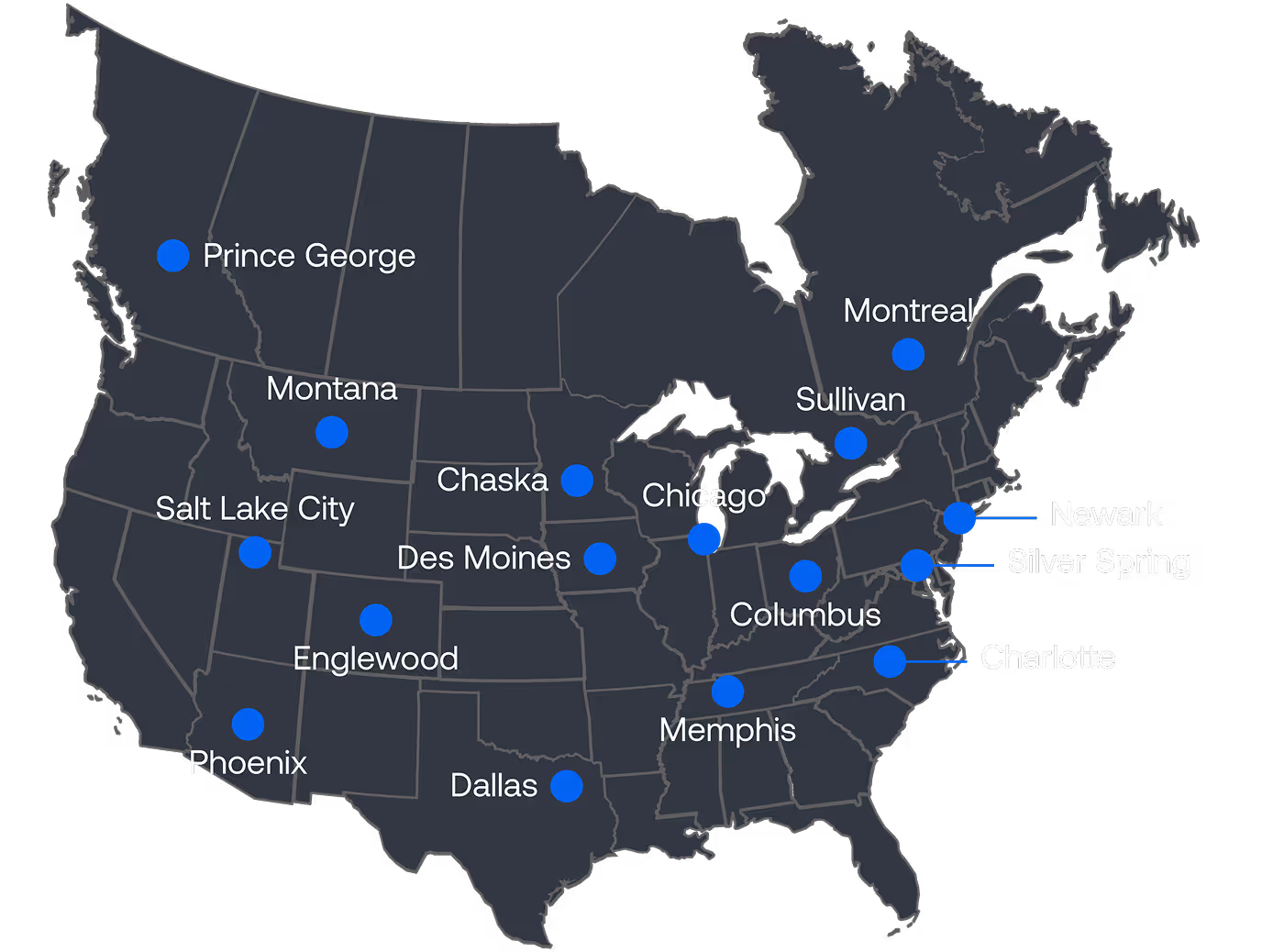

AI Data Centers and Power across North America

Data Center Portfolio

2 GW+ in the portfolio, with 600 MW of near-term capacity.

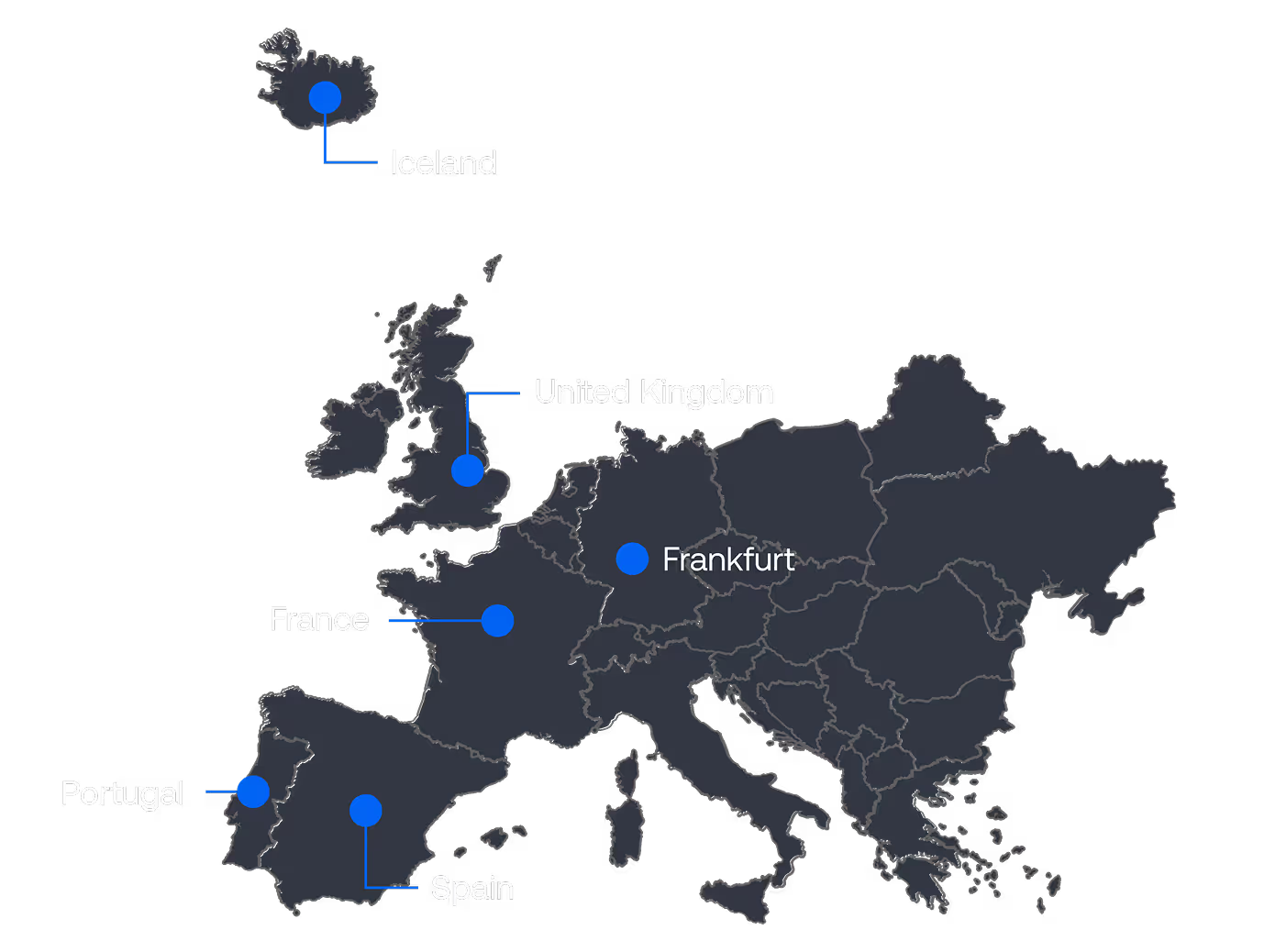

Expansion Capability in Europe and Beyond

150 MW+ available in Europe: UK, Spain, France, Portugal, and Iceland.

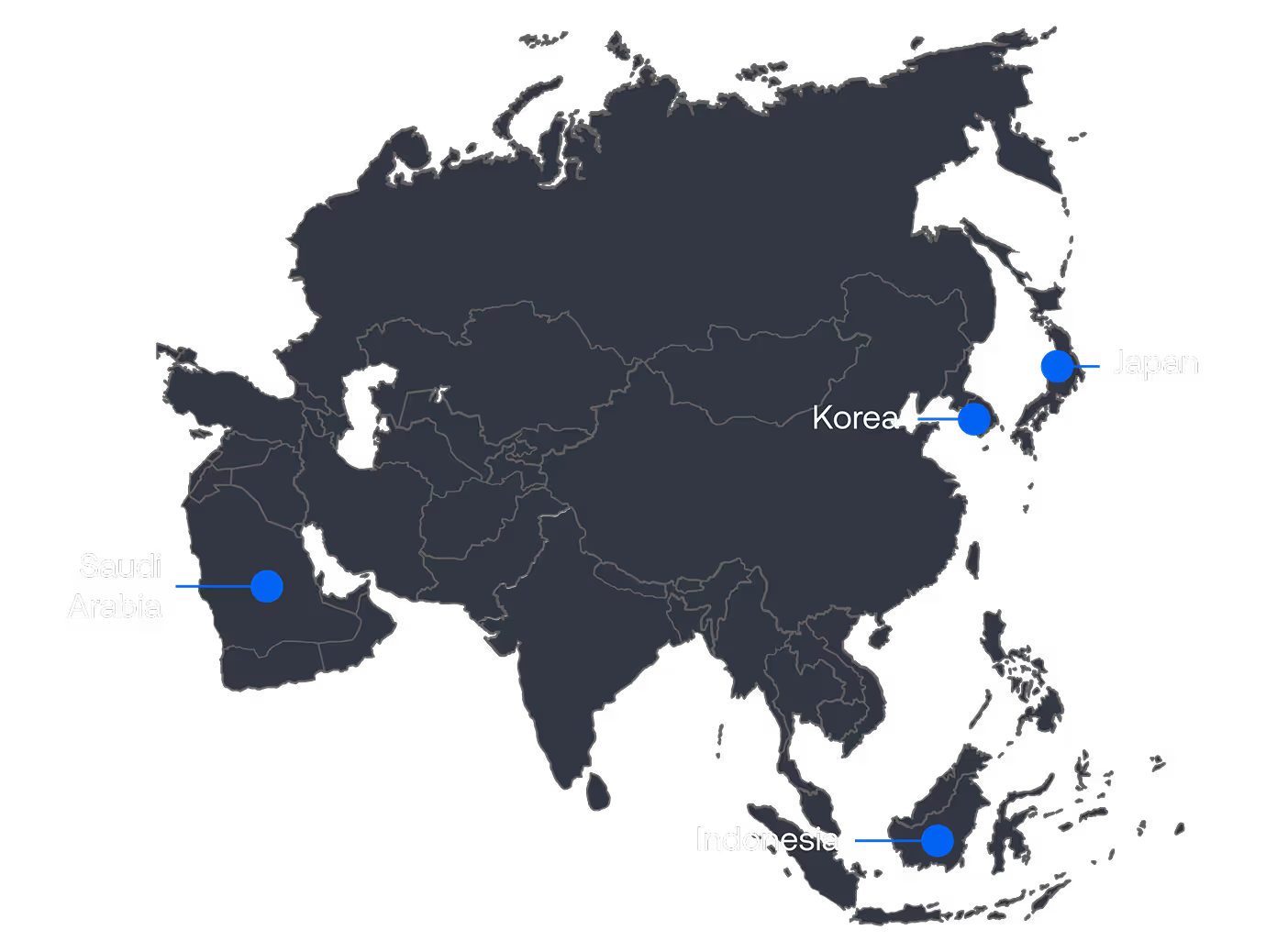

Next Frontiers – Asia and the Middle East

Options available based on the scale of the projects in Asia and the Middle East.

Powering AI Pioneers

Leading AI companies are ramping up with NVIDIA Blackwell running on Together AI.

Zoom partnered with Together AI to leverage our research and deliver accelerated performance when training the models powering various Zoom AI Companion features.

With Together GPU Clusters accelerated by NVIDIA HGX B200, Zoom experienced a 1.9× improvement in training speeds out of the box over previous-generation NVIDIA Hopper GPUs.

Salesforce leverages Together AI for the entire AI journey, from training to fine-tuning to inference of the models that deliver Agentforce.

Training a Mistral-24B model, Salesforce saw a 2× improvement in training speeds when upgrading from NVIDIA HGX H200 to HGX B200. This is accelerating how Salesforce trains models and integrates research results into Agentforce.

During initial tests with the NVIDIA HGX B200, InVideo immediately saw a 25% improvement when moving a training job over from NVIDIA HGX H200.

Then, in partnership with our researchers, the team made further optimizations and more than doubled this improvement – making the step up to the NVIDIA Blackwell platform even more appealing.

Our latest research & content

Learn more about running turbocharged NVIDIA GB200 NVL72 GPU clusters on Together AI.
