DSGym: A holistic framework for evaluating and training data science agents


Summary

Current data science benchmarks rely on incompatible evaluation interfaces. Moreover, many tasks can be solved without using the underlying data. We address these limitations by introducing DSGym, an integrated framework for evaluating and training data science agents in self-contained execution environments. Using DSGym, we trained a state-of-the-art open-source data science agent.

arXiv paper: https://arxiv.org/abs/2601.16344

GitHub repo: https://github.com/fannie1208/DSGym

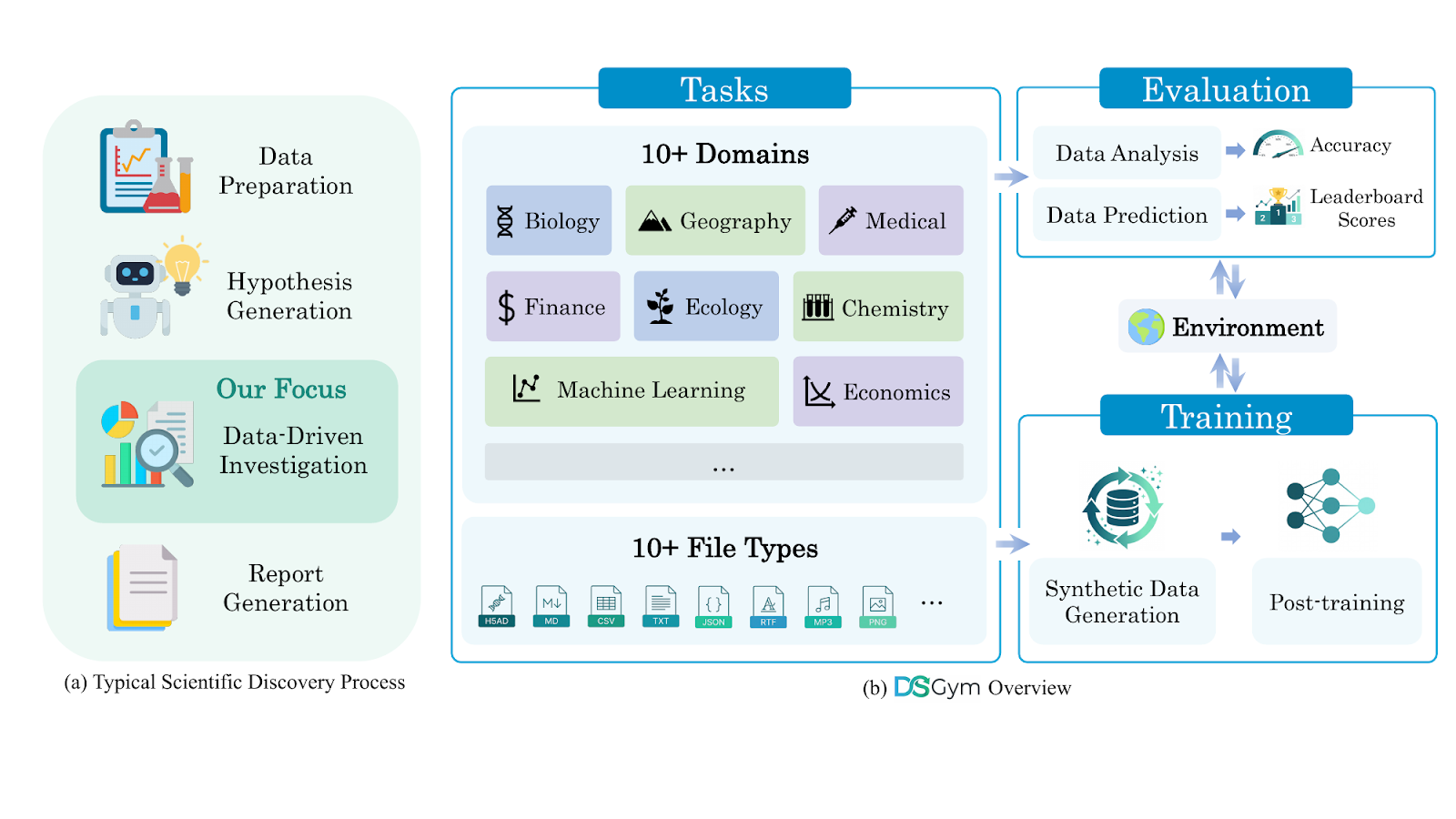

Data science serves as the computational engine of modern scientific discovery. However, evaluating and training LLM-based data science agents remains challenging because existing benchmarks assess isolated skills in heterogeneous execution environments, making integration costly and fair comparisons difficult.

We introduce DSGym, a unified framework that integrates diverse data science evaluation suites behind a single API with standardized abstractions for datasets, agents, and metrics. DSGym unifies and refines existing benchmarks while expanding the scope with novel scientific analysis tasks (90 bioinformatics tasks from academic literature) and challenging end-to-end modeling competitions (92 Kaggle competitions). Beyond evaluation, DSGym provides trajectory generation and synthetic query pipelines for agent training—we demonstrate this by training a 4B model on 2k generated examples, achieving state-of-the-art performance among open-source models.

Framework and datasets

One of the main contributions of DSGym is that it abstracts the complexity of code execution behind containers that are allocated on demand to run code safely. Each container comes with pre-installed dependencies and the task data ready for processing.

DSGym provides a unified JSON interface for all benchmarks, where each task is expressed as data files, a query prompt, an evaluation metric, and metadata. We keep the design modular and straightforward so that users can add new tasks, agent scaffolds, tools, and evaluation scripts with little effort. The tasks in DSGym are categorized into two primary tracks:

- Data Analysis (query-answering via programmatic analysis).

- Data Prediction (end-to-end ML pipeline development).
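The task format described above can be illustrated with a small sketch. The field names (`data_files`, `query`, `metric`, `metadata`), the example values, and the `Task` class itself are assumptions made for illustration; they are not taken from the DSGym codebase, whose actual schema may differ.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Task:
    """Hypothetical task record; field names are illustrative, not DSGym's schema."""
    data_files: list[str]          # paths to the files the agent may read
    query: str                     # natural-language prompt given to the agent
    metric: str                    # name of the evaluation metric
    metadata: dict = field(default_factory=dict)  # e.g. track, source benchmark

    def to_json(self) -> str:
        # Serialize to a JSON string with stable key order.
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "Task":
        # Rebuild a Task from its JSON representation.
        return cls(**json.loads(raw))


example = Task(
    data_files=["data/train.csv"],
    query="What is the median age of passengers?",
    metric="exact_match",
    metadata={"track": "data_analysis"},
)
round_tripped = Task.from_json(example.to_json())
```

Keeping every benchmark in one record shape like this is what lets a single agent loop and a single evaluator run across otherwise unrelated suites.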

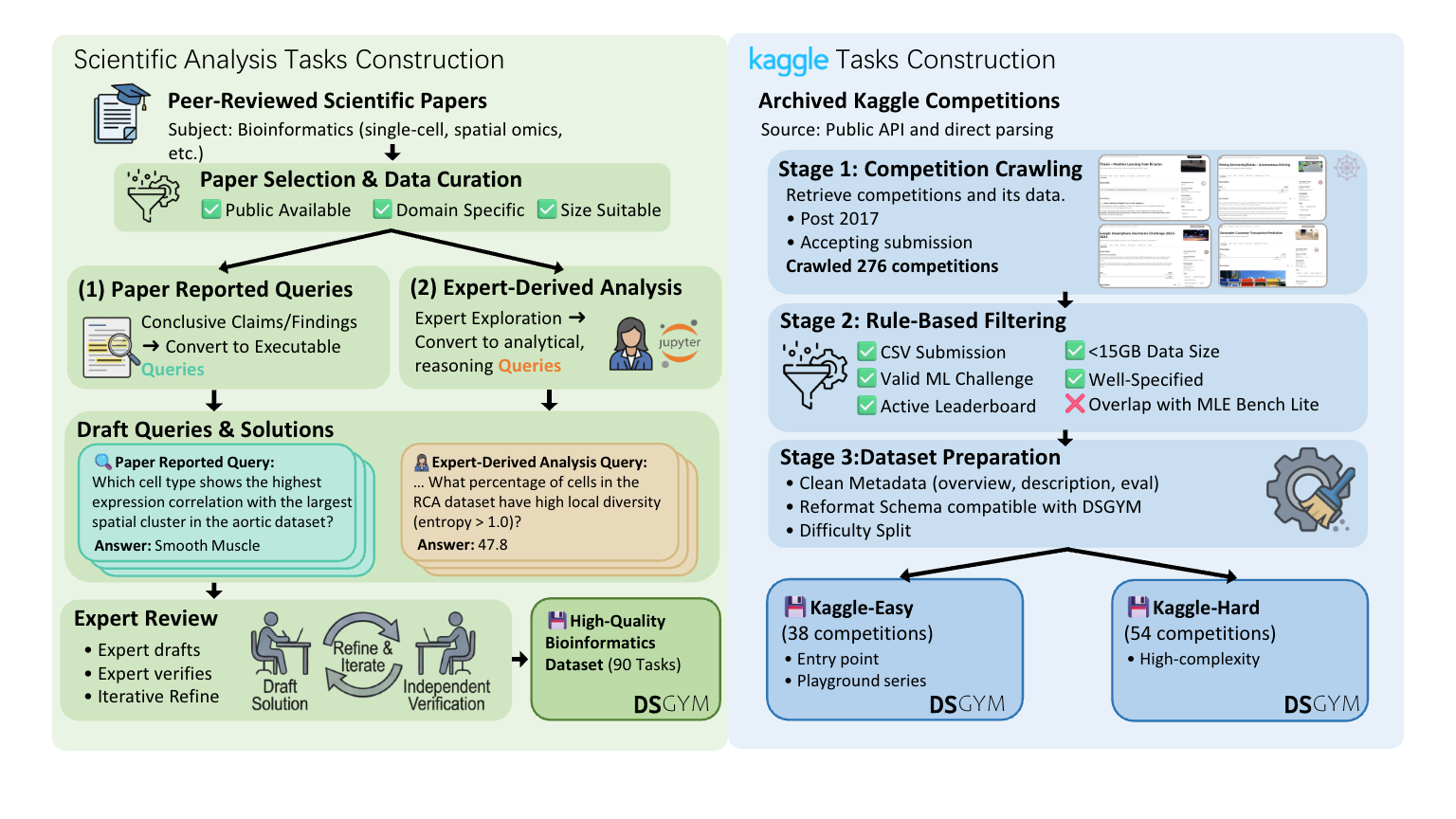

In addition to integrating established benchmarks like MLEBench and QRData, DSGym introduces original datasets. Specifically, we expand the general scope by creating two novel suites: DSBio (90 bioinformatics tasks from academic literature probing domain-specific workflows) and DSPredict (92 Kaggle competitions spanning time series, computer vision, molecular property prediction, and single-cell perturbation). The next figure summarizes our creation process for these two suites:

To support task execution and data generation, DSGym provides a pipeline that executes queries and generates trajectories, turning the framework into a data factory for training models.

Using this pipeline, we generated 3,700 synthetic queries. After applying LLM-based quality filtering, we obtained 2,000 high-quality query-trajectory pairs for supervised finetuning. Our results (presented next) demonstrate that these data can be an effective way to improve model performance on data science tasks, even for small models.
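The filtering step can be sketched as a scoring function applied to each query-trajectory pair, followed by a threshold. This is a minimal sketch under stated assumptions: `toy_judge` is a rule-based stand-in for the LLM-based judge, and the pair layout and the `threshold` value are hypothetical. Only the counts 3,700 and 2,000 come from the text.

```python
from typing import Callable, Iterable


def filter_pairs(
    pairs: Iterable[dict],
    judge: Callable[[dict], float],
    threshold: float = 0.5,
) -> list[dict]:
    """Keep only the pairs whose judge score reaches the threshold."""
    return [p for p in pairs if judge(p) >= threshold]


def toy_judge(pair: dict) -> float:
    """Stand-in for an LLM judge: 1.0 if the trajectory has steps and a final answer."""
    has_steps = len(pair["trajectory"]) > 0
    has_answer = pair["answer"] is not None
    return 1.0 if has_steps and has_answer else 0.0


def retention_rate(n_generated: int, n_kept: int) -> float:
    """Fraction of generated examples that survive filtering."""
    return n_kept / n_generated


# Hypothetical pairs, for illustration only.
pairs = [
    {"query": "q1", "trajectory": ["load csv", "compute mean"], "answer": "4.2"},
    {"query": "q2", "trajectory": [], "answer": None},
    {"query": "q3", "trajectory": ["fit model"], "answer": None},
]
kept = filter_pairs(pairs, toy_judge)

# With the counts reported above, roughly 54% of generated queries are retained.
rate = retention_rate(3700, 2000)
```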

Results

We present here our main findings. Additional results are available in the paper.

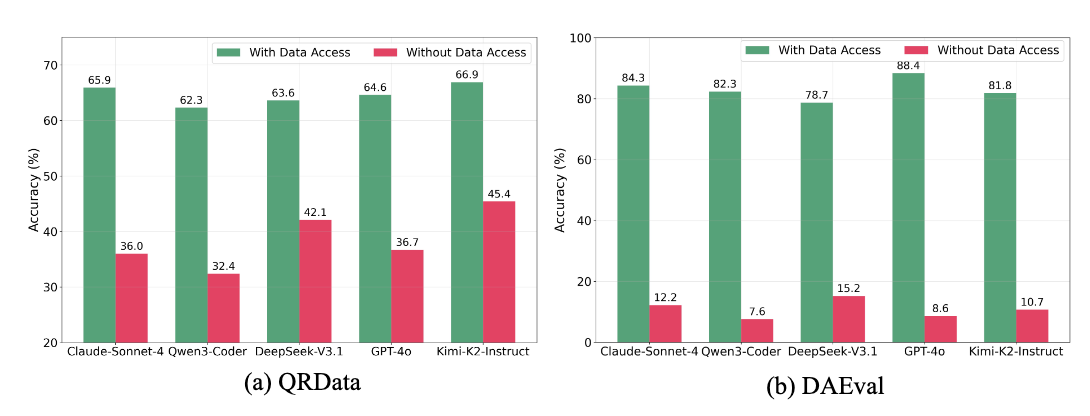

Addressing the memorization gap

A first and important result concerns memorization. We observe that many existing benchmark queries provide weak signals: a non-trivial fraction remains solvable even without data file access, suggesting LLMs might have learned about these tasks during training.

We therefore flag and exclude examples that are likely to be in the models' training sets. DSGym applies quality filtering and prompt-only shortcut filtering to remove such tasks, producing refined datasets: DAEval-Verified, QRData-Verified, DABStep, and MLEBench-Lite.
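One way to picture prompt-only shortcut filtering: ask a model to answer each query without any data files, and flag the tasks it still gets right. The sketch below assumes a hypothetical `answer_without_data` callable standing in for the model and a hypothetical `is_correct` checker; it is not the procedure implemented in DSGym.

```python
from typing import Callable


def split_prompt_only_shortcuts(
    tasks: list[dict],
    answer_without_data: Callable[[str], str],
    is_correct: Callable[[str, str], bool],
) -> tuple[list[dict], list[dict]]:
    """Return (kept, flagged): flagged tasks are solvable from the prompt alone."""
    kept, flagged = [], []
    for task in tasks:
        # The model sees only the query text, never the data files.
        guess = answer_without_data(task["query"])
        if is_correct(guess, task["answer"]):
            flagged.append(task)
        else:
            kept.append(task)
    return kept, flagged


# Toy stand-in for a model that has memorized one benchmark answer.
memorized = {"How many rows are in iris.csv?": "150"}


def toy_model(query: str) -> str:
    return memorized.get(query, "unknown")


def exact_match(guess: str, gold: str) -> bool:
    return guess.strip() == gold.strip()


# Hypothetical tasks, for illustration only.
tasks = [
    {"query": "How many rows are in iris.csv?", "answer": "150"},
    {"query": "What is the mean of column x in run_17.csv?", "answer": "3.91"},
]
kept, flagged = split_prompt_only_shortcuts(tasks, toy_model, exact_match)
```

In practice one would likely repeat the no-data attempt several times or across several models before flagging a task, since a single lucky guess is weak evidence of memorization.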

Benchmark performance & failure modes

After creating these new benchmarks, we test frontier proprietary and open-weight LLMs across general-purpose data science and domain-specific scientific tasks.

Our trained 4B model (Qwen3-4B-DSGym-SFT-2k) achieves competitive performance with much larger models on general analysis benchmarks.

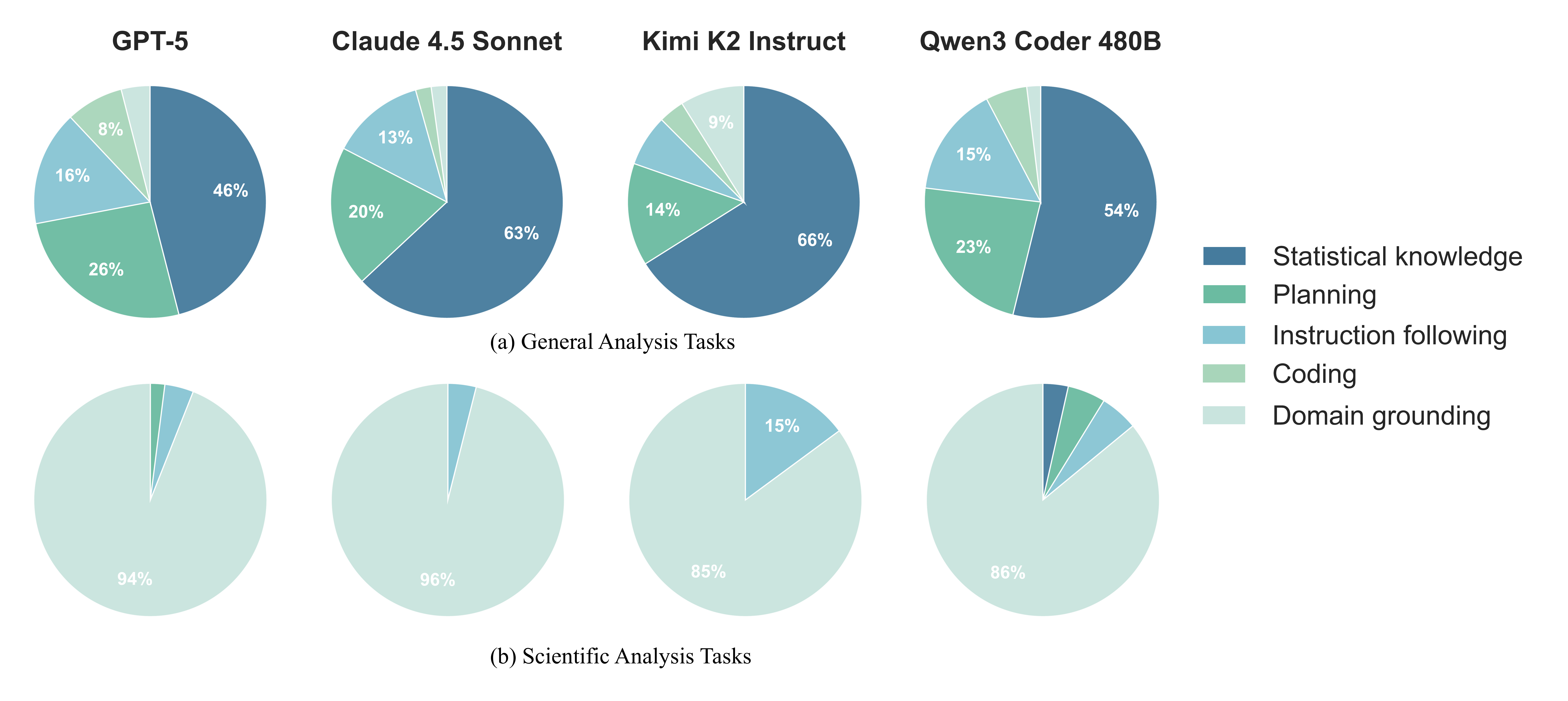

Interestingly, most models are still far from getting perfect scores on these benchmarks. To understand why models fail on these tasks, we conducted a manual error analysis of 50 randomly sampled failed trajectories per model and task family. This analysis reveals an interesting pattern: while general analysis tasks show diverse failure modes, with statistical knowledge gaps and planning errors being most common, scientific analysis tasks are dominated by a single failure mode.

Data prediction performance

DSPredict evaluates the ability of agents to build complete machine learning pipelines—from raw data to a final model—mimicking the complexity of Kaggle competitions.

We evaluate models on DSPredict-Easy and DSPredict-Hard splits. Performance is measured by:

- Valid Submission: Successful generation of a correctly formatted output file.

- Median/Percentile: Performance relative to the original competition leaderboard.

- Medal: Achieving score thresholds equivalent to Bronze, Silver, or Gold medals.
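The leaderboard-relative metrics can be sketched as follows. This is an illustrative sketch, not DSGym's scoring code: the function names, the percentile definition (share of leaderboard entries strictly beaten), and the medal thresholds passed in as a dictionary are all assumptions.

```python
import statistics
from typing import Optional


def percentile_rank(score: float, leaderboard: list[float],
                    higher_is_better: bool = True) -> float:
    """Percentage of leaderboard entries that the score strictly beats."""
    if not leaderboard:
        raise ValueError("leaderboard must not be empty")
    if higher_is_better:
        beaten = sum(1 for s in leaderboard if score > s)
    else:
        beaten = sum(1 for s in leaderboard if score < s)
    return 100.0 * beaten / len(leaderboard)


def beats_median(score: float, leaderboard: list[float],
                 higher_is_better: bool = True) -> bool:
    """True if the score is strictly better than the leaderboard median."""
    median = statistics.median(leaderboard)
    return score > median if higher_is_better else score < median


def medal(score: float, thresholds: dict[str, float],
          higher_is_better: bool = True) -> Optional[str]:
    """Return the best medal whose threshold the score meets, or None."""
    for name in ("gold", "silver", "bronze"):
        cutoff = thresholds[name]
        reached = score >= cutoff if higher_is_better else score <= cutoff
        if reached:
            return name
    return None


# Hypothetical leaderboard and thresholds, for illustration only.
leaderboard = [0.5, 0.6, 0.7, 0.8, 0.9]
thresholds = {"gold": 0.9, "silver": 0.8, "bronze": 0.7}
```

The `higher_is_better` flag matters because competitions mix metrics such as accuracy (maximize) and RMSE (minimize), and a shared scoring function has to handle both directions.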

We use a simple CodeAct-like scaffold. Each agent has a total time budget of 10 hours, with a limit of 2 hours for each code execution.
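The two time limits can be enforced with a wall-clock deadline for the whole run and a per-call timeout that never exceeds the remaining budget. The loop below is a rough sketch under stated assumptions: it runs code in a local Python subprocess rather than in a container, and `next_code` is a hypothetical callable standing in for the agent's policy. Only the 10-hour and 2-hour figures come from the text.

```python
import subprocess
import sys
import time
from typing import Callable, Optional

TOTAL_BUDGET_S = 10 * 3600    # total time per agent run
PER_EXEC_LIMIT_S = 2 * 3600   # limit for a single code execution


def run_step(code: str, timeout_s: float) -> str:
    """Execute one code snippet in a subprocess and return its combined output."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True, text=True, timeout=timeout_s,
        )
        return proc.stdout + proc.stderr
    except subprocess.TimeoutExpired:
        return f"[timeout after {timeout_s:.0f}s]"


def run_agent(
    next_code: Callable[[list[str]], Optional[str]],
    total_budget_s: float = TOTAL_BUDGET_S,
    per_exec_limit_s: float = PER_EXEC_LIMIT_S,
) -> list[str]:
    """Alternate between asking for code and executing it until time runs out."""
    deadline = time.monotonic() + total_budget_s
    observations: list[str] = []
    while True:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break  # total budget exhausted
        code = next_code(observations)  # hypothetical agent policy
        if code is None:
            break  # the agent decided it is done
        # A single execution may use at most the per-call limit or what is left.
        observations.append(run_step(code, min(per_exec_limit_s, remaining)))
    return observations


# Toy policy: emit one snippet, then stop.
steps = iter(["print(1 + 1)", None])
observations = run_agent(lambda obs: next(steps))
```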

Our analysis of the DSPredict results reveals several critical insights into the current capabilities and limitations of LLM agents in end-to-end ML workflows.

High reliability, low competitiveness: While frontier models (like GPT-5.1 and Claude 4.5) are excellent at creating functional pipelines—achieving over 85% valid submission rates—they struggle to be competitive. Very few models can consistently beat the human median on "Hard" tasks.

A major bottleneck is the tendency for models to choose the path of least resistance. When faced with technical friction or complex data, agents often default to simple baselines or "safe" heuristics rather than pursuing high-performance modeling strategies.

Reasoning vs. scale: High-reasoning models (GPT-5.1-high) show a significant lead, suggesting that the "skeptical" persistence required for data science—tuning, validating, and iterating—is currently a more critical factor than raw parameter count.

Conclusion

DSGym provides a unified framework for evaluating and training data science agents. We expose a fundamental challenge in current approaches: models rely heavily on memorization for general tasks while failing to ground their analysis in domain knowledge for scientific problems.

By offering standardized benchmarks spanning both task types, DSGym enables systematic investigation of how to build agents that truly reason about data rather than recall patterns. We also release a capable open-source data science agent that is easy to develop and deploy. We hope this resource accelerates progress toward more reliable and generalizable data science automation.
