The Together AI Platform

Accelerate training, fine-tuning and inference on performance-optimized GPU clusters

Reliable at production scale

Built for scale, with customers going to trillions of tokens in a matter of hours without any depletion in experience.

Industry-leading unit economics

Continuously optimizing across inference and training to keep improving performance, delivering better total cost of ownership.

Frontier AI systems research

Proven infra and research teams ensure the latest models, hardware, and techniques are made available on day 1.

Full stack development for AI‑native apps

Model Library

Model Library

Evaluate and build with open-source and specialized models for chat, images, videos, code, and more.

Migrate from closed models with OpenAI-compatible APIs.

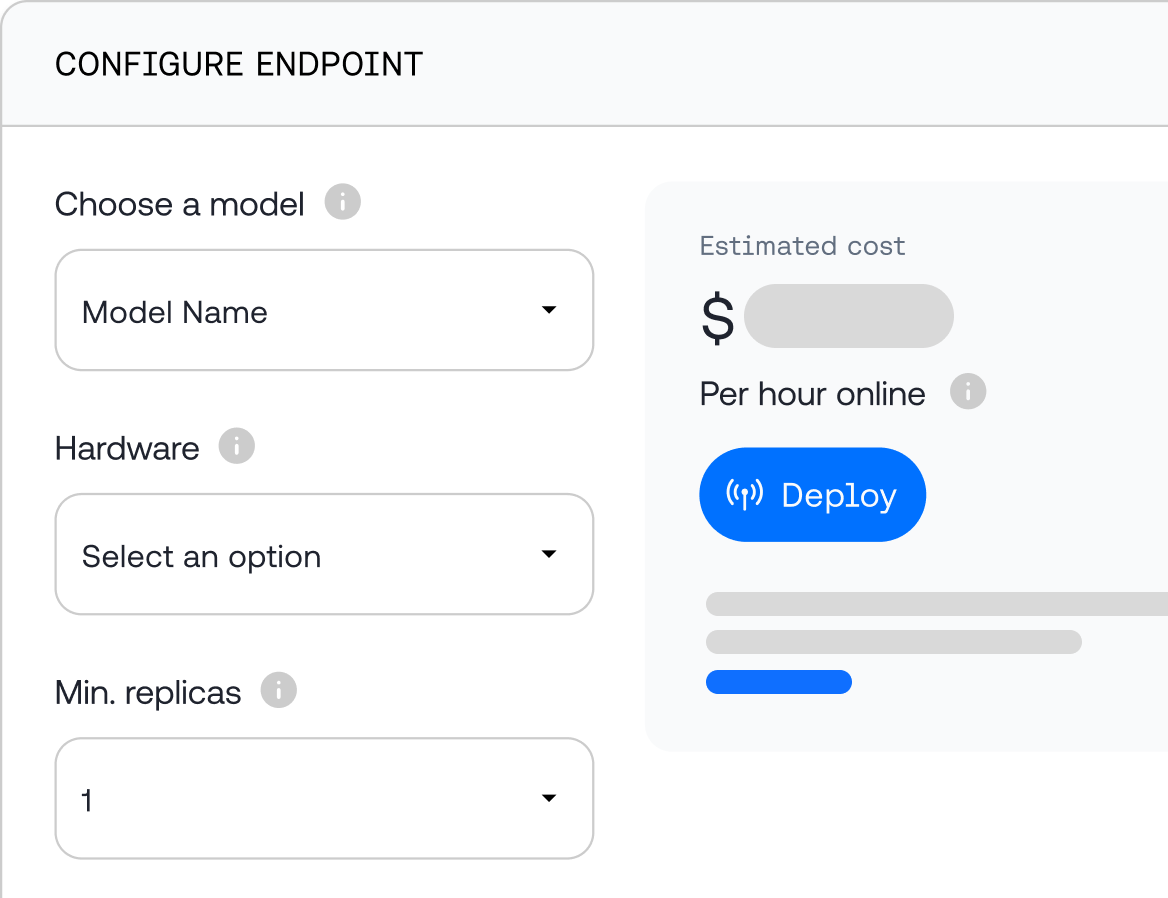

Inference

Inference

Reliably deploy models with unmatched price-performance at scale. Benefit from inference-focused innovations like the ATLAS speculator system and Together Inference Engine.

Deploy on hardware of choice, such as NVIDIA GB200 NVL72 and GB300 NVL72.

Fine-Tuning

Fine-Tuning

Fine-tune open-source models with your data to create task-specific, fast, and cost-effective models that are 100% yours.

Easily deploy into production through Together AI's highly performant inference stack.

Pre-Training

Pre-Training

Securely and cost effectively train your own models from the ground up, leveraging research breakthroughs such as Together Kernel Collection (TKC) for reliable and fast training.

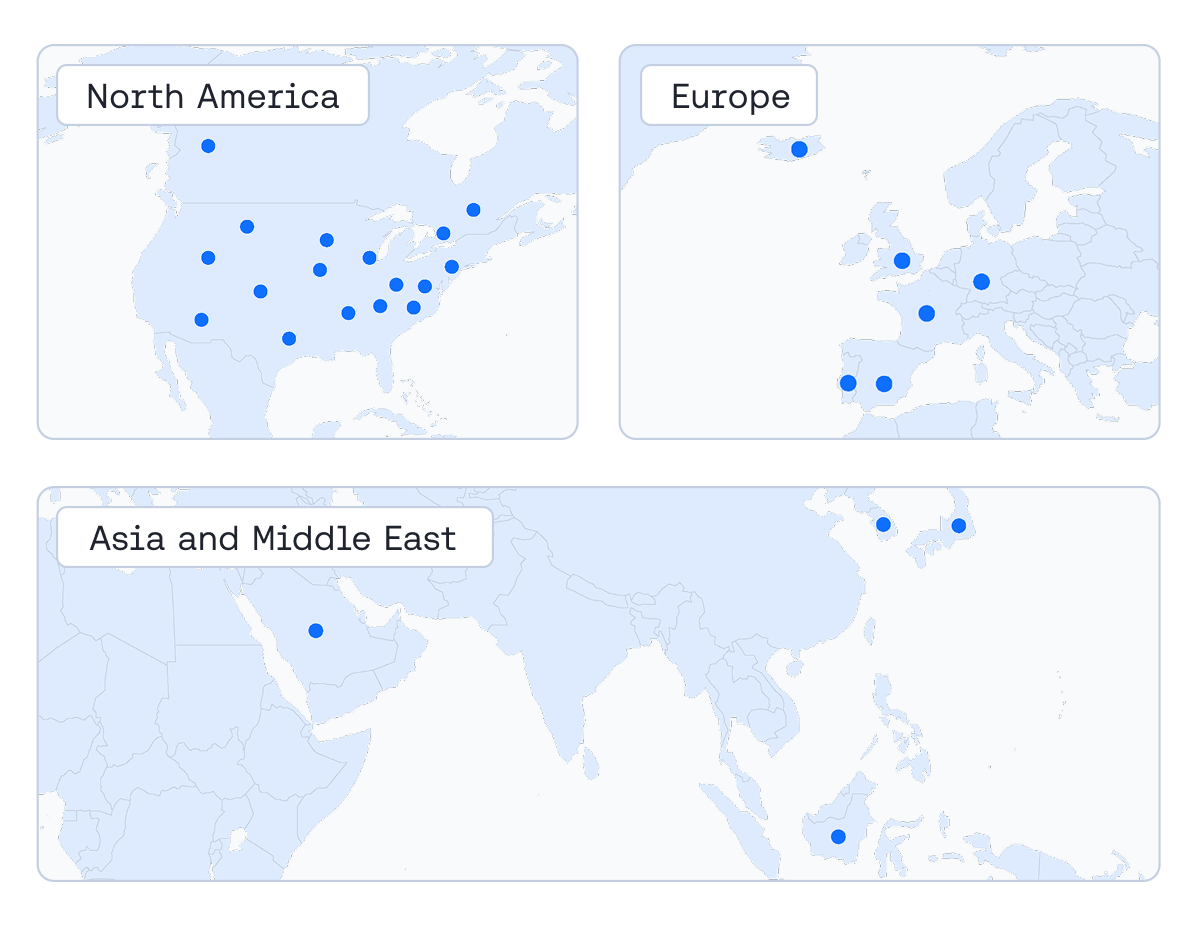

GPU Clusters

GPU Clusters

Scale globally with our fleet of data centers (DCs) across the globe.

These DCs feature frontier hardware such as NVIDIA GB200 NVL72 and GB300 NVL72.

Developers can go from self-serve instant clusters to custom AI factories for high-scale workloads.

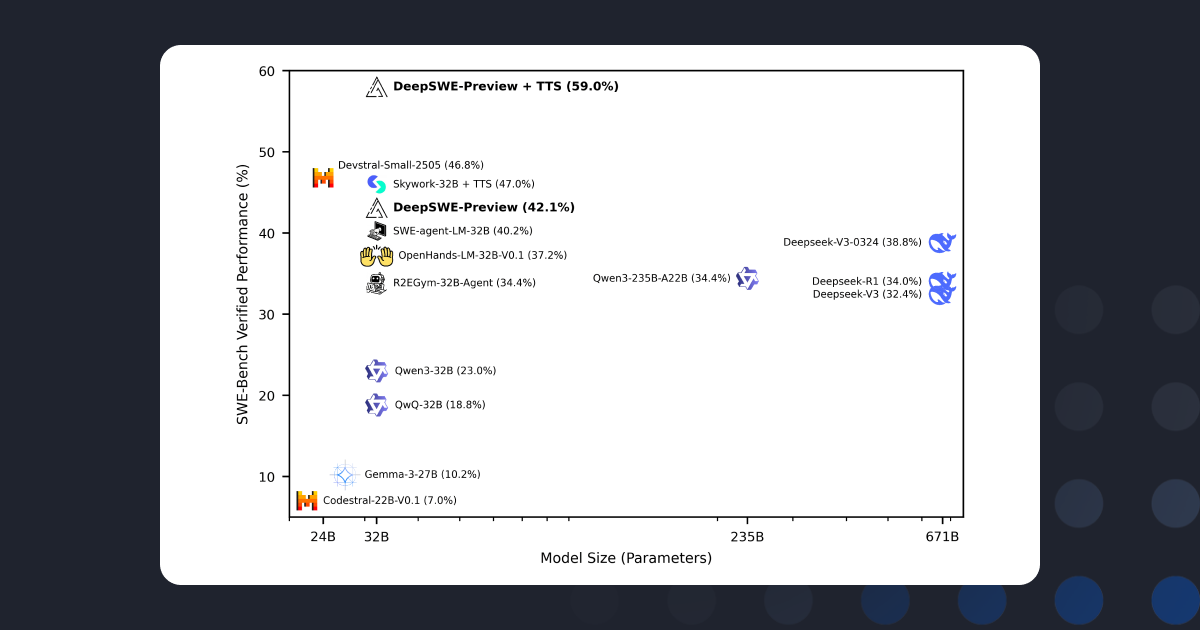

Industry leading AI research and open-source contributions

FlashAttention

Mixture of Agents

Dragonfly

Red Pajama Datasets

DeepCoder

Open Deep Research

Flash Decoding

Open Data Scientist Agent

Proven results

Get to market faster and save costs with breakthrough innovations

Faster

Inference3.5x

Faster

Training2.3x

Lower

Cost20%

Network

Compression117x

.webp)

.jpg)